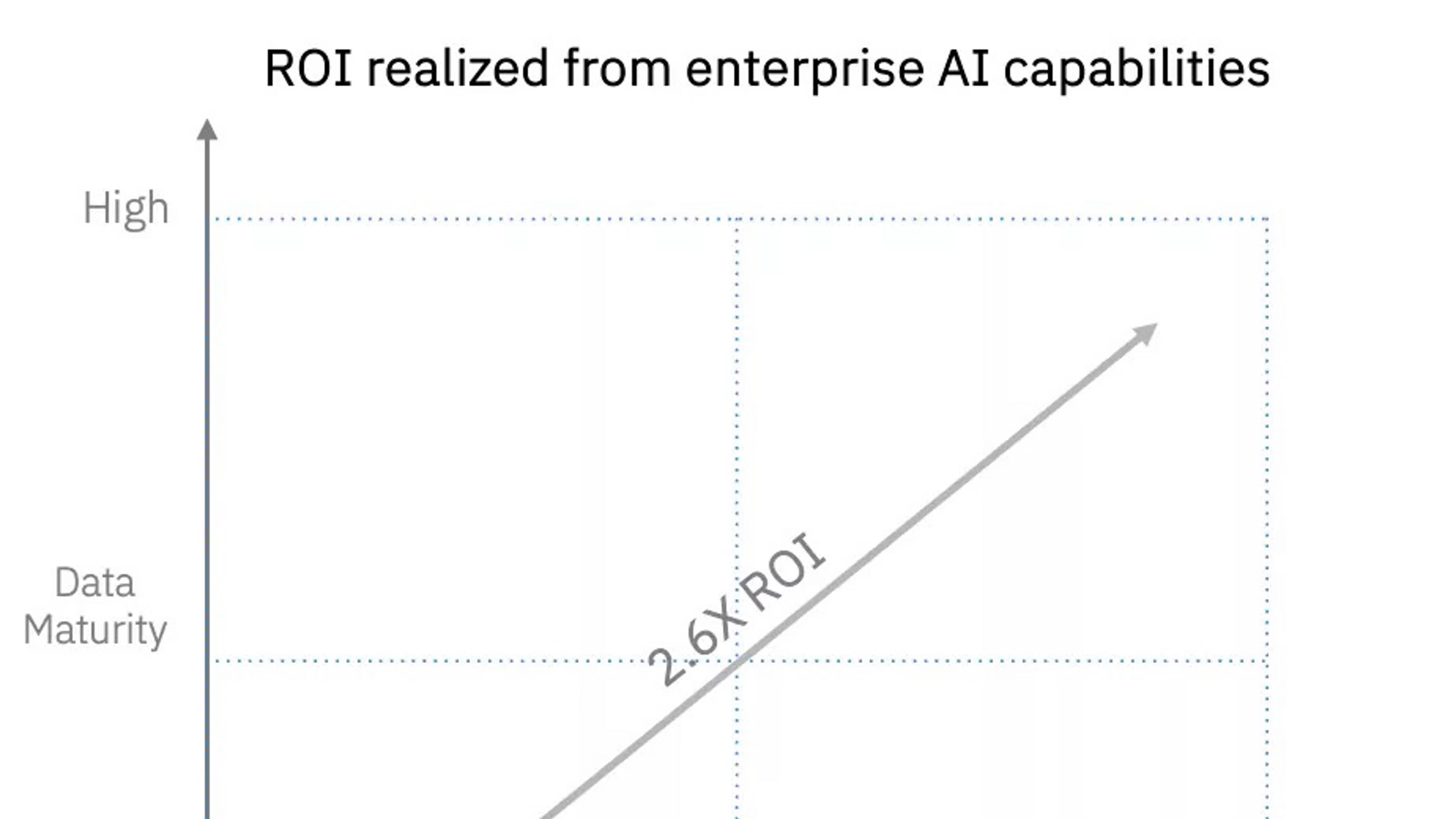

Despite record spending on enterprise AI solutions, most organisations are still struggling to translate pilots into measurable outcomes. Investments are rising, yet returns remain ambiguous, a pattern now widely recognised as the AI-ROI paradox. Companies are deploying more models, standing up more tools, and experimenting with more generative AI capabilities, but very few are capturing sustained business value.

The real issue is that what enterprise AI is supposed to deliver (scalability, reliability, and impact across the value chain) rarely aligns with the underlying data and operational foundations required to make that possible.

Most enterprises understand AI's promise, but simply adopting it isn't enough. The value only emerges when AI is built on consistent, governed, and deeply integrated data.

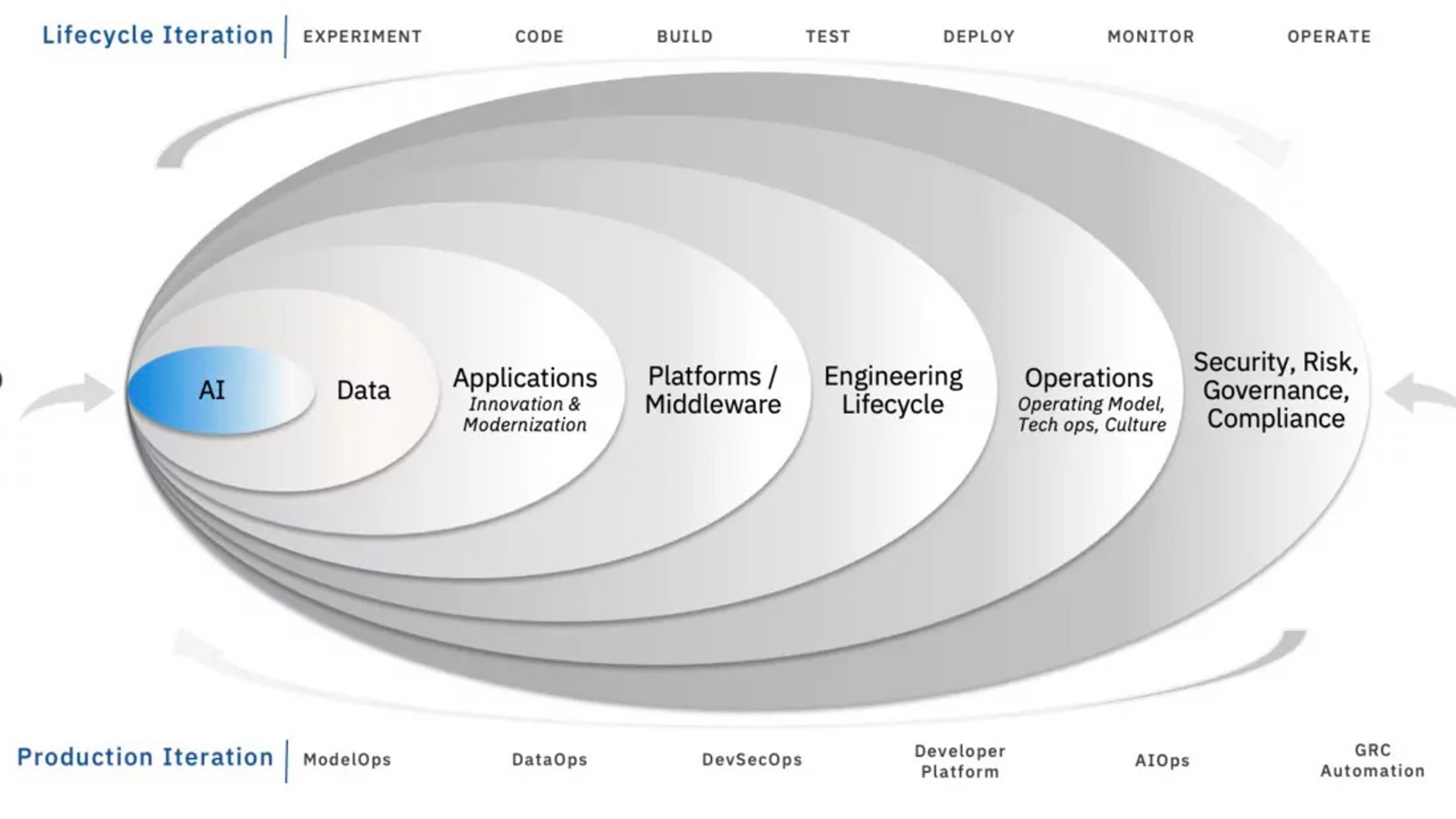

Enterprise AI integrates intelligence across business domains, workflows, and operations. It connects to the systems that run the organisation and influences decisions at scale.

Enterprise AI solutions have a few non-negotiable characteristics. They:

▪️ must scale across multiple use cases,

▪️ plug directly into enterprise data, and

▪️ operate with strong governance, security, and role-based access.

These solutions need to integrate with cross-functional workflows rather than sit in silos. And they must be reliable enough to support business-critical decisions, not just experiments.

Related Read: Learn more about domain based templates as a new approach to AI

Enterprises invest in AI for real, measurable outcomes. The north star goals of the business are predictable: reduced costs, increased revenue, improved productivity, and accelerated time-to-insight. These benefits should be quantifiable.

While these goals seem straightforward, most enterprises fail to achieve them. Across leading studies on enterprise AI ROI, a consistent pattern emerges. Investment keeps rising, but clear returns remain elusive, because value doesn't materialise in a clean, linear way.

AI is in no way a cheap experiment. Compute cost alone can overwhelm budgets: training, fine-tuning, and running models at scale require sustained GPU capacity, elastic infrastructure, and repeated experimentation cycles that burn budget long before value appears.

Even when models reach production, inference costs grow non-linearly as usage increases, turning every prediction into an operational expense. Without clear ROI guardrails, enterprises end up scaling cost faster than impact, mistaking technical progress for business value.
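An ROI guardrail of the kind mentioned above can be as simple as checking whether serving cost is growing faster than the value it produces. The sketch below is illustrative only: the `MonthlySnapshot` fields, the sample figures, and the "flag when cost growth exceeds value growth" rule are assumptions made for this example, not a method prescribed here.

```python
from dataclasses import dataclass


@dataclass
class MonthlySnapshot:
    """Hypothetical monthly figures for a single AI use case."""
    month: str
    inference_cost: float   # total spend on serving predictions
    business_value: float   # value attributed to the use case


def growth(previous: float, current: float) -> float:
    """Relative growth from previous to current (0.25 means +25%)."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    return (current - previous) / previous


def cost_outpaces_value(prev: MonthlySnapshot, curr: MonthlySnapshot) -> bool:
    """Return True when cost grew faster than value between two snapshots."""
    cost_growth = growth(prev.inference_cost, curr.inference_cost)
    value_growth = growth(prev.business_value, curr.business_value)
    return cost_growth > value_growth


# Made-up numbers, purely for illustration.
january = MonthlySnapshot("2025-01", inference_cost=10_000, business_value=20_000)
february = MonthlySnapshot("2025-02", inference_cost=15_000, business_value=22_000)

flagged = cost_outpaces_value(january, february)
print(flagged)
```

In this made-up case, cost grows by 50% while value grows by 10%, so the use case is flagged for review before it is scaled further.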

What is the 30% rule in AI?

The 30% rule is an informal enterprise AI heuristic that shows up in ROI conversations: only about 30% of AI initiatives typically make it past pilots into production or deliver measurable business value. The rest stall due to data readiness gaps, rising compute and infra costs, unclear ownership, or use cases that were never tied to economic outcomes. The rule isn’t a hard statistic—it’s a cautionary signal. It highlights why successful teams define ROI early, constrain scope, and treat AI as a product with value, adoption, and cost-to-serve targets, rather than as an open-ended experiment.

An organisation can deploy dozens of models, but if decision-makers aren't using them, the effort is futile. Assessing the real value, or ROI, lets enterprises track whether AI actually changes workflows, reduces cycle times, improves accuracy, or increases revenue.

It shifts conversations from model performance to business performance.

Leaving ROI unmeasured leads to duplicate models, shadow AI tools, unnecessary infra costs, and low-trust usage. Measuring ROI surfaces where AI is creating more complexity than value, so teams stop scaling inefficiency.

AI competes with new hires, new GTM motions, modernisation projects, automation, R&D, and marketing budgets. ROI gives AI the financial credibility to pass CFO scrutiny and stay on the strategic agenda.

Measuring AI ROI breaks down primarily because enterprises treat AI as a model problem instead of a product and platform problem.

Data used for AI is fragmented, inconsistently defined, and owned by multiple teams, making it impossible to establish a stable baseline.

In most scenarios, every AI initiative ends up rebuilding its own datasets, features, and business logic. Metrics drift. Assumptions change. What worked in a pilot looks different in production. As a result, ROI becomes a narrative rather than a measurement: hard to compare across teams, and impossible to aggregate at an enterprise level.

The second considerable challenge in AI projects is governance.

Most organisations try to measure AI results without knowing which data was used, how it evolved, or what policies were applied. Lineage, access controls, and quality checks (if they exist at all) aren't embedded in the workflow.

The cost of fixing data issues, handling compliance reviews, or reworking pipelines never shows up in AI ROI calculations, even though it directly erodes value. Without productised data, clear ownership, and platform-level observability, it is difficult to trace AI performance back to data readiness or operational effort. The value is there, but the system to measure it isn't.

Related read: Learn more about AI-ready data here.

Enterprises measure AI ROI through a set of KPIs. These metrics pinpoint how AI for enterprise is actually improving efficiency, reducing cost, driving revenue, or strengthening resilience, not just running a model in production.

The most crucial metrics aim to measure elements like:

Dive into a detailed breakdown of a Technical Assessment for AI-Ready Data ↗️

In most cases, AI ROI does not fail because of insufficient investment, but because value creation is structurally misaligned with how AI is deployed and measured.

First, the gap between data and business context needs to be closed, so that data assets are used to their fullest and AI is onboarded effectively.

We often come across the ‘AI ROI Paradox’ when organisations scale experimentation faster than they scale their operating discipline. AI ROI is better realised when AI is embedded into the enterprise operating model rather than layered on top of existing processes without a proper infrastructure fit. Returns materialise most when AI is tightly connected to core data, decision flows, and business metrics.

The following are some fundamentals to achieving higher AI ROI:

When enterprises run on fragmented, inconsistent, and poorly governed data, AI returns silently erode. The effort spent on fixing data issues, reworking pipelines, or handling compliance delays directly impacts ROI, even though it is rarely accounted for.

Integrating AI capabilities across the business is a practical lever for attaining good AI ROI, as this influences core products, services, and internal operations consistently rather than in fragmented pockets of effort.

For an enterprise aiming to improve its AI ROI, it is important to maximise productivity and operational efficiency by using AI to automate routine work, extend capabilities, and reduce costs in ways that compound over time. It is equally important to use AI to inform better decisions by ensuring models are fed the right data and tied to measurable business outcomes, rather than simply generating output for its own sake.

By this point, a pattern is clear: AI ROI does not break at the model layer, and it rarely breaks at the use-case layer.

AI outcomes are siloed and broken when the initiatives depend on bespoke data pipelines, inconsistent definitions, and invisible operational effort. When AI outcomes cannot be repeated, compared, or extended, returns remain fragile.

By abstracting low-level infrastructure concerns, a Data Developer Platform allows teams to focus on building and iterating AI and ML applications, increasing the likelihood that AI efforts translate into repeatable business outcomes rather than isolated experiments.

The core emphasis of DDPs is on platform philosophy, composability, and scale. The unified platform's primitives support analytics and data sharing, and AI/ML workloads and capabilities built once can be reused across multiple AI applications, reducing duplication and accelerating downstream innovation. Because data is turned into reusable Data Products, teams do not have to recreate the same plumbing. The organisation therefore spends less on maintenance and duplication, which lowers the total cost of ownership.

DDPs enable developers, reduce cognitive load, and standardise how data applications are built. This shortens the time required to operationalise AI by providing a consistent, developer-friendly foundation on which AI and data applications can be built and scaled.

The point here is not a specific ROI number. It is time-to-value enablement.

By shifting the centre of gravity from a pipeline-first environment to productising enterprise data, a DDP enables teams to work with ready-to-use, governed, versioned Data Products that give AI models the context, quality, and consistency they need to perform reliably.

Making AI value visible has to be a design choice. AI is forcing organisations to rethink what counts as return. Traditional ROI models are narrow and often assume immediate, purely financial outcomes, an assumption that rarely holds for AI. Gartner predicts that by 2028, more than half of enterprises building AI models from scratch will abandon them due to cost, complexity, and accumulated technical debt.

This makes the build-vs-buy decision critical, not just for execution but also because it determines whether AI initiatives remain isolated efforts or become part of a system that can sustain learning and improvement.

A Data Developer Platform supports this decision by creating the conditions under which AI value can be realised, regardless of whether AI is built in-house or purchased.

A Data Developer Platform delivers governed, versioned data products with embedded semantics, lineage, and quality signals.

In this use case, AI becomes more repeatable and auditable.

A DDP lets teams focus on model logic and experimentation instead of data plumbing, because the AI systems inherit consistent contracts, policies, and standards by default. Usage patterns, dependencies, and operational behaviour are therefore surfaced through the platform early on rather than reconstructed later.
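To make "governed, versioned data products with embedded semantics, lineage, and quality signals" more tangible, here is a minimal sketch of what such a descriptor could look like. Every name in it (`DataProduct`, its fields, `is_ai_ready`, the 0.95 threshold) is hypothetical and chosen for illustration; it does not represent the API of any particular Data Developer Platform.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical descriptor for a governed, versioned data product."""
    name: str
    version: str
    owner: str
    semantics: dict[str, str]      # column name -> business meaning
    upstream: tuple[str, ...]      # lineage: sources this product is built from
    quality: dict[str, float]      # quality signal -> score between 0 and 1


def is_ai_ready(product: DataProduct, min_quality: float = 0.95) -> bool:
    """Illustrative readiness check: semantics, lineage, and quality all present."""
    has_semantics = bool(product.semantics)
    has_lineage = bool(product.upstream)
    quality_ok = bool(product.quality) and all(
        score >= min_quality for score in product.quality.values()
    )
    return has_semantics and has_lineage and quality_ok


# Example values are invented for this sketch.
orders = DataProduct(
    name="customer_orders",
    version="1.2.0",
    owner="sales-data-team",
    semantics={"order_value": "Order total in EUR, net of tax"},
    upstream=("crm.orders_raw",),
    quality={"completeness": 0.99, "freshness": 0.97},
)
draft = DataProduct(
    name="customer_orders_draft",
    version="0.1.0",
    owner="sales-data-team",
    semantics={},
    upstream=(),
    quality={"completeness": 0.80},
)

print(is_ai_ready(orders), is_ai_ready(draft))
```

A model that consumes `orders` can record the product name and version alongside its predictions, which is one simple way outcomes become repeatable and auditable.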

Buying AI tools or copilots often weakens visibility into how value is actually created. External systems tend to operate as black boxes when they are connected to fragmented or inconsistently governed data environments. Vendor lock-in adds a further risk.

A Data Developer Platform allows purchased AI systems to integrate into a unified, governed data layer where access, lineage, quality, and usage conventions are already defined. This allows organisations to delineate the data that external AI systems consume, how they interact with core data products, and where operational dependencies emerge.

With fragmented and siloed data, missing context, and governance forced into the process later, AI remains trapped in pilots and narratives rather than outcomes. Measuring AI ROI is therefore a design discipline.

The real difference comes when the right platforms and infrastructure are deployed for the AI solutions and, more importantly, when businesses carefully delineate the real need for AI rather than following the ‘trend.’

In 2026, the leading AI projects and capabilities will define the most optimised path from data to decision to measurable value.

An AI ROI calculator refers to the capability that estimates the business value of an AI initiative by comparing expected benefits, cost savings, revenue impact, productivity gains, and risk reduction against total costs, including data, infrastructure, models, and governance.
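As a rough illustration of that comparison, the sketch below applies the conventional formula ROI = (total benefits − total costs) / total costs to the benefit and cost categories named above. The class names, field names, and sample figures are assumptions for this example; a real calculator would also need to handle time horizons, discounting, and attribution.

```python
from dataclasses import dataclass


@dataclass
class Benefits:
    """Expected benefits of an AI initiative (hypothetical fields)."""
    cost_savings: float
    revenue_impact: float
    productivity_gains: float
    risk_reduction: float

    def total(self) -> float:
        return (
            self.cost_savings
            + self.revenue_impact
            + self.productivity_gains
            + self.risk_reduction
        )


@dataclass
class Costs:
    """Total costs of an AI initiative (hypothetical fields)."""
    data: float
    infrastructure: float
    models: float
    governance: float

    def total(self) -> float:
        return self.data + self.infrastructure + self.models + self.governance


def roi(benefits: Benefits, costs: Costs) -> float:
    """Return ROI as a fraction: 0.25 means a 25% return on total cost."""
    total_cost = costs.total()
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return (benefits.total() - total_cost) / total_cost


# Made-up figures, purely for illustration.
benefits = Benefits(
    cost_savings=200_000,
    revenue_impact=150_000,
    productivity_gains=100_000,
    risk_reduction=50_000,
)
costs = Costs(data=100_000, infrastructure=150_000, models=100_000, governance=50_000)

result = roi(benefits, costs)
print(f"{result:.0%}")
```

Listing data and governance as explicit cost lines matters here, since those are the costs that most often go uncounted.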

Generative AI primarily focuses on generating content or outputs, including text, code, images, or insights, based on patterns learned from data. GenAI solutions do respond to prompts but typically operate within a single interaction or task.

Agentic AI, by contrast, uses generative models as a component but adds autonomy, memory, and decision logic to plan actions, call tools, interact with systems, and execute workflows over time.

The 30% rule is also used as a guideline suggesting that organisations begin AI automation with roughly one-third of a process, focused on repetitive tasks, to secure measurable value quickly while keeping humans in the loop for complex decisions.

Ritwika is part of the Product Advocacy team at Modern, driving awareness around product thinking for data and advocating design paradigms such as data products, data mesh, and data developer platforms.
