Download Modern Data Survey Report

Oops! Something went wrong while submitting the form.

Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community

Latest reads...

TABLE OF CONTENT

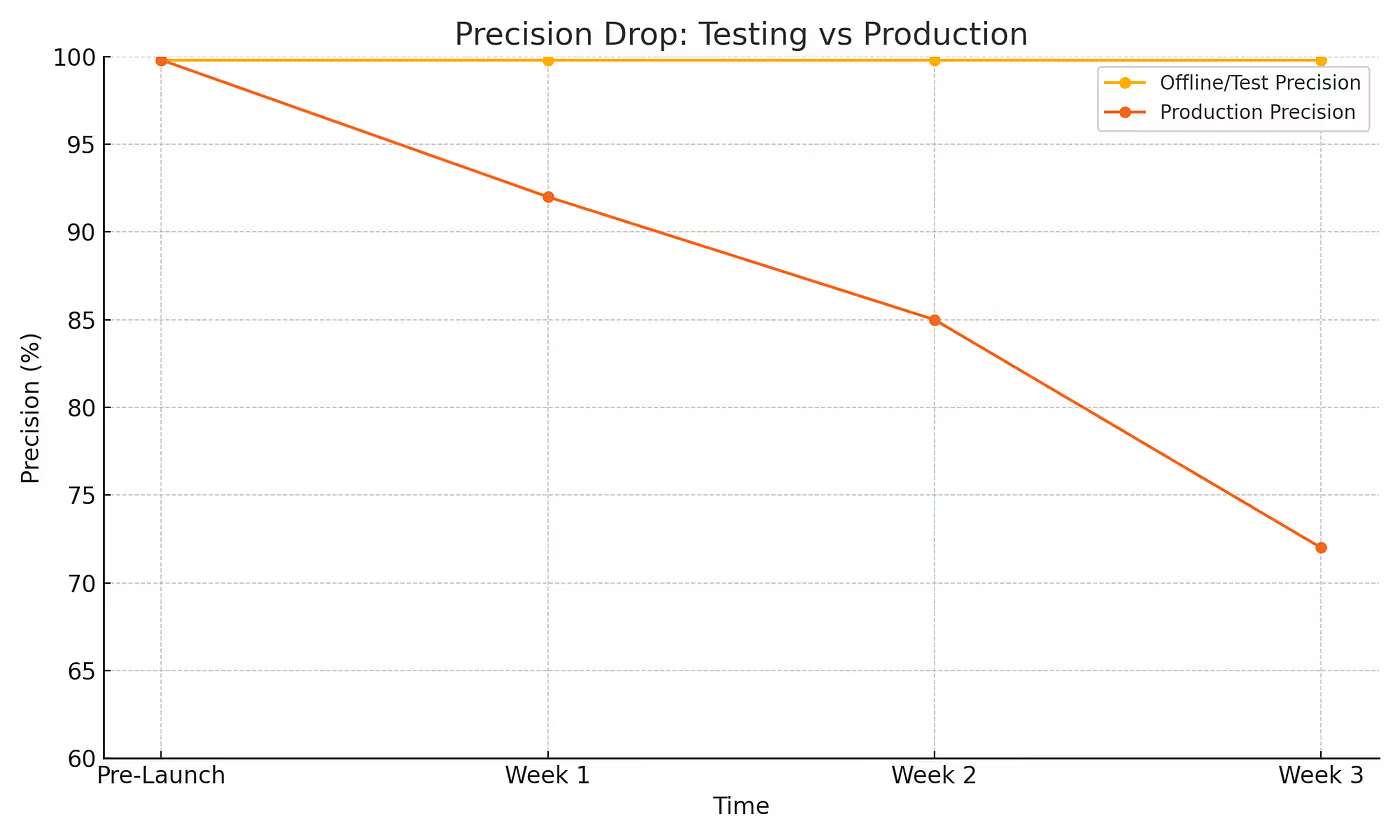

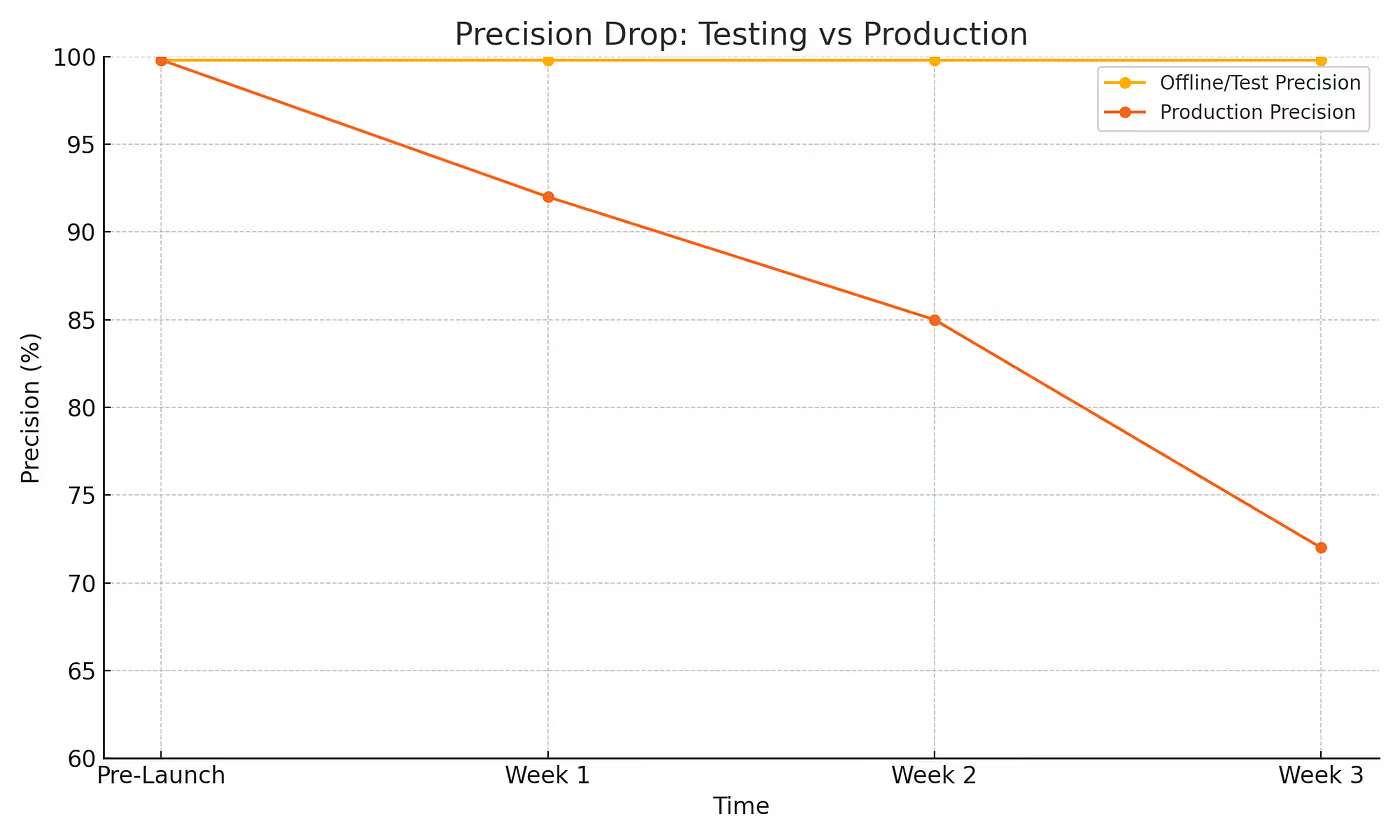

Remember the accuracy and sharp insights delivered by this new AI model in the lab? It had the potential to evolve your business in an unparalleled way.

Fast forward a few weeks in production, and the story might have drifted away. Suddenly, the performance metric has dropped drastically, predictions are obsolete, and the model hallucinates. Sounds similar?

This is Model Decay, a common ailment that’s plaguing AI deployments.

The real culprit? Data.

Let's first agree on this: even the best algorithms show how good the data was that powered them. Many people often overlook the connection between data quality for AI and sustained AI model performance. But what if you can proactively address this issue across applications?

We are discussing production-ready data templates. These templates ensure that models get high-quality inputs for every need. This includes churn prediction, estimating lifetime value, and understanding Natural Language.

The best part is that these reusable instructions can help stop Model Decay. They can also unlock the true potential of AI investments. Productised templates have proved to be one of the key tools for data management, and we'll soon see how.

Model decay is when an AI or machine learning model performs much worse over time. This drop in performance is significant and long-lasting.

Think of a sharp knife. When new, the cuts are clean, smooth, and require less strength to operate. Over time, it loses its sharpness and may need more strength to use. The same is true for Model Decay. Slow but persistent loss in performance.

Before we confuse it with Model Drift,

Model Drift is a more gradual shift in the statistical properties of the target variable. Think of it like drift is a slow leak, while decay is a more sudden puncture.

The culprit? Bad data. Errors, inconsistencies, missing or outdated information can quickly hamper the performance of our Lab-hero when put to production.

Missing signals that built the model are not always available now. This can often cause quick decline.

The data distribution can cause a gap between what the model learned and the reality it faces now. This brings us to another critical concept: Data Readiness.

Data Readiness refers to the state of data. Is it in the right shape? Is it good enough to power your model for performing optimally with the right signals?

Neglecting the preparation of data for training can cause problems. It can also affect how the data appears in the serving environment. This may lead to model decay. People often call this training-serving skew.

📝 Related Read:

AI-Ready Data: A Technical Assessment

Let’s understand what is production-grade data first. We organise production-ready data to ensure it is correct and reliable. It meets the model's needs by being complete, up-to-date, and easy to access.

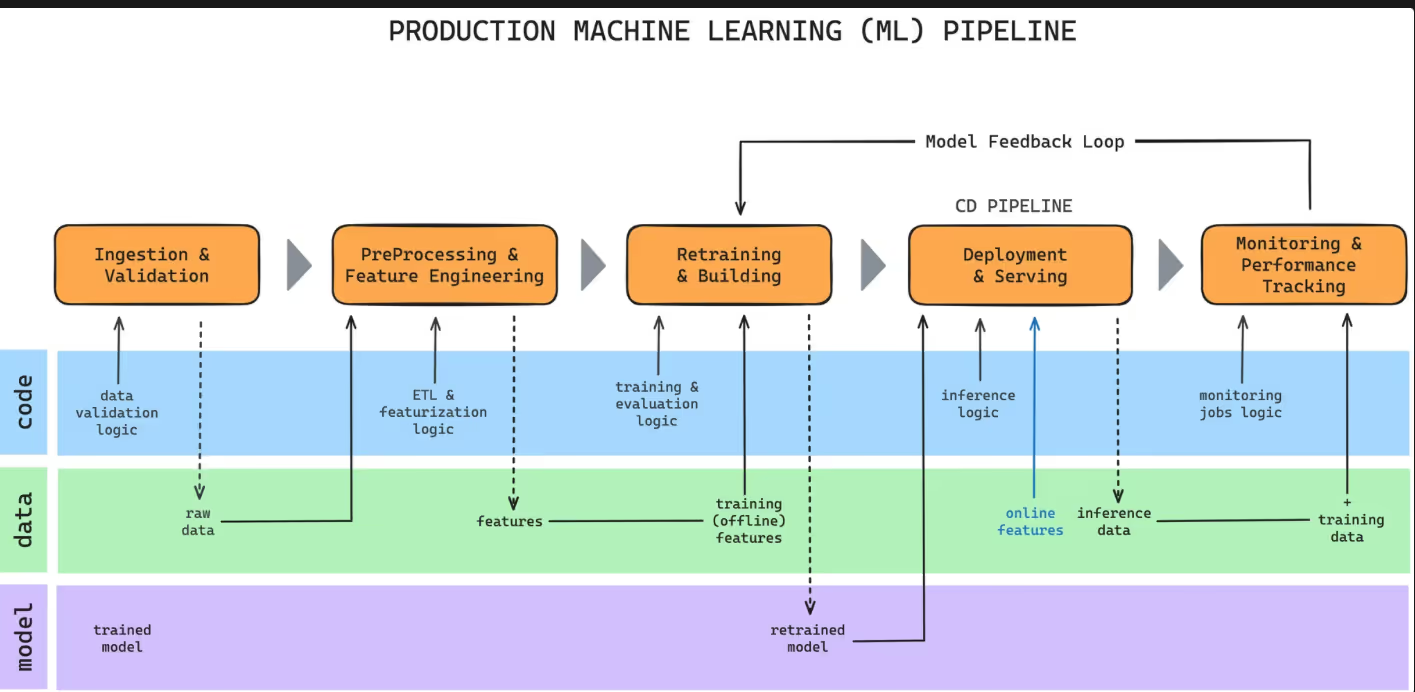

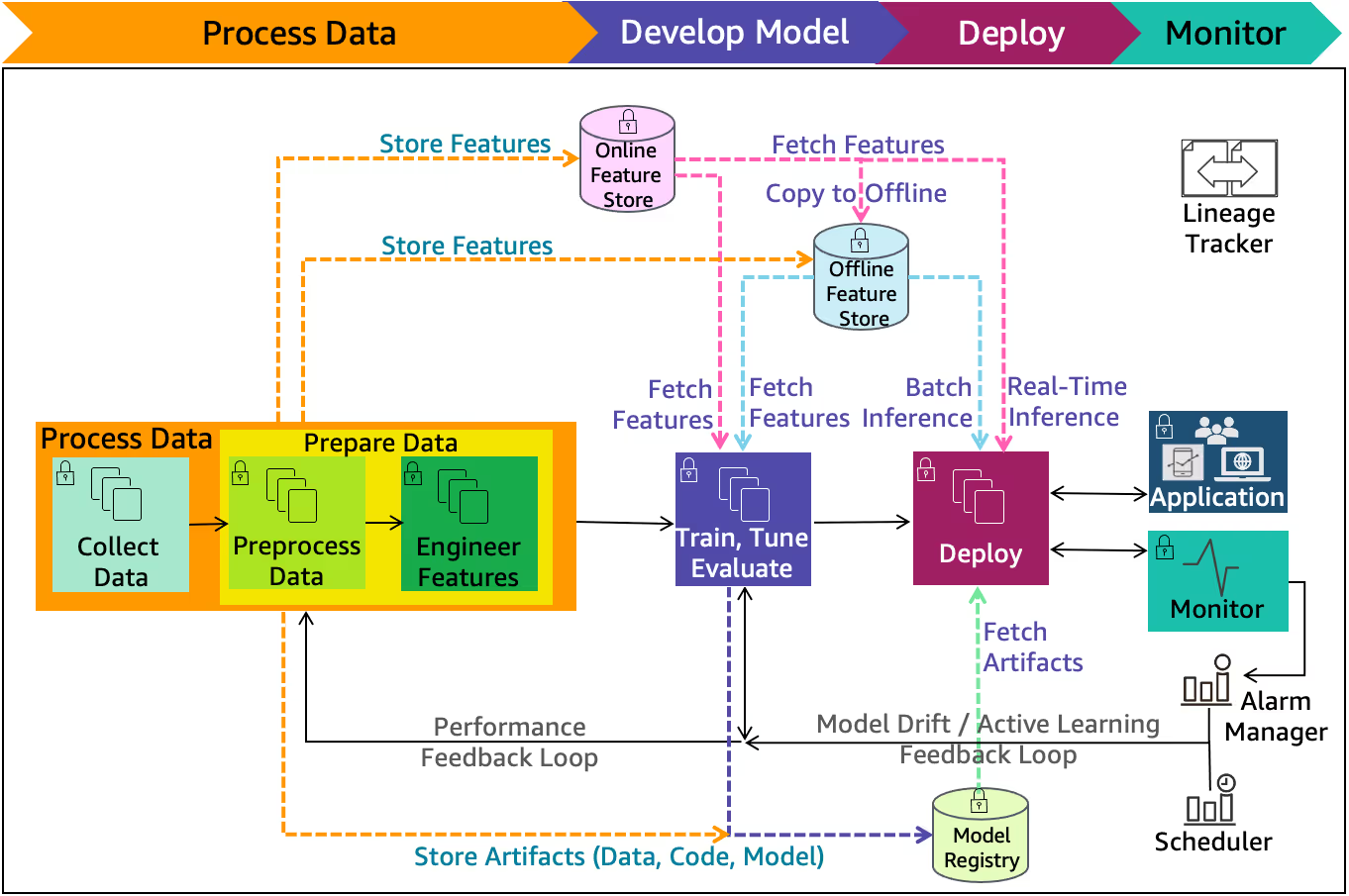

This consistently requires adaptable data pipelines for machine learning. These automated systems extract, transform, and load data. They prepare the data for training and inference. This ensures a steady flow of quality input.

People call the traditional way of making hand-stitched data bespoke. In this method, the data science team creates a custom solution for each project. This approach also contributes to Model Decay.

These solutions are brittle, difficult to maintain and tough to reuse, leading to inconsistencies and increased risk of errors. In contrast, adopting AI pipeline best practices with modular, reusable templates offers a far more sustainable and effective solution.

These templates are like ready-made plans for data preparation. They keep things consistent, speed up development, and reduce the risk of errors or losing important data signals.

Instead of regular data processing, think pre-engineered blueprints to prepare data for common and high-value use case templates? This is revolutionary. Instead of starting from scratch, using a template that already has all the essential features, data transformations, and structures needed for a specific domain saves significant engineering time and effort.

These templates are built upon the established best practices and a deep understanding of the data patterns relevant to a particular business problem of a specific domain. These templates accelerate building data products by providing a Standard Operating Procedure (SOP) for feature engineering templates, cutting down repetitive tasks and pipeline detangling.

Building upon these templates accelerates the development cycle while ensuring consistency in how data is prepared across different projects within the same domain. Some examples of such templates include: churn prediction, LTV modelling, and NLP entity extraction, each tailored to the unique data requirements of these applications.

It is important to note that production-ready data templates are facilitated on top of data products. Data products include independent units of data that serve specific use cases and domains to provide high-quality and trusted data. Data product managers or domain analysts are able to strategically create multiple templates on top of one data product, depending on the type of requirements.

Learn more about productising data as templates on Where Data Becomes Product ↗️

Let's look at how domain-based templates can make a difference:

Instead of manually identifying and engineering features, a data template for the churn prediction use case will include pre-defined steps to extract features. Features related to user activity, engagement, and demographics, like time-series behavioural data (e.g., frequency of app use, features accessed), sentiment analysis of user reviews, and key account information would be auto-identified along with a standard customisable pipeline.

This would directly feed into the AI Agent or agents built on top of the customer churn model. Doing so ensures that vital signals aren't missed. It also reinforces the trust that the model is trained on a comprehensive and relevant feature set.

Estimating Lifetime Value (LTV) requires careful consideration of historical purchase data, customer demographics, browsing behaviour, and potentially even marketing interactions as data sources. LTV model data structure holds the potential to provide a standardised way to aggregate cohort-level historicals, incorporate relevant contextual data (e.g., seasonality and or promotions), and structure the data for various LTV modelling techniques. AI Agents work with this data to orchestrate the customer interaction map across their journey to improve LTV scores or trigger actions in operational systems.

Customer service can have applications such as automated ticket tagging and sentiment analysis of customer inquiries. These templates come with a pre-defined set of steps, such as tokenisation, handling stop words, extracting named entities, and calculating the sentiment scores. These templates ensure that the NLP model consistently receives processed and relevant text data, leading to accurate entity recognition and sentiment classification in the customer support workflows.

These examples highlight the speed to value, enhanced consistency, and significantly lower risk of data signal loss that AI use case templates provide.

📝 Related Read:

Speed-to-Value Funnel: Data Products and Platforms, Where to Close the Gaps

Moving AI models from lab to robust production deployments is a significant hurdle. Reusable data templates streamline this process by providing well-defined, tested blueprints for data preparation. This efficiency in data handling speeds up the task when operationalising AI initiatives by significantly reducing the time and resources needed for deployment.

Data templates are scaled and maintained with an evolution-friendly, machine-friendly, and adaptable data infrastructure for AI. Your enterprise data platform underneath is the wind to the sails of templates working at par with AI or Agentic requirements, where the platform is coded defensively enough to not let dependencies fail fatally.

📝Related Read:

AI Needs Architecture: A Platform That Remembers

A robust MLOps pipeline incorporating Feature Stores and feedback mechanisms, crucial for preventing model decay and maintaining high-performing AI. | Source: Machine learning reference architecture

Additionally, these templates allow better data monitoring and data engineering practices when it comes to maintenance. With the expected data structure and features defined, setting up alerts for data anomalies or missing values indicating potential model degradation becomes easier. Template versioning allows you to track changes and ensure that your models are consistently trained on the appropriate data preparation logic as your data evolves.

At a platform level, a library of well-documented reusable data templates empowers different teams across the organisation to build and deploy AI applications with greater confidence. A modern data platform is the backbone and unseen backstage director, enabling data citizens to operate on a solid foundation of machine learning data readiness.

📝 Related Read:

How to Build Reusable Features for Machine Learning and AI with Data Products

Before wrapping up, let's recap.

Model Decay is a persistent and real challenge. However, the success of an AI effort is directly proportional to the quality and readiness of the data backing the system. The need for sophisticated algorithms is undeniable, but it too leans on clean inputs and information. Great models use information very well, but for that, the information is essential.

Template-first data mindset is the way to go. Reusable data templates that are custom-built for specific domains, use cases, and organisations ensure production-ready pipelines. Such data templates are the interface between data platforms or infrastructure and the consumption layer where AI apps access the production-ready data. This also minimises the risk of data-induced model failure and improves AI accuracy with better data.

Q1. What is the difference between model decay and model drift?

Though these may sound similar, Model Decay and Model Drift are distinct. While Model Decay is a sudden and persistent drop in performance due to issues with bad data or missing signals, often caused by fundamental problems with data quality, Model Drift is a more gradual shift in the underlying data patterns over time. Think of decay as a sudden failure and drift as a gradual, natural evolution. Model Decay is unwelcome and disruptive and requires preventive methods, while model drift is a natural event that is inevitable and requires adaptation.

Q2. How do reusable data templates save time?

Data templates provide pre-engineered blueprints for everyday machine learning tasks. Instead of building a new data preparation pipeline from scratch every time, teams can leverage standardised templates. This not only accelerates the development cycle but also reduces errors and ensures consistent data readiness from prototype to production.

Q3. Is a data template the same as a feature store?

No, they are different but complementary. A feature store is a centralised repository for storing and serving curated features for AI models. A data template, on the other hand, is a reusable process or pipeline for creating those features in the first place. You would use a template to build features and then store them in a feature store.

.avif)

I am a passionate & pragmatic leader, architect & engineer. I use iterative architecture & lean methodologies to deliver software products with measurable value, aligned with goals & objectives, on time & with balanced technical debt.

I am a passionate & pragmatic leader, architect & engineer. I use iterative architecture & lean methodologies to deliver software products with measurable value, aligned with goals & objectives, on time & with balanced technical debt.

Find more community resources

Find all things data products, be it strategy, implementation, or a directory of top data product experts & their insights to learn from.

Connect with the minds shaping the future of data. Modern Data 101 is your gateway to share ideas and build relationships that drive innovation.

Showcase your expertise and stand out in a community of like-minded professionals. Share your journey, insights, and solutions with peers and industry leaders.