540+ Respondents,

64 Countries,

29 Industries

WHAT’S INSIDE

- About the Report

- Executive Summary: The Data Activation Gap

- Business Stakes

- AI-Readiness

- Day-to-Day Reality of Data Users

- The Discovery Challenge

- Collaboration and Dependence

- Platforms and Tools

- Convergence Today

- About The Modern Data Company & Modern Data 101

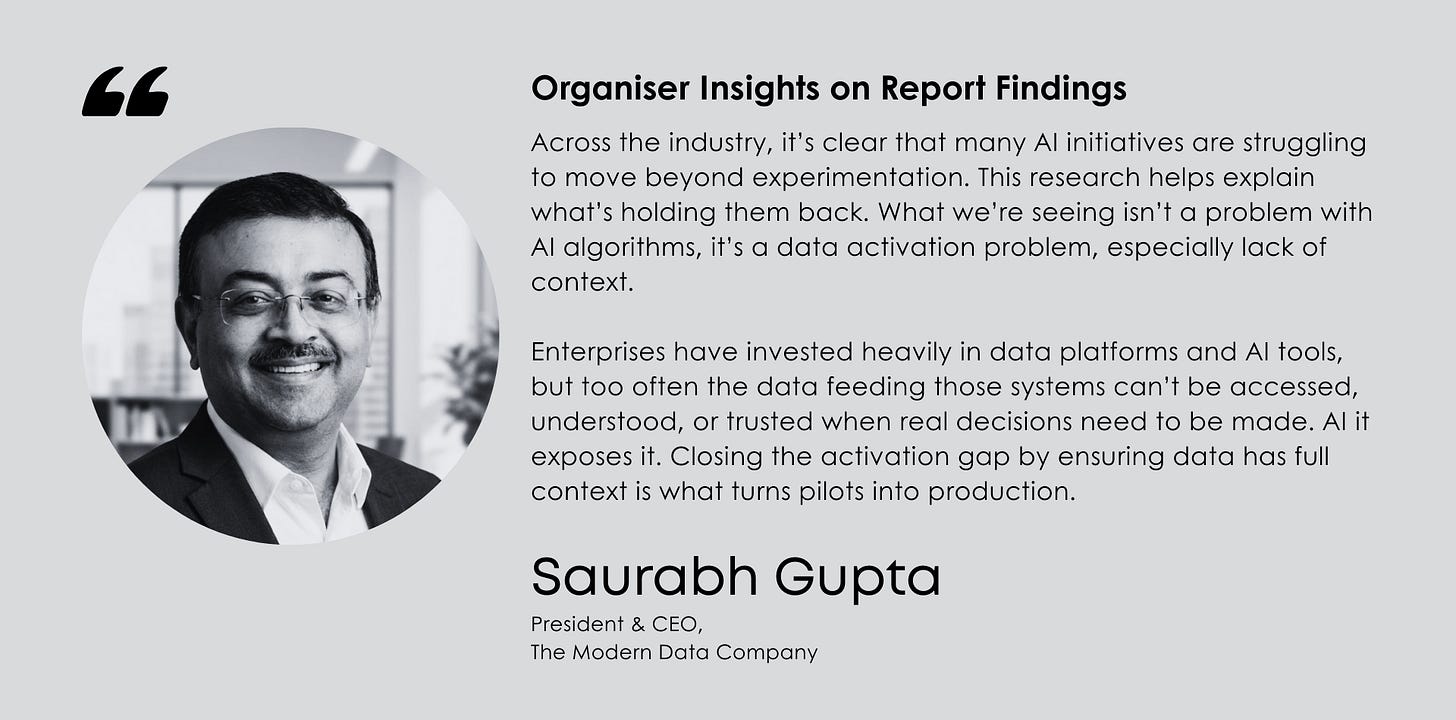

The Modern Data Report reveals that enterprise data is nowhere near AI-ready. AI cannot reason over data that humans themselves cannot rely on.

Through this survey, we are able to observe the precise reasons behind the current state of the data stack, and why so many data leaders, subject matter experts, and practitioners across industries report the same experience: despite years of investment in modern platforms, data still fails at the moment it is needed most.

What emerges is not a shortage of tools, ambition, or technical sophistication. It is a breakdown in activation. AI initiatives struggle to move beyond experimentation because the underlying data is difficult to find, hard to interpret, and only conditionally trusted. Humans have learned to compensate for these gaps through meetings, intuition, and manual checks. AI cannot.

About this Survey

The Modern Data Report 2026: The Data Activation Gap is our second annual survey of the Modern Data 101 community. This year, more than 540 members participated, spanning 64 countries and 29 industries, including but not limited to financial services, manufacturing, healthcare, retail, technology, and government. Respondents have over a decade of experience on average.

Key Findings

- Enterprises have modern data stacks, but their data still can’t be trusted or activated: nearly half can’t fully rely on their data for decisions, and most say it isn’t ready for AI.

- The real work of data teams is no longer analysis. It’s search, reconciliation, and validation: finding and confirming data consumes more time than actually using it.

- Context is fragmented across tools, breaking meaning, lineage, and shared definitions: data exists, but the knowledge required to interpret and act on it does not travel with it.

- AI adoption is blocked by weak data foundations, not model capability: without semantic layers, standardised metrics, and trust signals, AI cannot reliably trigger decisions or close feedback loops.

- Practitioners are aligned on the fix, which is convergence over complexity: unified access, embedded governance, shared semantics, and self-service are the foundations required to close the data activation gap.

AI: The Breakthrough that Became the Stress Test

Enterprises did not adopt AI to build better dashboards or generate clever insights. They adopted AI to compress time, to collapse the distance between data and action, between detection and response, between knowing and doing. AI was meant to turn data into motion, not interpretation.

What surprised many organisations is that AI didn’t accelerate decision-making but exposed how fragile the path to decisions actually was.

Despite significant investment, AI initiatives are stalling because everything upstream was never designed for machines to reason over.

The report makes this visible in uncomfortable ways.

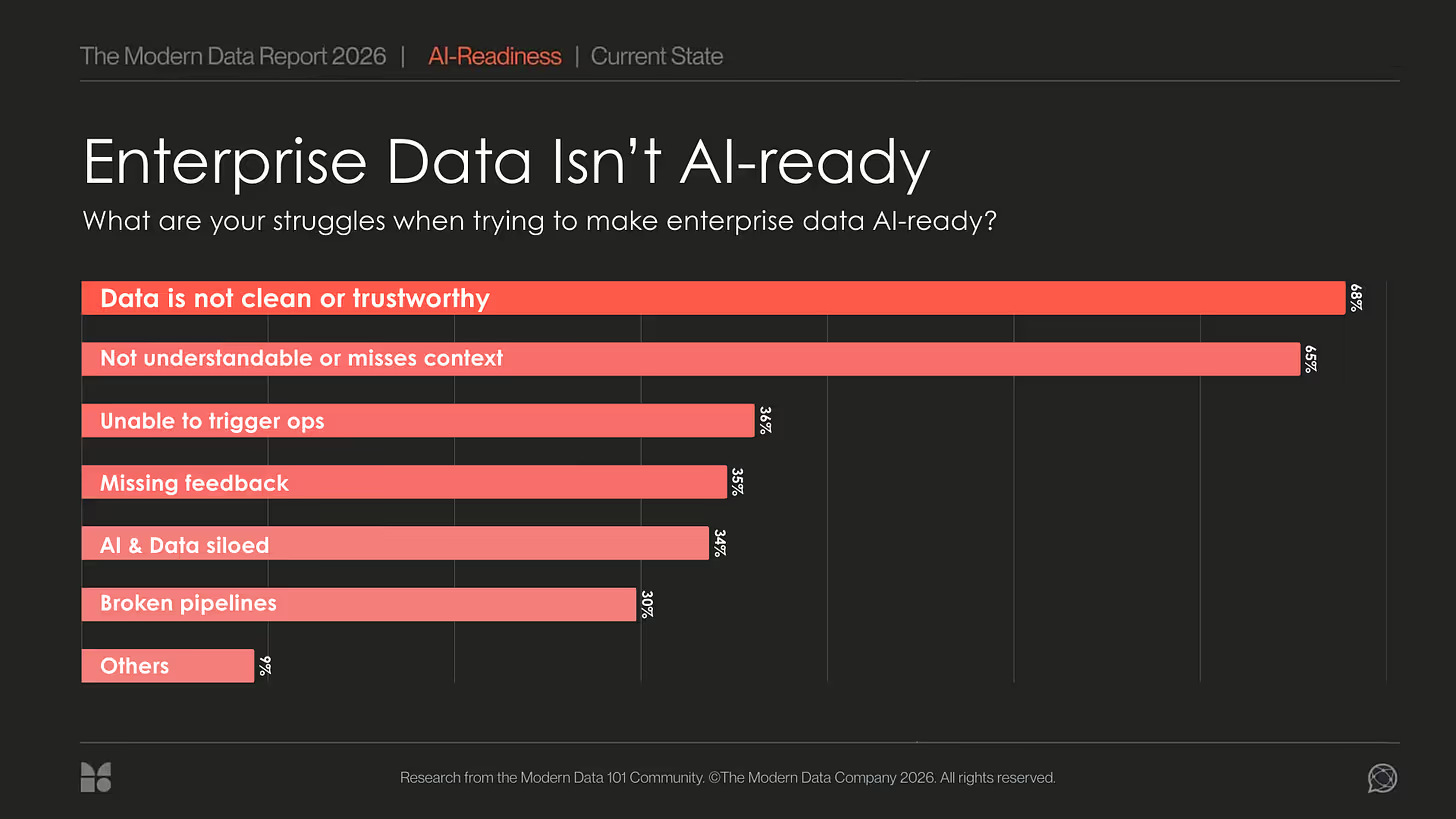

Almost 70% of respondents say their data isn’t clean or trustworthy enough for AI. 65% say their data lacks the clarity and business context AI needs to be useful.

Only 30% use AI for automated data preparation, even though data quality is universally acknowledged as a bottleneck. More than a third cannot trigger operational decisions using AI, and a similar share reports that their AI systems don’t meaningfully connect to their data systems at all.

For years, humans acted as the compensating layer. The system appeared to function because people absorbed its inconsistencies. AI removes that human buffer. AI doesn’t tolerate weak foundations and increasingly surfaces them.

AI Changes What “Data Readiness” Actually Means

For years, data readiness was defined by proximity to storage and presentation. If data was stored, modelled, and visualised, it was considered usable. Readiness meant that someone, usually an analyst, could find it, explain it, and make sense of it when needed.

In an AI-driven system, data doesn’t get the benefit of tribal knowledge. Models don’t browse teams channels, and agents don’t remember why Metric A is “more correct” than Metric B, as discussed on a call. AI doesn’t intuit which dashboard is trusted or which table is unofficial. It only knows what the system itself makes explicit.

That changes the bar for readiness entirely, both in how the data ends up being used and in who, or what, ends up consuming it.

Desired State

Data must be discoverable without insider context. It must be interpretable without explanation and trustworthy without manual validation. And most importantly, it must be actionable at machine speed instead of human pace. After all, that’s what we do AI for.

The report signals this shift clearly.

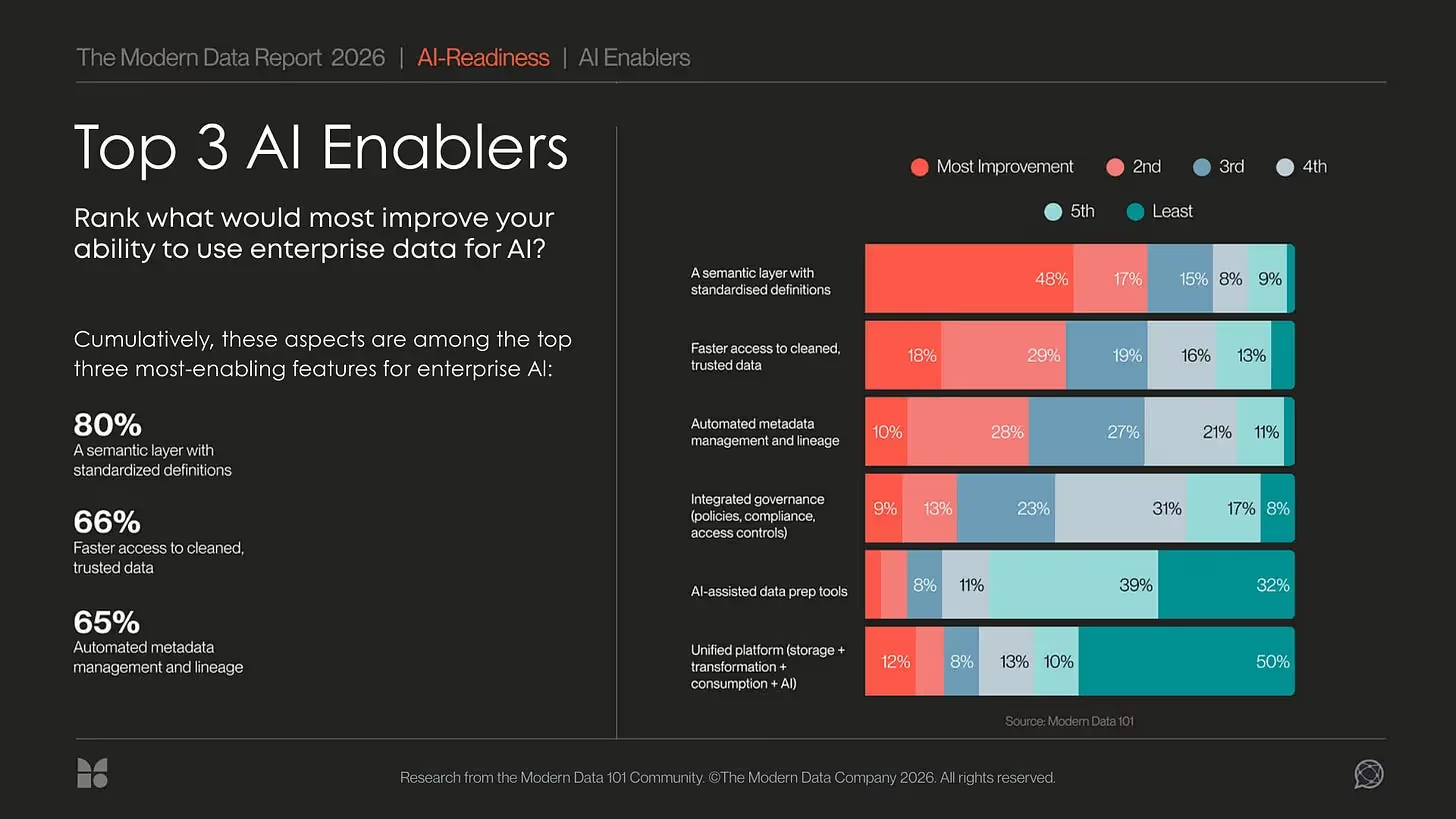

80% of respondents rank a semantic layer with standardized definitions as the most important enabler of AI: above AI tools themselves and above faster processing.

AI doesn’t reward more data or better models. It rewards systems that encode meaning, context, and trust directly into the data layer. Data readiness for AI, or AI-readiness, is about whether machines can reason without asking for help.
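To make "encoding meaning directly into the data layer" more concrete, here is a minimal sketch in Python of how a semantic layer might resolve every name a metric goes by to one standardized definition. It is an illustration only: `MetricDefinition`, `SemanticLayer`, their fields, and the example metric are hypothetical names chosen for this sketch, not part of the report or of any specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricDefinition:
    """One canonical, machine-readable definition of a metric (hypothetical schema)."""
    name: str
    description: str
    expression: str    # how the metric is computed
    source_table: str  # where the inputs live
    owner: str         # who is accountable for the definition


class SemanticLayer:
    """Hypothetical in-memory registry: every alias resolves to exactly one definition."""

    def __init__(self) -> None:
        self._definitions: dict[str, MetricDefinition] = {}
        self._aliases: dict[str, str] = {}

    def register(self, definition: MetricDefinition, aliases: tuple[str, ...] = ()) -> None:
        key = definition.name.lower()
        if key in self._definitions:
            # Refuse a second, competing definition of the same metric.
            raise ValueError(f"metric {definition.name!r} is already defined")
        self._definitions[key] = definition
        for alias in aliases:
            self._aliases[alias.lower()] = key

    def resolve(self, name: str) -> MetricDefinition:
        key = name.lower()
        key = self._aliases.get(key, key)
        if key not in self._definitions:
            # An agent gets an explicit failure instead of guessing a meaning.
            raise KeyError(f"no canonical definition for {name!r}")
        return self._definitions[key]


# Illustrative usage with made-up values.
layer = SemanticLayer()
layer.register(
    MetricDefinition(
        name="monthly_churn_rate",
        description="Share of customers active last month who cancelled this month",
        expression="cancelled_customers / active_customers_prev_month",
        source_table="analytics.customer_monthly",
        owner="growth-analytics",
    ),
    aliases=("churn", "customer churn"),
)
definition = layer.resolve("Churn")
```

The design choice worth noting is that an unknown or ambiguous name raises an error rather than returning a best guess, so a machine consumer never has to "ask for help" silently by inventing a definition.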

AI is Failing Exactly Where Humans Are Unable to Cope

Automation depends on certainty. If humans can’t reliably discover the right dataset, the right table, or the right metric, agents and models have no chance. AI doesn’t explore, infer intent, or guess which version is “the real one.” Discovery delays don’t merely slow analysis but prevent automation from starting at all.

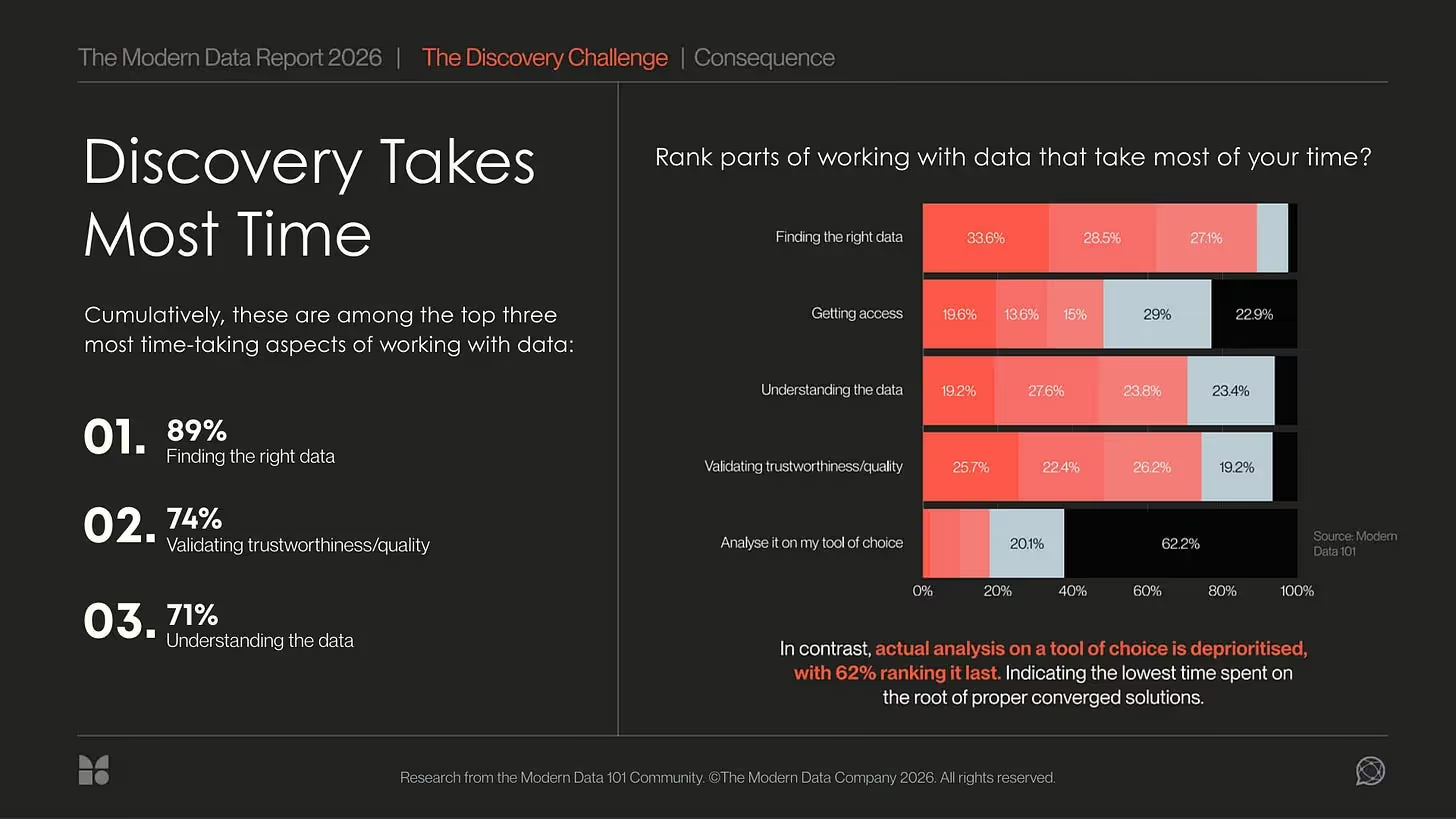

Most of the respondents suggest that discovery is where this gap first becomes visible. 89% of respondents say finding the right data is among their top three time drains.

Trusting the data and its quality is right behind, with 74% citing it among the tasks that consume most of their time. Understanding the data follows closely, with 71% ranking it in their top three, underscoring how missing context, definitions, and ownership slow progress even further.

For human users, this manifests as delay. For AI, misdirected discovery leads to failure modes or, worse, hallucinations.

Context Is Non-Negotiable

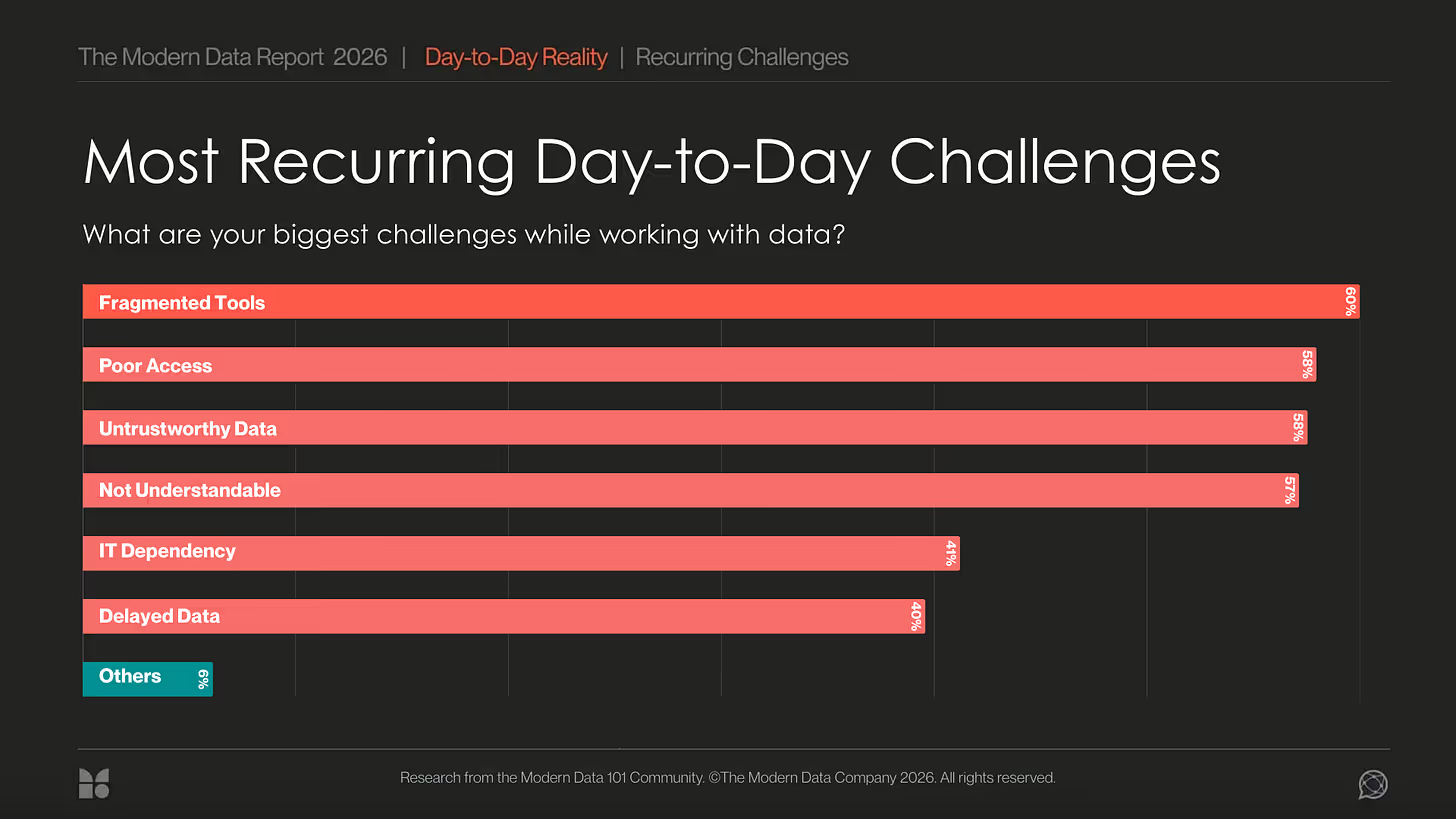

Data without context is fundamentally unusable for AI. Over half of practitioners, 57%, report struggling to interpret data simply because the necessary context is missing.

Lineage, the map of where data comes from and how it evolves, is absent for 53%, leaving both humans and AI blind. More than a third cite missing business context as the largest platform gap, and 35% cannot even close AI feedback loops because semantic layers are absent.

The findings are unavoidable: AI does not infer meaning the way humans do. It does not “understand.” It computes, executes, and propagates logic based strictly on the definitions provided. When those definitions are incomplete or ambiguous, AI does not hesitate, stumble, or pause. It makes decisions with full confidence, hallucinations included. Context has always been a prerequisite for humans, and it is even more so in the age of AI.

Conditional Trust Prevents Autonomous Action

Trust is not binary in modern data systems. Trust in data and business execution is conditional, negotiated, and fragile. Respondents say there is good reason for this: they cannot fully trust metrics even when the analysis is led by humans.

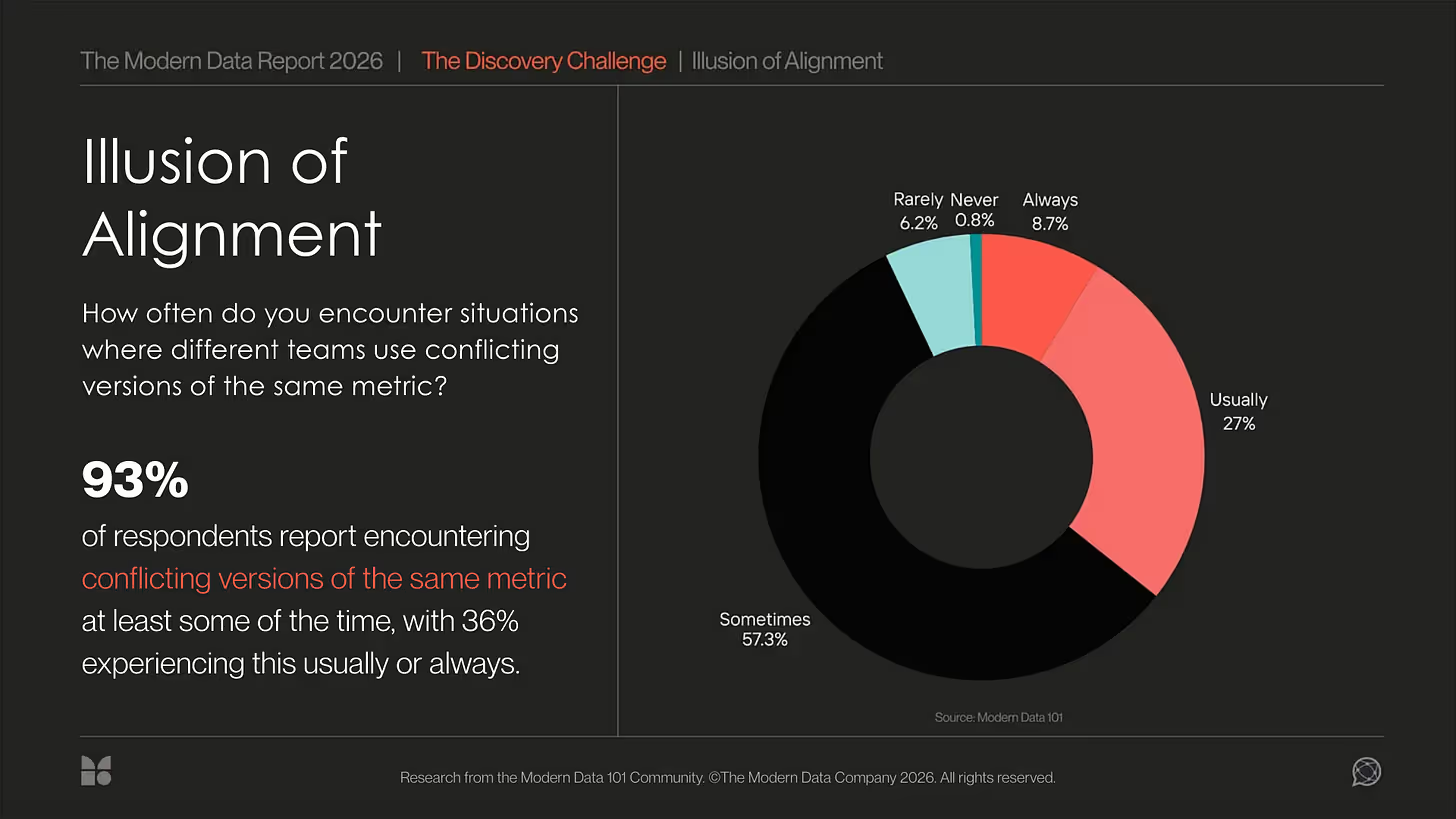

93% of respondents say they encounter conflicting metrics. Nearly half do not fully trust their own data, and 68% explicitly state that it is not trustworthy enough for AI (see figure 1).

These are not edge cases, but the majority’s vote. These are the dominant operating conditions under which AI is being asked to act.

Humans cope by improvising. They cross-check numbers across dashboards, ask colleagues which metric is “the real one,” and delay decisions until consensus, intuition, or authority fills the gap left by unreliable data. This is inefficient, but it works because humans can suspend action when trust is low.

AI cannot. For an autonomous system, trust must be explicit and machine-readable. No trust signal means no action at all, or worse, action taken on the wrong signal with absolute confidence. This is why AI adoption stalls precisely where the stakes rise, as with enterprise autonomy. While that is the goal most data leaders want to establish, the reality is that the moment decisions matter, conditional trust becomes insufficient, and autonomy collapses.
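One way to picture explicit, machine-readable trust is as a gate an agent must pass before acting. The Python sketch below is a rough illustration under stated assumptions: the `TrustSignal` fields, the `gate_action` function, and the thresholds (a 0.95 quality score, a 24-hour freshness window) are all hypothetical and are not drawn from the survey.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from enum import Enum
from typing import Optional


class Decision(Enum):
    ACT = "act"
    ESCALATE = "escalate_to_human"


@dataclass(frozen=True)
class TrustSignal:
    """Hypothetical machine-readable trust metadata attached to a dataset."""
    certified: bool           # has an owner signed off on this dataset?
    quality_score: float      # 0.0 to 1.0, from automated quality checks
    last_validated: datetime  # when those checks last passed


def gate_action(
    signal: Optional[TrustSignal],
    now: datetime,
    min_quality: float = 0.95,                 # assumed threshold
    max_age: timedelta = timedelta(hours=24),  # assumed freshness window
) -> Decision:
    """Allow autonomous action only when trust is explicit, sufficient, and fresh."""
    if signal is None:
        # No trust signal: the agent must not act on its own.
        return Decision.ESCALATE
    if not signal.certified or signal.quality_score < min_quality:
        return Decision.ESCALATE
    if now - signal.last_validated > max_age:
        # A stale validation is treated the same as a missing one.
        return Decision.ESCALATE
    return Decision.ACT
```

In this sketch, a missing, weak, or stale signal hands the decision back to a person, which mirrors how humans suspend action when trust is low, while a strong and fresh signal lets the system proceed without negotiation.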

The Data Stack Was Built for Humans, We Need a Rebuild for Machine Users

The current state of the data stack was designed around a consequential assumption: the primary consumer of data is a trained human analyst.

Meaning was never expected to exist concretely and definitively in the system itself. It mostly existed in people’s heads, scattered documents, and meetings where ambiguity could be resolved socially. Validation was not formalized but negotiated. When numbers conflicted, humans stepped in to arbitrate, which is why data systems never grew beyond that dependency. To date, as noted earlier in this article, the large majority of respondents say they still encounter conflicting metrics.

AI breaks this model completely. Machines cannot compensate for fragmentation with intuition or context-sharing. They cannot infer which definition is “correct” this week, or which metric the business actually cares about right now. For machines, meaning must be embedded directly into the system: explicit, durable, and machine-readable. Anything implied might as well not exist.

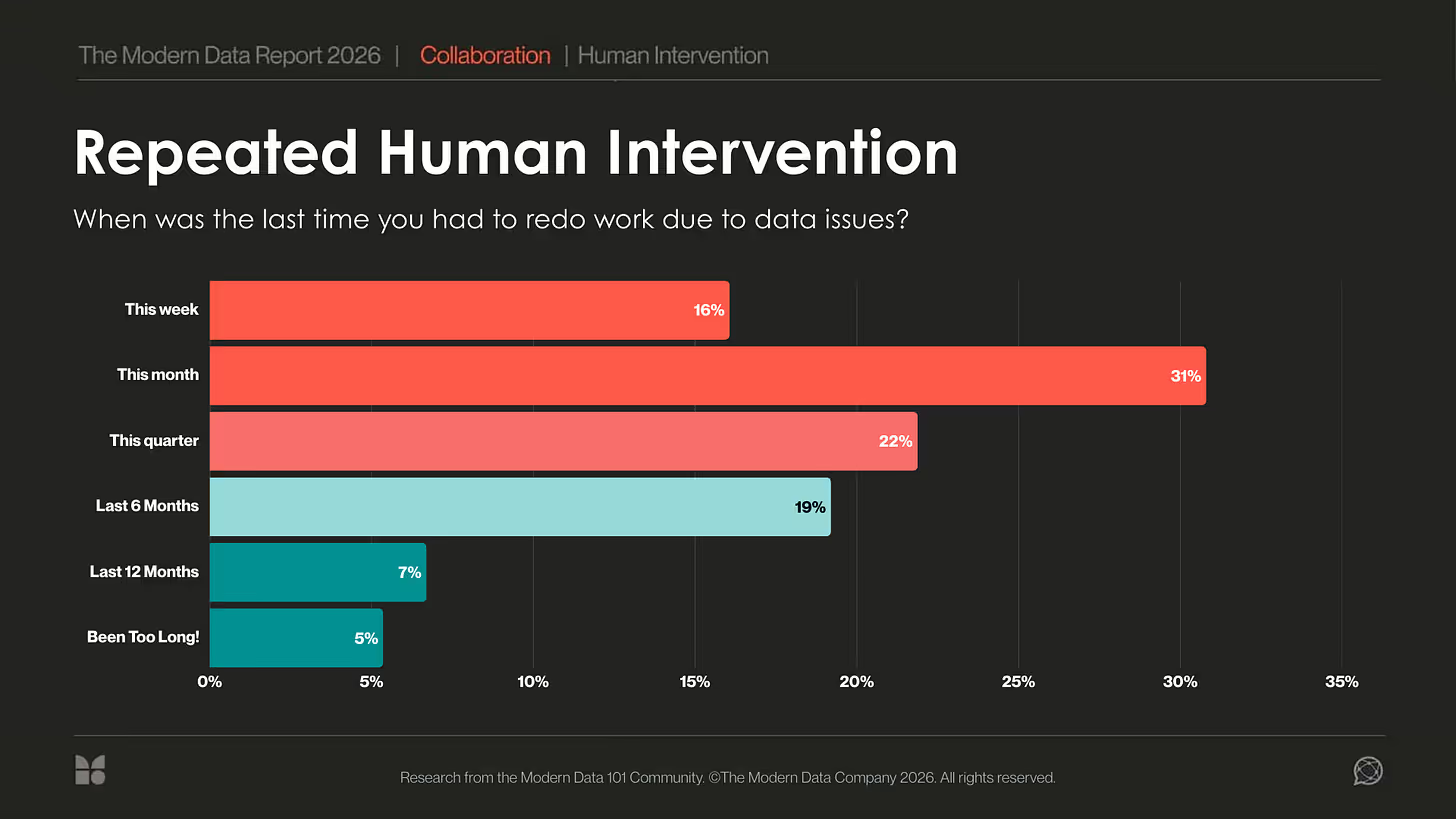

The consequences show up clearly in the data. Almost 70% experience rework within a single quarter. 66% report productivity loss. More than half point to strategic missteps, and nearly half link revenue loss directly to data issues.

This does not yet signal failed investments or goals, nor should it come as a surprise. It’s a predictable outcome of a stack optimized for human coping mechanisms.

The Industry Signals Convergence of the Data Stack as the Emerging Requirement

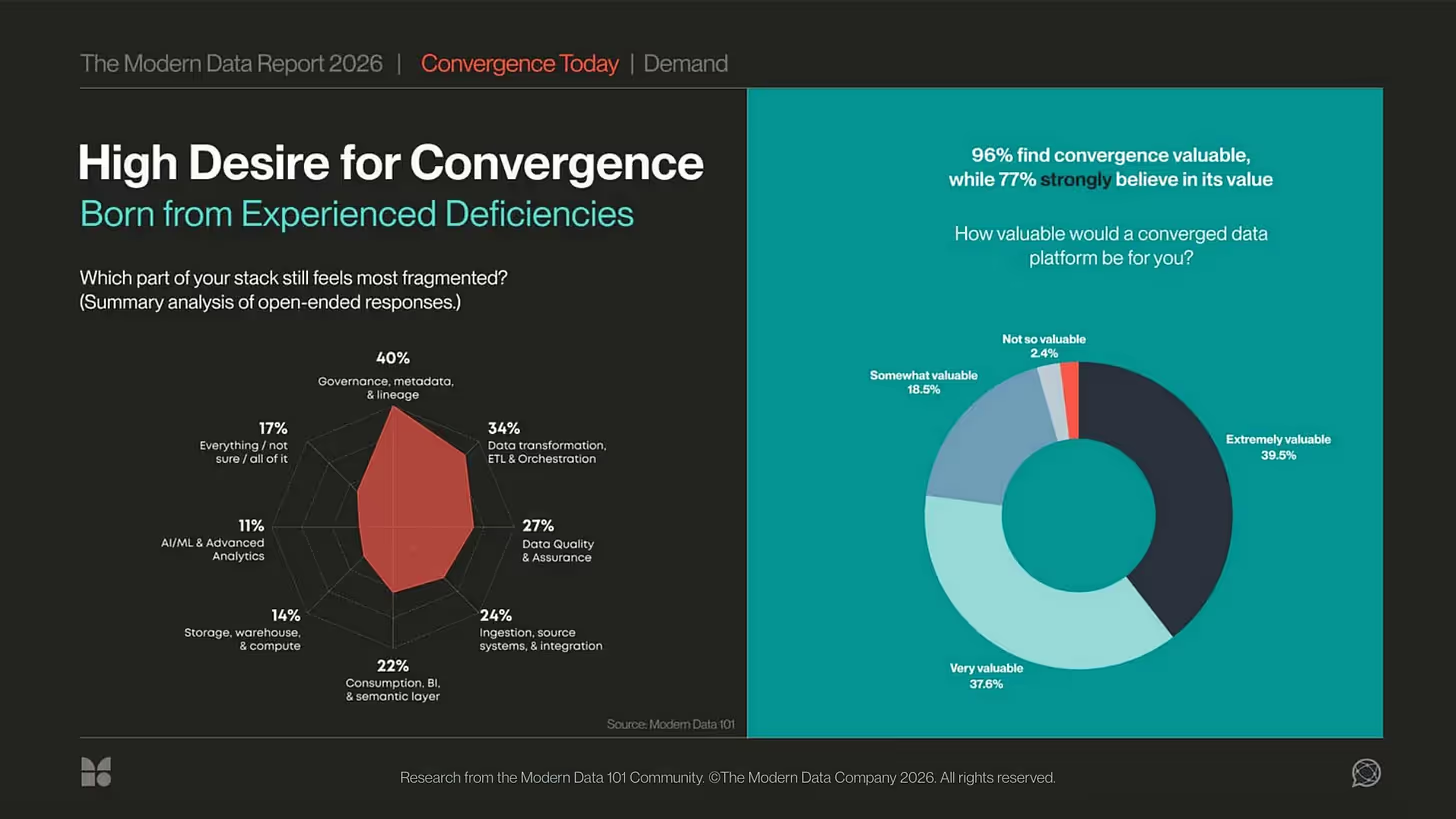

The report makes something clear that is easy to misread if framed incorrectly. 77% say a converged platform would be valuable, and nearly 40% go further, calling it extremely valuable. This is not a soft signal of interest or curiosity. Numbers this strong point to real strain.

The correct reframing is not vendor fatigue. Organizations are not tired of too many tools; they are exhausted by fragmented architecture. Each additional system introduces another boundary where meaning, trust, and context can leak or fail to interoperate.

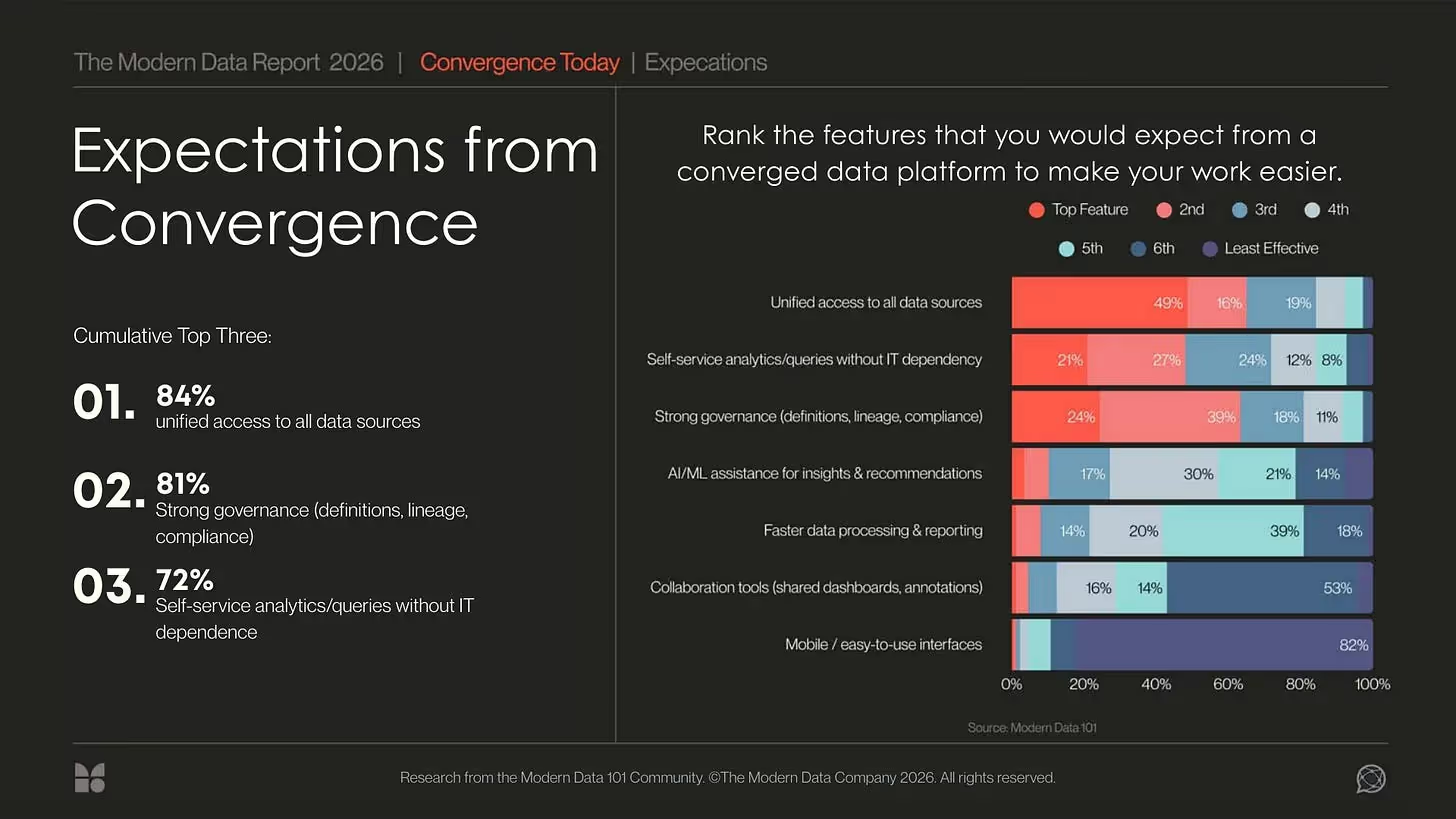

AI demands coherence through convergence of the data stack, and these are the industry’s expectations from convergence (ranked among the top three):

- Unified access to all data, cited by 84%, is not about convenience, but about eliminating blind spots.

- Strong and consistent governance, demanded by 81%, is what makes trust machine-readable.

- Self-service without IT dependency, at 72%, is what allows action to scale.

These are often mislabeled as “AI features.” They are the minimum conditions required for data activation. Without convergence, AI fails in business environments where the stakes are high, because the system on which it depends was never made whole.

Activation Is the New Benchmark for AI Maturity

For years, AI maturity was measured using technical proxies. Model accuracy, latency, and cost dominated evaluation frameworks, as if intelligence could be inferred from performance characteristics alone. These benchmarks made sense when AI was experimental and when the goal was to prove that models could work at all.

That phase is over. The only benchmark that now matters is activation: can AI reliably trigger action without human hesitation, especially in high-stakes business environments where the cost of inaccurate or hallucinated data is unacceptable?

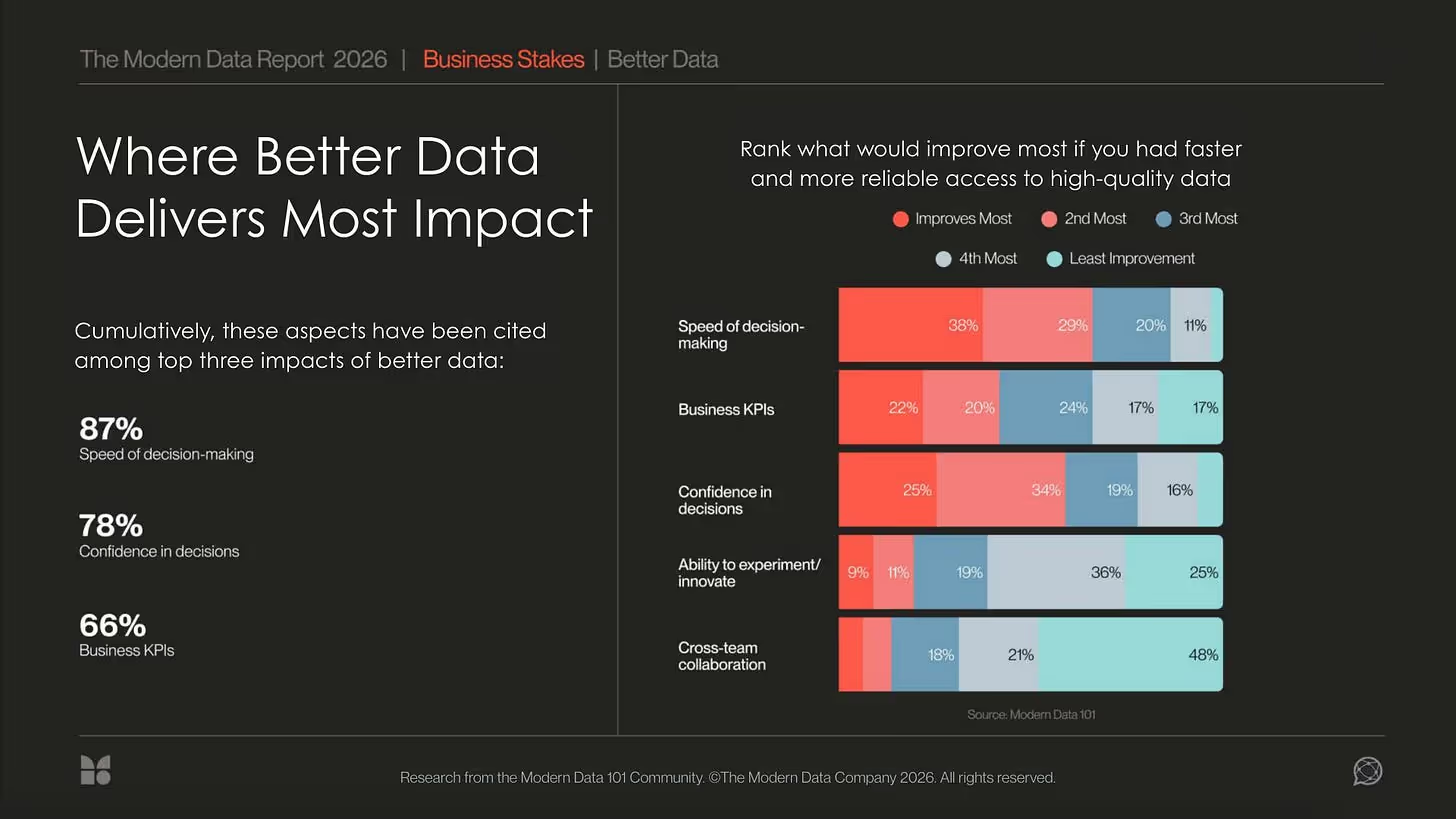

We asked practitioners what would improve most with faster, more reliable access to high-quality data. The responses show that speed improves first, cited by 87%, followed closely by confidence at 78%.

Only after this hesitation disappears do business KPIs begin to move. Not because models suddenly become smarter, but because decisions finally become executable.

AI maturity is no longer a question of intelligence. It is a question of decision readiness. When systems are trusted, contextualized, and converged, action becomes the default. Until then, even the most accurate models remain spectators.

From Tool Stacks to Data Systems Built for AI-Native Consumption

AI collapses the distance between data and decisions. What once tolerated delay, interpretation, and human mediation is now compressed into moments where action must be immediate and defensible.

In this compressed environment, fragmentation is no longer a nuisance, but an architectural failure. Stacks built as collections of tools cannot survive when the gap between insight and execution disappears.

Convergence emerges as the only viable response. It is not about consolidation for efficiency, but about continuity. Meaning must flow without translation, trust must persist without negotiation, and access must be unified rather than stitched together.

Only then does data become consumable for both humans (without the overheads) and machines (without the hallucinations and costs of lacking precision).

Get the Complete Report

The Modern Data Report 2026 is a first-principles examination of why AI adoption stalls inside otherwise data-rich enterprises. Grounded in direct signals from practitioners and leaders, it exposes the structural gaps between data availability and decision activation.

With hundreds of datapoints, this report reframes AI readiness away from models and tooling, and toward the conditions required and/or desired for reliable action.

Access the full report

540+ data leaders, subject matter experts, and practitioners, with over a decade of experience on average and from across 64 countries and 29 industries, came together to participate in the second edition of the Modern Data Survey.

- Understanding the Deterrents to AI-Readiness

- Uncovering Data Activation Gaps

- Zooming in on Leadership Goals with Data and AI

And much more!

Previous Year’s Report

230+ industry voices, with 15+ years of experience on average and from across 48 countries, came together to participate in the first edition of the Modern Data Survey.

- Locating specific time sinkholes

- Uncovering recurrent and unnecessary tooling gaps

- Projecting the desired data stack in demand

And much more!

Who we are

The Modern Data Company

The Modern Data Company is redefining data management for the AI era. The company's flagship platform, DataOS, serves as the foundational analytics and AI-ready data layer for any data stack. This unified platform gives enterprises the ability to build and deploy data products, simplify data management, and optimize data costs. DataOS frees teams to focus on driving real value from data, accelerating the journey to becoming a truly data-driven and AI-enabled organization.

The Modern Data 101 Community

Modern Data 101 is a publication and community for data leaders, practitioners, and visionaries building data platforms, designing data teams, and architecting the invisible. In a world of countless tools, trends, and templated thought leadership, Modern Data 101 slows things down to ask deeper questions: Why was this actually built? And what business problem can it solve? We explore architecture, semantics, and organisational design, the invisible foundations that determine whether data works.