Download Modern Data Survey Report

Oops! Something went wrong while submitting the form.

Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community

Latest reads...

TABLE OF CONTENT

Data ecosystems have geared up to become more distributed and tightly aligned to business outcomes. With continuous data product delivery, streaming pipelines, and AI agents in the loop, teams must move beyond detecting failures; they need instant clarity on why something broke…

🎢 The global data observability market size is expected to reach USD 9.7 billion by 2034 from USD 1.7 billion in 2025 at a CAGR of 21.3%- Dimension Market Research

This shift is why data observability has moved from a “nice-to-have” to strategic infrastructure. It provides the visibility and context required to keep data products reliable and ensure observability capabilities become standard across modern data stacks.

In this piece, we’ll unpack what data observability really means today, and dig into the key discussions the industry often overlooks but urgently needs to address.

[report-2025]

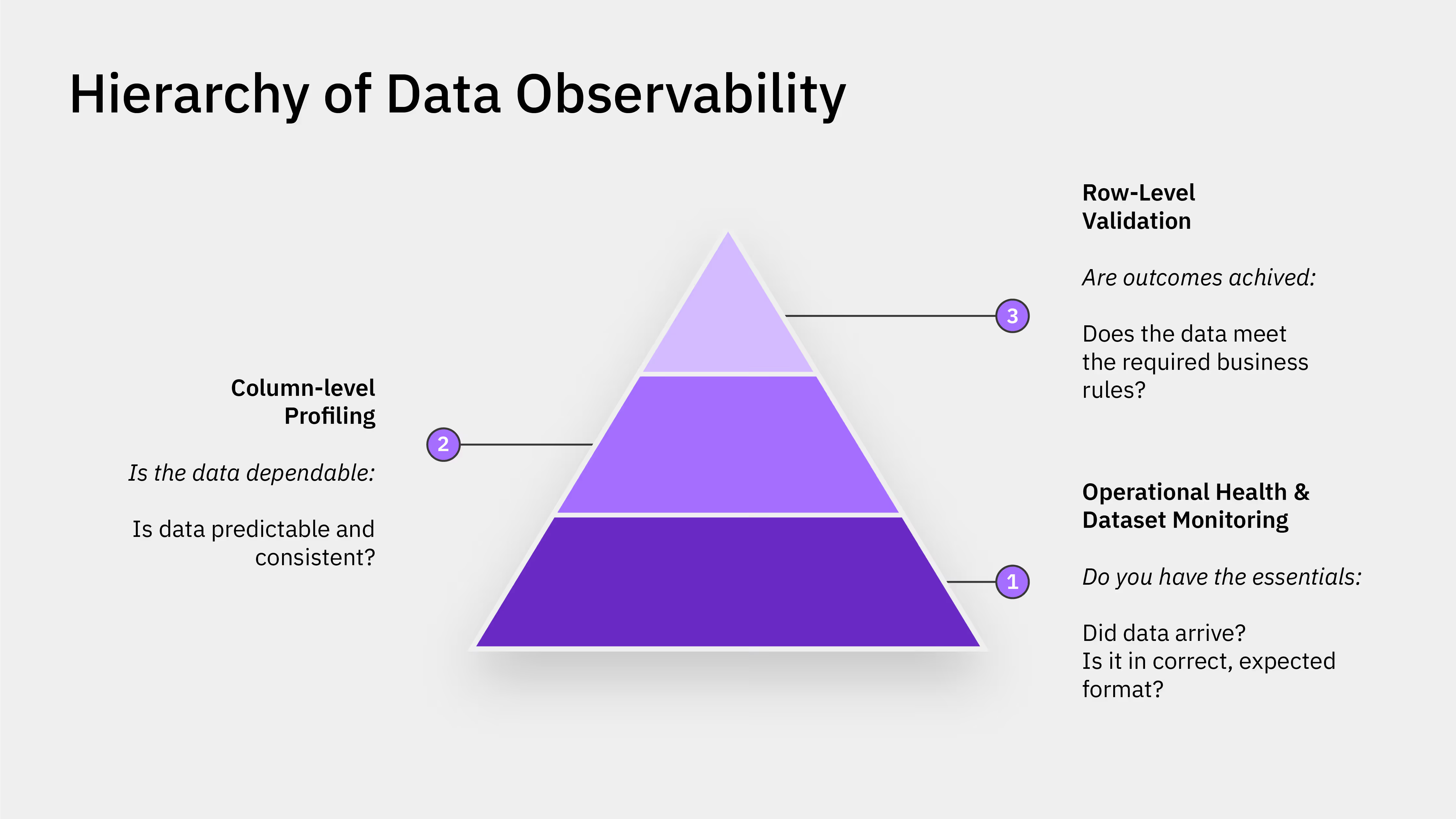

Data observability is known as the capability of understanding the state of data systems by measuring different metrics or signals, using them for the detection, explanation, and prevention of failures.

Data observability refers to the practice of monitoring, managing and maintaining data in a way that ensures its quality, availability and reliability across various processes, systems and pipelines within an organization ~ IBM

For 2026 and beyond, this definition now also includes automated anomaly detection, active metadata, and model integrations, including application telemetry, so that teams can trace data to downstream AI-driven decisions. Such a broad view links data quality and observability as a single discipline underpinning trustworthy AI and also reusable data products.

Being explicit helps!

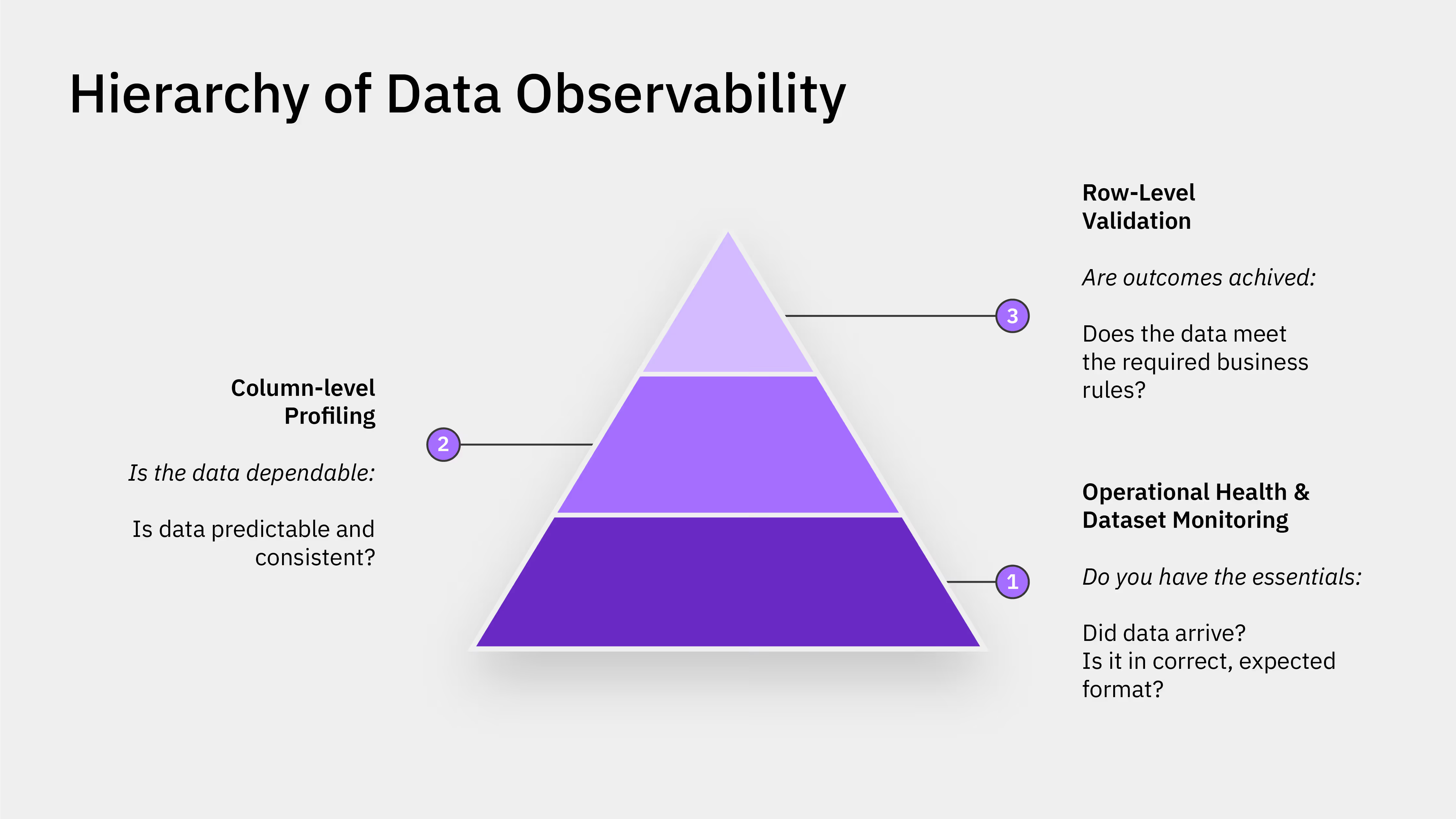

Observability vs. monitoring is not semantics. While monitoring alerts you that something broke, observability helps you understand and get answers to the why and the where.

Monitoring is rules-based, where metrics such as thresholds and counts come into play, while observability combines signals and context, which also includes observability tracing and lineage, so that engineers and data owners can timely diagnose the root cause.

In modern pipelines, relying only on monitoring leads to time-consuming incident cycles. Observability significantly cuts down that time by ensuring causal visibility.

AI and data products should have predictable inputs. Whenever there’s a distribution drift or a table becomes stale, a lot of things can go wrong: analytics can go wrong, models can hallucinate, and SLAs can also slip.

AI observability relies on robust data observability. If inputs can’t be traced or validated, model-level telemetry will only tell an incomplete story. Investing in data observability for data products prevents silent failures, safeguards model performance, and dramatically simplifies compliance and audits.

Let’s think from a product’s perspective: Data observability is fundamentally about whether a data asset or a data product behaves in a reliable, predictable, and user-trustworthy way.

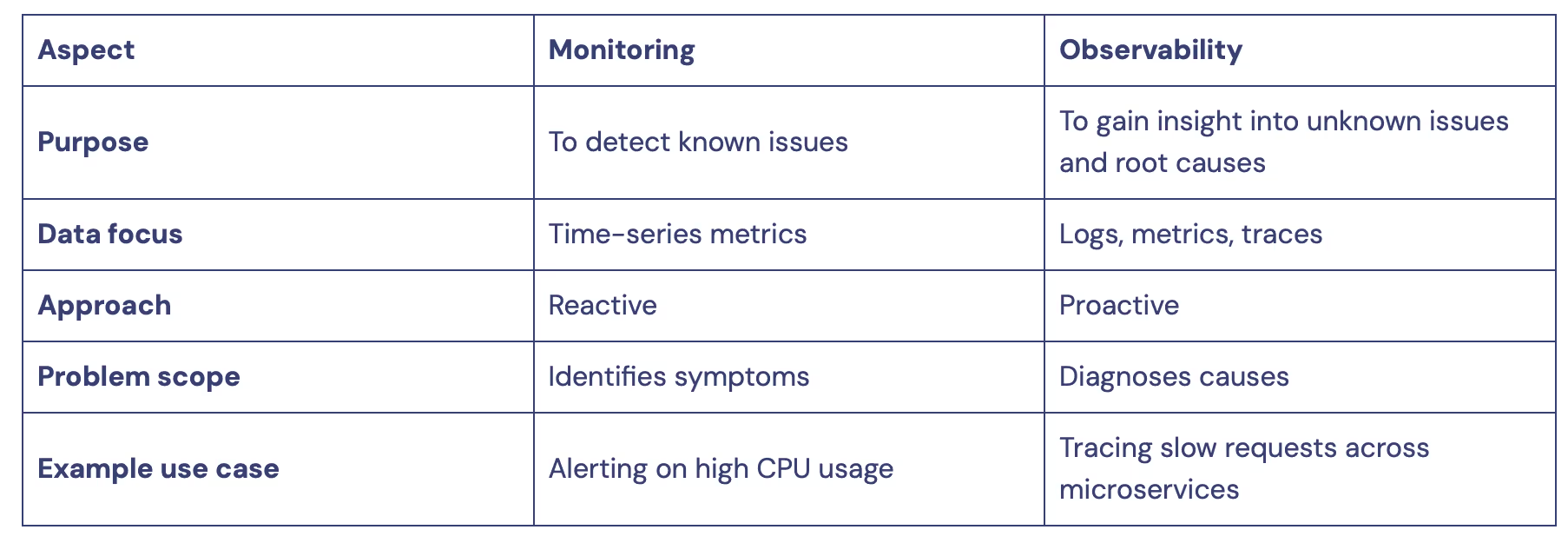

Given below are the five pillars of data observability that teams should evaluate and measure. They also reflect industry standards and the fact that observability, which is platform-first and model-aware, is now really important for enterprises.

This pillar measures when data was last updated and also evaluates if it meets the expected SLAs. Freshness is critical for streaming models and real-time decisions.

Freshness enables a stable user experience continuity, unlike stale data that breaks trust, confuses users, and forces them to create workarounds.

When approaching data as a product, freshness becomes a part of the product contract.

Distribution is responsible for monitoring the statistical shape of a data product’s features. Distribution shifts can quietly distort meaning and break data products or models.

From a product perspective, distribution observability revolves mainly around protecting interpretation, preventing users from being blindsided by little behavioural changes with the potential to alter performance, insights, or meaning. It can be thought of as the data equivalent of a product suddenly responding differently to the same input, cluttered and trust-eroding.

The volume pillar detects duplicate loads, missing batches, and spikes. Volume anomalies are mostly the earliest sign of ingestion issues. Innovative data observability tools use volume patterns to close in on and prioritise incidents automatically.

Volume anomalies need to be treated as product health regressions.

Embedded end-to-end lineage in an observability platform enables pinpointing volume anomalies like whether the deviation came from an upstream domain, a schema evolution, or a business rule change, so teams don’t waste hours debugging the wrong layer.

Schema monitors structural changes, like types, renamed columns, or nullable flags. Schema changes are the most significant cause of production breakages.

The best platforms with their schema in check are able to combine active metadata, contracts, and early validation to stop issues before they lead to degradation or damage.

Schema changes should be surfaced as product contract changes, instead of any surprise breaking events.

Observability needs to combine-

schema diffs + lineage + consumer impact analysis

So teams know exactly who will feel the change.

The goal: no accidental downstream breakages, ever!

It provides complete traceability from source to the final consumer. Lineage lends context for impact analysis, thereby boosting the remediation pace. Data observability with tracing connects lineage with the system as well as model traces so that teams can navigate the cause and effect across the entire stack.

Lineage is a strategy layer enabling the other observability pillars: it helps in root-cause analysis, connects anomalies to owners, drives improvement, and is also responsible for ensuring safe change management. With lineage, observability becomes intelligent.

It’s not possible to separate model health from data health in real-world use cases. AI observability and data quality best practices now overlap. This is why teams need to have joint dashboards that reflect input drift, model metrics, and downstream user impact.

With unified observability, it becomes easier to determine whether model performance degradation arises from data drift, a change in consumption patterns, or a feature calculation bug.

Learn more about how you can build your AI agent observability system here ↗️

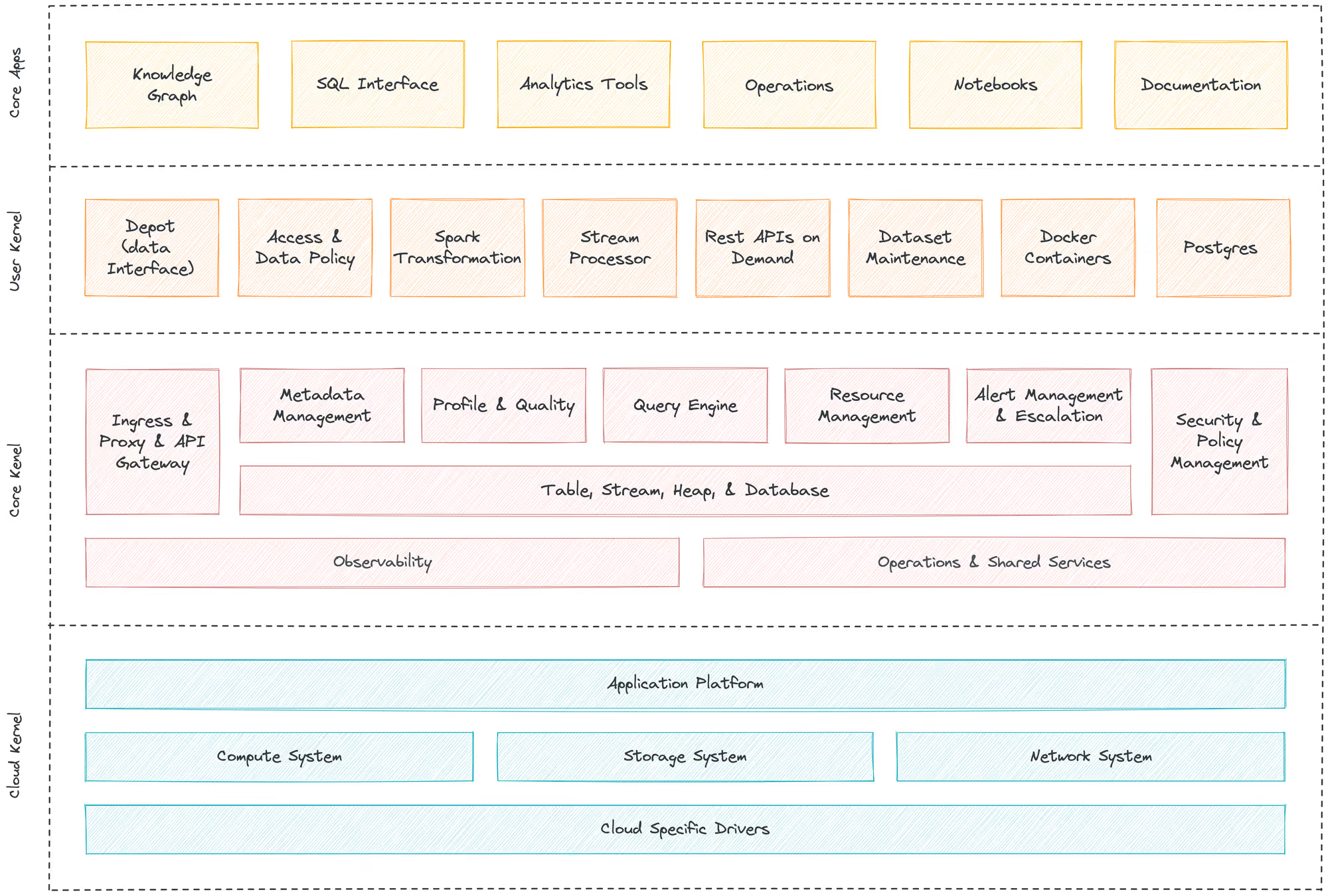

Data observability is a fundamental, built-in offering of Data Developer Platforms.

When observability gets embedded right at the platform level, developers are ensured to receive immediate feedback, data product owners inherit trust by default, and operational friction witnesses a significant decline.

As a DDP leverages a unified, consistent infrastructure, it inherently integrates metadata, lineage, monitoring, and governance into the basic structure of every data product. This means observability isn’t bolted on as an afterthought! It is a part of how every data product is designed, delivered, and maintained.

[related-1]

With DDP, you declare data products explicitly: inputs, transformations, SLOs, outputs, metadata, and contracts.

This makes freshness, schema, volume, distribution expectations, and lineage a part of the data product contract. When your data products have declared expectations, observability becomes a natural mechanism to enforce and validate those promises.

A Data Developer Platform treats metadata as a first-class citizen.

Semantics, lineage, provenance, and versioning are captured automatically, giving every data asset and every data product its full context from day one. You always know where the data came from, how it evolved, and who depends on it downstream.

In case of observability alerts cropping up, like a distribution drift or a schema slip, a schema change, teams don’t struggle anymore.

A Data Developer Platform offers modular building blocks to ensure that data products across domains share metrics, definitions, templates, and patterns.

That standardisation drastically reduces divergence, configuration drift, and “observability blind spots.” In product-thinking terms, observability becomes the same across all data products, making reliability scalable rather than artisanal.

DDP enables data developers (or domain teams) to deploy, manage, and monitor products through APIs, declarative specs, and templates.

Observability metrics such as schema enforcement, lineage, and metadata propagation get automated, lowering the barrier for consistent observability across the entire data ecosystem.

[data-expert]

As data systems advance and evolve, data observability is at the cusp of undergoing a transformation in itself, where it becomes something more, from just reactive monitoring to intelligent, proactive, and controlled.

Data products will have built-in SLAs, usage metrics, quality scores, and health indicators, ensuring better trust, reuse, and discoverability across multiple domains.

Rise of the Model Context Protocol (MCP)

Standardised metadata exchange will enable AI agents to natively consume observability signals, empowering autonomous decisions in platforms.

Data Developer Platforms will pave the way.

DDPs will start embedding observability by default, allowing teams to build, test, deploy, and monitor data products without relying on centralised bottlenecks.

Data observability is now the operational backbone for reliable AI and scalable data products.

In 2026, organisations treating observability as an integrated, platform-level capability, and not as an afterthought, will eliminate most of their monitoring and quality issues: fewer incidents, quicker debugging, and higher trust in data-driven outcomes.

If you are an enterprise that builds data assets and runs AI at scale, make observability a first-class part of your platform strategy today.

Even smaller teams get to benefit, as data issues don’t depend on or scale with team size. Data observability helps to catch silent errors such as gaps in freshness, schema drift, or volume anomalies, before they create an impact on AI models and analytics.

Data monitoring or data quality checks are known to flag issues that are known, but data observability goes deeper to combine distribution, freshness, volume, lineage signals, as well as schema to understand why something went wrong in the first place.

Simran is a content marketing & SEO specialist.

Simran is a content marketing & SEO specialist.

Find more community resources

Find all things data products, be it strategy, implementation, or a directory of top data product experts & their insights to learn from.

Connect with the minds shaping the future of data. Modern Data 101 is your gateway to share ideas and build relationships that drive innovation.

Showcase your expertise and stand out in a community of like-minded professionals. Share your journey, insights, and solutions with peers and industry leaders.