Download Modern Data Survey Report

Oops! Something went wrong while submitting the form.

Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community

Latest reads...

TABLE OF CONTENT

It’s hard to find an enterprise today that isn’t betting big on AI. From automated customer service to predictive analytics and Generative AI infrastructure, investments are at an all-time high. Yet, the return on these investments tells a different story. Despite the scale of ambition, most organisations are still struggling to realise measurable value from AI.

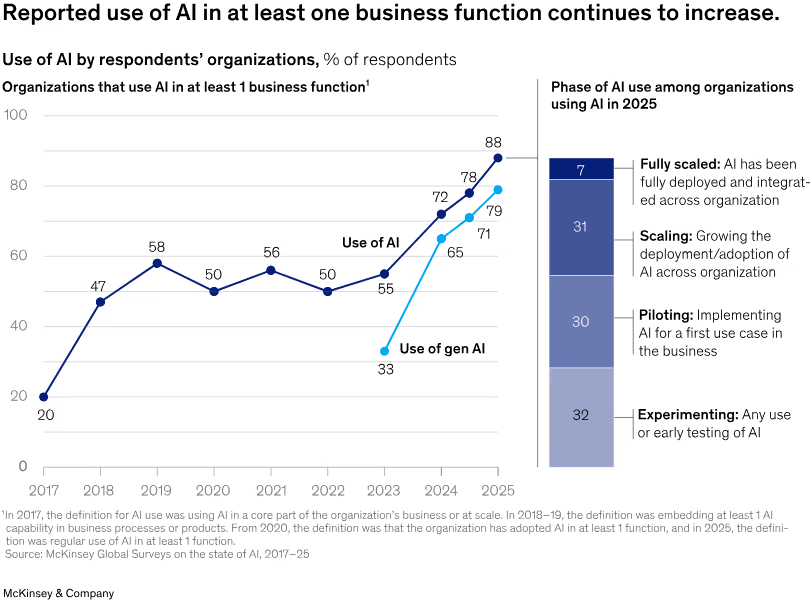

Most organisations are still experimenting; nearly two-thirds haven’t begun scaling AI enterprise-wide (Source: McKinsey Insights)

This widening AI value gap, the distance between the promise of artificial intelligence and the outcomes it actually delivers, reflects something deeper: a systemic disconnect between how enterprises build AI capabilities and how they operationalise them.

So, what’s really at fault here? Is it the technology itself, or the fragmented, brittle data foundations on which we keep trying to stack our AI aspirations?

The answer is increasingly clear. The problem isn’t a lack of models or talent. It’s that our infrastructure wasn’t designed for AI value realisation. The next generation of data platforms will need to change that, bridging the gap between experimentation and execution, and finally helping organisations accelerate AI value at scale.

The AI value gap captures a simple truth: even as enterprises invest heavily in models, platforms, and talent, the impact curve remains disappointingly flat. Most organisations can build impressive prototypes, few can turn them into sustained, measurable value.

So where does the system break down? The gap often traces back to four foundational cracks in the enterprise data environment:

AI systems are only as good as the data they learn from, yet most organisations underestimate the cost of getting data ready. Models trained on incomplete or inconsistent data produce unreliable outputs that can’t be trusted in decision workflows.

Data readiness means every dataset carries the context, lineage, and governance needed for use across teams and AI systems. Without it, models spend more time compensating for data defects than learning patterns that create value.

Data quality is still treated as an afterthought, audited after deployment instead of engineered into pipelines. This reactive approach leads to cascading errors in model performance, compliance, and business trust.

The AI value gap widens every time a model must bridge what the platform failed to deliver. Until data quality and readiness become continuous, discoverable, and self-serve platform capabilities, scaling AI value will remain aspirational.

Different teams maintain separate environments for analytics, ML, and operations. As a result, data transformations, integrations, and even model training pipelines are rebuilt repeatedly. This fragmentation inflates cost and slows delivery. Worse, it blocks institutional learning; every AI project becomes a one-off experiment.

True AI scale comes from the reuse of features, datasets, models, and orchestration patterns. But most enterprises still lack the systems to turn these components into shared, discoverable assets. Without a reuse layer, each use case begins from scratch, consuming time that should be compounding value instead.

AI teams are often measured by innovation velocity, but not business continuity. Success gets defined as “launching a POC,” not “delivering a maintained product.”

This encourages experimentation loops with no path to production, leaving even successful pilots stranded before they deliver value.

So this isn’t a modelling problem, rather a data and infrastructure maturity problem, a symptom of environments that weren’t built to sustain productised AI outcomes. Unless enterprises fix how data, infrastructure, and governance interlock, no algorithm, however sophisticated, will close the AI value gap.

Most organisations aren’t short on AI initiatives; they’re short on outcomes. Generative AI pilots are everywhere, yet few ever make it past the demo stage. Models are built, showcased, and abandoned because the surrounding ecosystem, data pipelines, infrastructure, governance, and ownership don’t exist to support them.

Without the right platform foundation, AI turns into a cost centre disguised as innovation. It consumes compute, data, and people without creating lasting value. As platformengineering.org aptly puts it, AI is an amplifier of what’s already there; if your architecture is fragmented or your governance is reactive, AI will only magnify that dysfunction.

To extract sustainable value, organisations must shift from one-off experiments to repeatable production. That means building for reuse and scale: treating data pipelines, agents, and generative models as composable components governed through a shared platform. This is where the real acceleration happens, when AI ceases to be a collection of disconnected proofs of concept and becomes an integrated capability powered by infrastructure that’s designed to evolve.

The economics of AI have never been neutral. They favour organisations with scalable data, elastic compute, and the governance discipline to connect the two. In other words, the ones with the right AI platform already behave differently; they compound value. Everyone else just runs experiments.

Small-scale experimentation looks good on paper but doesn’t translate into enterprise economics. A model that works in isolation adds no cumulative advantage unless it plugs into AI-ready data pipelines and governed feedback loops. Without that continuity, every new model starts from scratch, consuming more resources than it returns.

Learn more about AI-ready data here.

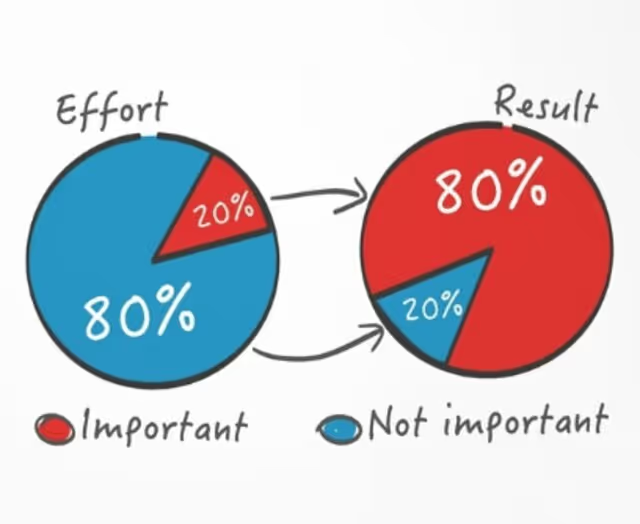

Across research and industry data, the pattern repeats: roughly 80% of AI projects stall before production, and the rest often fail in the final 20%, the integration and operationalisation stage. That last stretch is where organisational and infrastructural maturity decide whether artificial intelligence becomes an enterprise asset or another cost centre.

Most enterprises, faced with slow or inconsistent AI outcomes, double down on technology. They buy or build new AI platforms hoping to solve for scale, speed, or complexity. Yet despite the investment, few of these platforms deliver sustainable value from AI.

Three familiar pitfalls explain why:

Every discussion about AI value eventually circles back to one thing, the foundation.

An AI-ready data platform is that foundation: a system built not just to store data or deploy models, but to sustain continuous value creation across the AI lifecycle.

At its core, this kind of platform behaves more like a product ecosystem than a tech stack. It brings together data, models, and automation under shared principles of reuse, governance, and interoperability. Its defining traits are easy to recognise, but hard to replicate:

Unified Metadata and Lineage

Every dataset and model is traceable from source to output. Lineage should ideally not be an afterthought and needs to that allows teams to trust, audit, and reuse assets confidently.

Related Reads:

Data Lineage is Strategy: Beyond Observability and Debugging

Composable Data Products

Instead of raw tables and feature stores locked in silos, the platform exposes data products, clean, contextual, and reusable components that can serve multiple AI use cases without reinvention.

Embedded Governance by Design

Policies, access rules, and compliance checks are embedded in the platform itself. Governance guarantees that every action remains compliant and auditable by default.

Related Reads:

Solve Governance Debt with Data Products

Elastic Compute and Generative AI Infrastructure

An AI ready platform scales seamlessly across hybrid or multi-cloud environments, providing the flexibility to run everything from small models to complex Generative AI workloads without performance trade-offs.

A Data Developer Platform connects data infrastructure, AI platforms, and AI infrastructure into a single, interoperable layer. It eliminates the gaps that typically exist between data pipelines, model workflows, and governance systems.

Key integration capabilities include:

This approach shifts governance from manual oversight to embedded control. Compliance, auditability, and lineage are no longer separate workflows; they are intrinsic to how data and models interact.

By aligning data systems, governance, and AI workloads under one infrastructure, data developer platforms ensure AI platforms can scale without fragmentation, closing the feedback loop between data quality, model performance, and business value.

A Data Developer Platform makes data usable for AI by enforcing product thinking at the infrastructure level. Every dataset becomes a Data Product, discoverable, governed, and interoperable across systems. This isn’t another layer of tooling; it’s how the underlying platform standardises trust and reusability at scale.

Each Data Product encapsulates the core properties AI systems depend on:

The result: data becomes model-ready by default. Teams spend less time fixing inputs and more time optimising outputs. Experimentation cycles shorten, deployment becomes faster, and governance stops being an external process, it’s built into how data operates.

Q1. What is the funding gap for AI?

The AI funding gap refers to the mismatch between the high investment in AI model development and the underinvestment in the supporting data and infrastructure needed to operationalise those models. While budgets flow into building AI capabilities, many organisations neglect funding for data quality, governance, and platform readiness, leading to stalled pilots and poor ROI.

Q2. Which technology will enable businesses to expand AI and operate new training algorithms?

Businesses will rely on modern AI infrastructure, including scalable compute, unified data platforms, and MLOps pipelines, to expand AI and support new training algorithms. A Data Developer Platform (DDP) further enhances this by ensuring high-quality, governed, and model-ready data flows seamlessly into AI systems, enabling faster experimentation and more reliable scaling.

.avif)

Ritwika is part of Product Advocacy team at Modern, driving awareness around product thinking for data and consequently vocalising design paradigms such as data products, data mesh, and data developer platforms.

Ritwika is part of Product Advocacy team at Modern, driving awareness around product thinking for data and consequently vocalising design paradigms such as data products, data mesh, and data developer platforms.

Find more community resources

Find all things data products, be it strategy, implementation, or a directory of top data product experts & their insights to learn from.

Connect with the minds shaping the future of data. Modern Data 101 is your gateway to share ideas and build relationships that drive innovation.

Showcase your expertise and stand out in a community of like-minded professionals. Share your journey, insights, and solutions with peers and industry leaders.