
Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community


Even today, analysts are trapped in "report maintenance hell," spending more time updating static dashboards than actually hunting for the next strategic advantage.

Meanwhile, the executive suite sees sparkly AI demos but can't plug them into the day-to-day BI workflow because the data underneath is not ready. BI has clearly hit a wall.

The problem lies in the foundation of today’s Business Intelligence Platforms. Every attempt to inject AI into the BI process stalls at the same bottleneck: fragile data that isn't productised, reusable, or production-grade.

Enter Lean AI for Business Intelligence: a strategic pivot to AI for business that is practical, fast, and cost-effective. It transforms passive reports into proactive intelligence and turns data into an asset that is reusable, contextualised, and ready for the next generation of predictive agents.

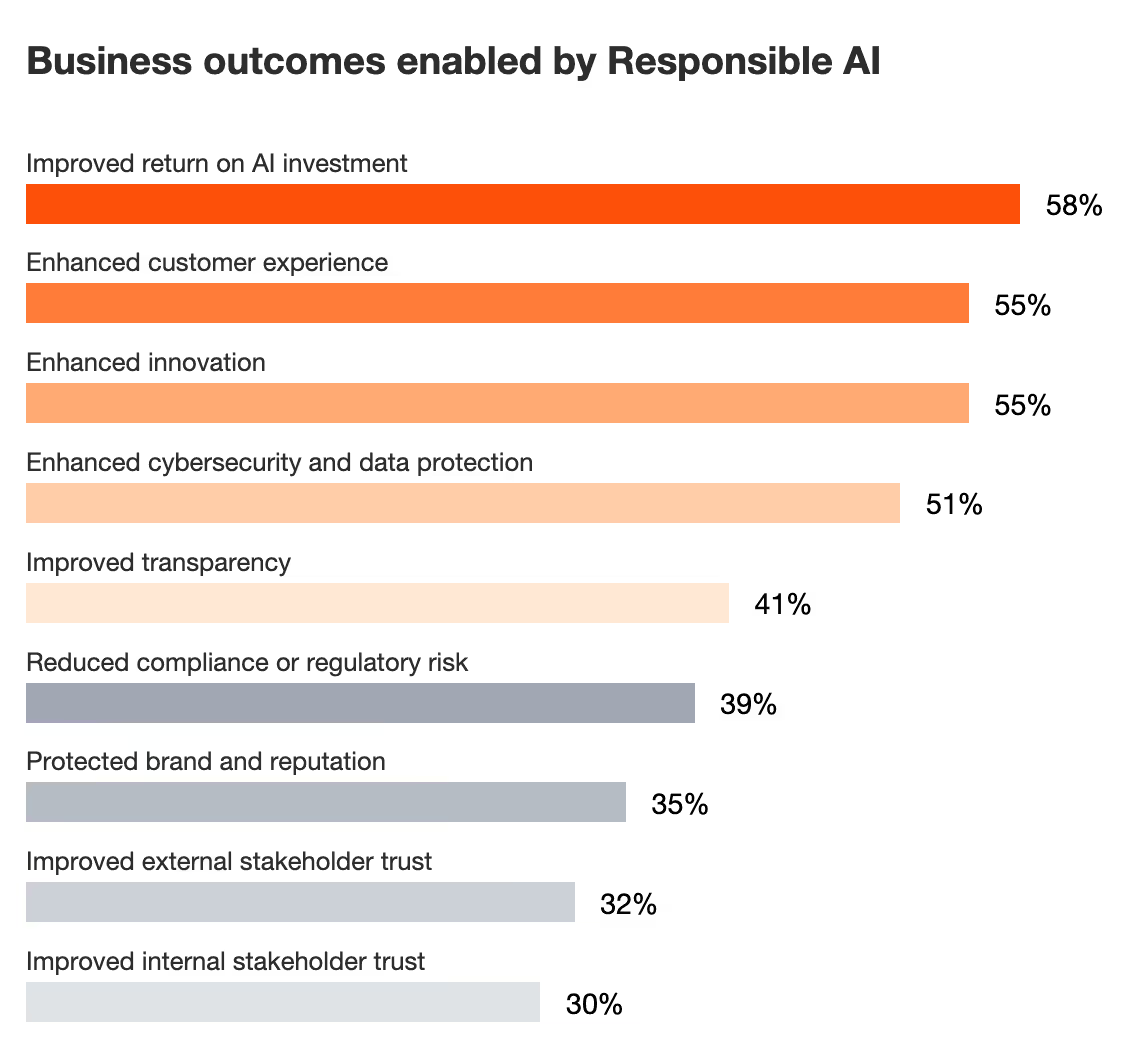

ℹ️ 46% of executives say that differentiating their organisation, their products and their services is one of their top-three objectives for investing in Responsible AI practices. If AI is the goal, the BI infrastructure must become its reliable launchpad.

Source: PwC’s 2024 Responsible AI Survey

😉The evolution is clear: we are moving from a passive, reporting-only BI stack to an AI-first stack built on self-service data products. This is the only path forward to solve the chronic problem of AI adoption, which is slow because BI data is not production-grade.

What is the difference between a high-cost, high-risk AI pilot that never scales and a genuinely impactful AI strategy? It’s Lean AI.

A lean AI strategy prioritises quality, purpose-driven data and streamlines the processes for building AI and machine learning (ML) models. By focusing on reducing tech debt, automating governance, and optimising workflows, enterprises maximise their AI investments while controlling costs and risks. These are essential capabilities during periods of market volatility. ~ Srujan Akula on Datanami

The Lean AI approach makes data a prerequisite for AI modelling. Data is no longer an afterthought for state-of-the-art AI models. Lean AI is an engineering philosophy that states AI investment should prioritise quality, controlled data environments over spending endless cycles on model tuning or infrastructure heavy-lifting.

When data is reliable, governed, and easily discoverable as a Data Product, the AI agent's job becomes inherently faster, cheaper, and less prone to inaccuracies.

For competent Business Intelligence Platforms, Lean AI is the antidote to the "diminishing returns" problem.

The traditional BI approach is backwards-looking: it tells you what happened. But that method forces analysts to be constant data janitors. Lean AI solves this by introducing reusability and prediction.

By structuring data into Data Products, we eliminate the need for analysts to reinvent the wheel every time. This not only frees up resources but also cuts down the insidious cost of technical debt.

📝 Related Reads

Solving Governance Debt with Data Products ↗️

Are You Feeding Your Data Debt↗️

Data Products elevate BI from a passive reporting function to a proactive intelligence engine. Instead of an analyst manually slicing data, a Lean AI-powered system can use agents to monitor those Data Products in real-time, instantly flagging anomalies, predicting customer churn, or automatically generating an executive summary. This is what a proactive insight looks like, a state that the limited capabilities of static dashboards cannot reach.
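To make "instantly flagging anomalies" concrete, here is a minimal sketch of one simple way an agent could do it: compare the latest value of a metric against the mean and standard deviation of its recent history. The function name `is_anomalous`, the sample numbers, and the threshold of 3 standard deviations are all illustrative assumptions, not something prescribed by any particular BI platform.

```python
from statistics import mean, stdev


def is_anomalous(history, latest, threshold=3.0):
    """Return True if `latest` sits more than `threshold` sample standard
    deviations away from the mean of `history` (hypothetical helper)."""
    if len(history) < 2:
        return False  # not enough history to estimate spread
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        return latest != mu  # flat history: any change is unusual
    return abs(latest - mu) / sigma > threshold


# Illustrative daily order counts read from a data product (made-up values).
history = [100, 102, 98, 101, 99, 103, 97, 100]
print(is_anomalous(history, 250))
print(is_anomalous(history, 101))
```

A production agent would likely use something more robust (seasonality-aware baselines, for instance), but the shape is the same: a trusted metric goes in, a flag comes out, and no analyst has to be watching a dashboard.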

😉If you are still asking your analysts to export a CSV and paste it into a slide deck, you are not using a BI tool; you are using expensive data calligraphy.

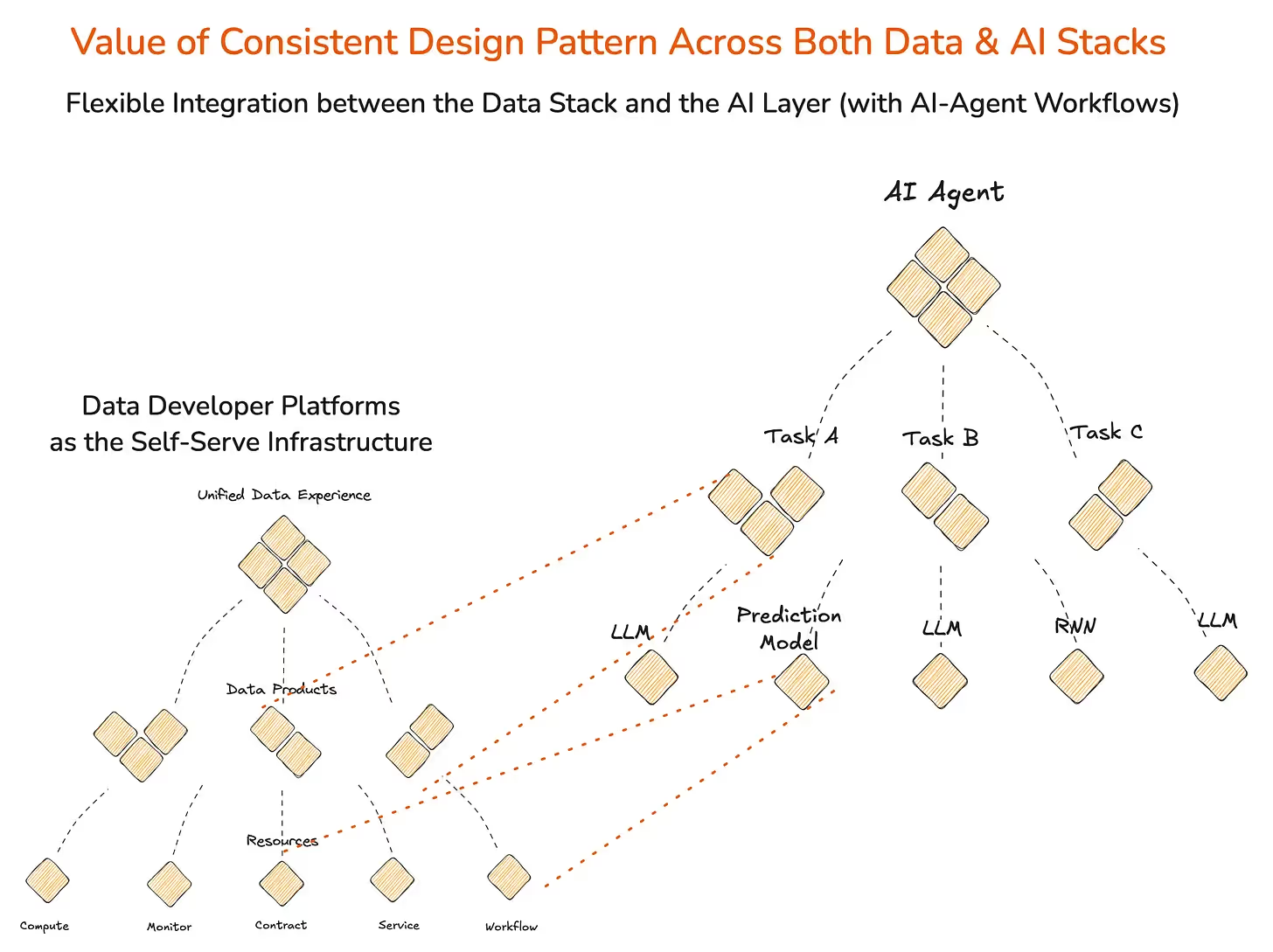

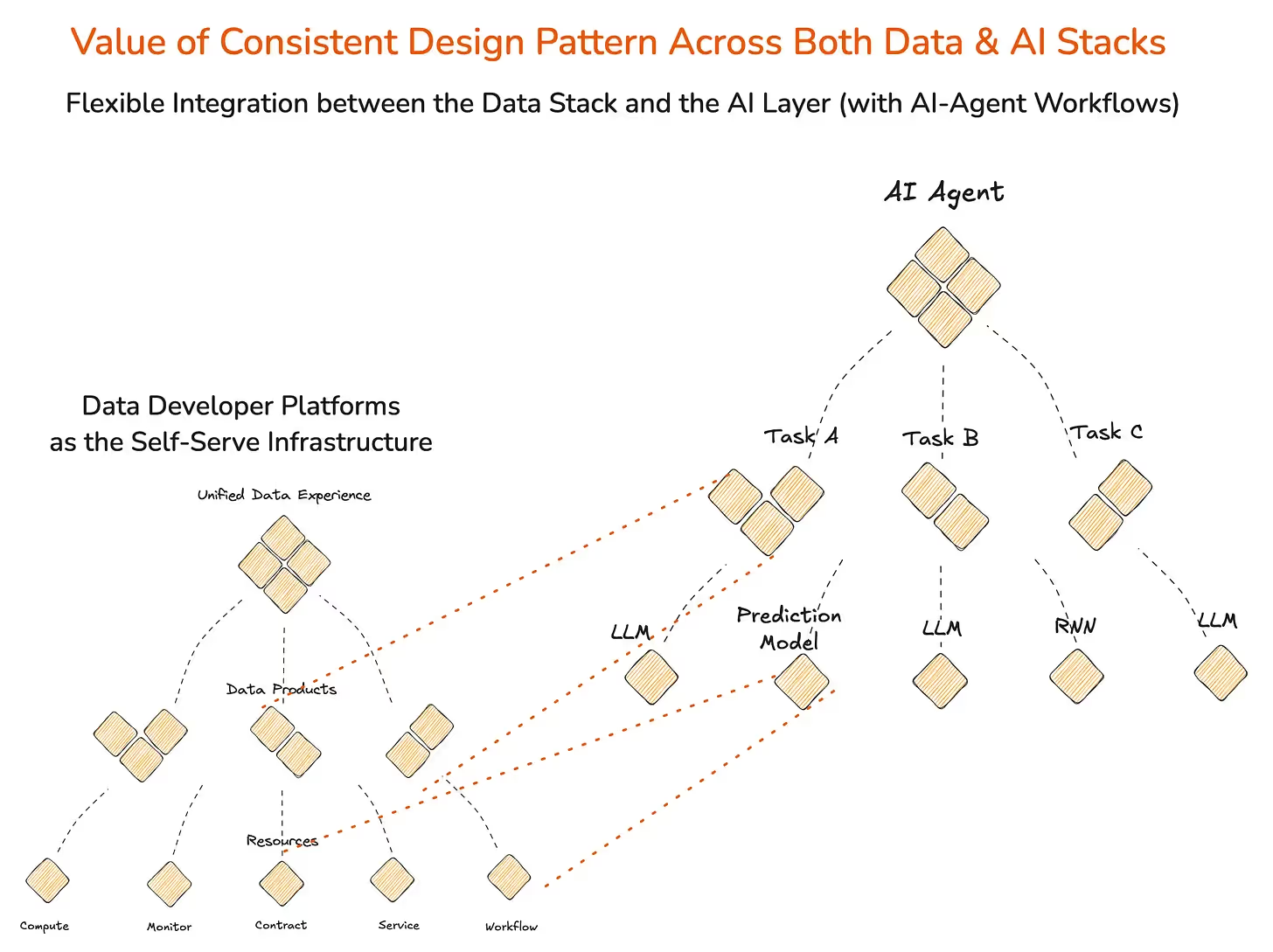

The new AI-first Business Intelligence Platforms demand an architectural shift away from monolithic data warehouses and siloed reporting. The modern stack is not a linear pipeline; it is a layered, composable ecosystem designed for speed and reusability.

Here are the five essential layers that enable Lean AI in Business Intelligence Platforms:

The base layer must unify the data sources and compute. Think of modern Lakehouse 2.0 architectures that allow for composable compute and structured, real-time access to both data lake and data warehouse patterns. The composable architecture also makes it easier to embed AI in BI workflows. This layer guarantees that all data is available, governed, and ready for consumption without requiring data movement or heavy ETL, both of which are the antithesis of "Lean."

📝 Related Read

How AI Agents & Data Products Work Together to Support Cross-Domain Queries & Decisions for Businesses ↗️

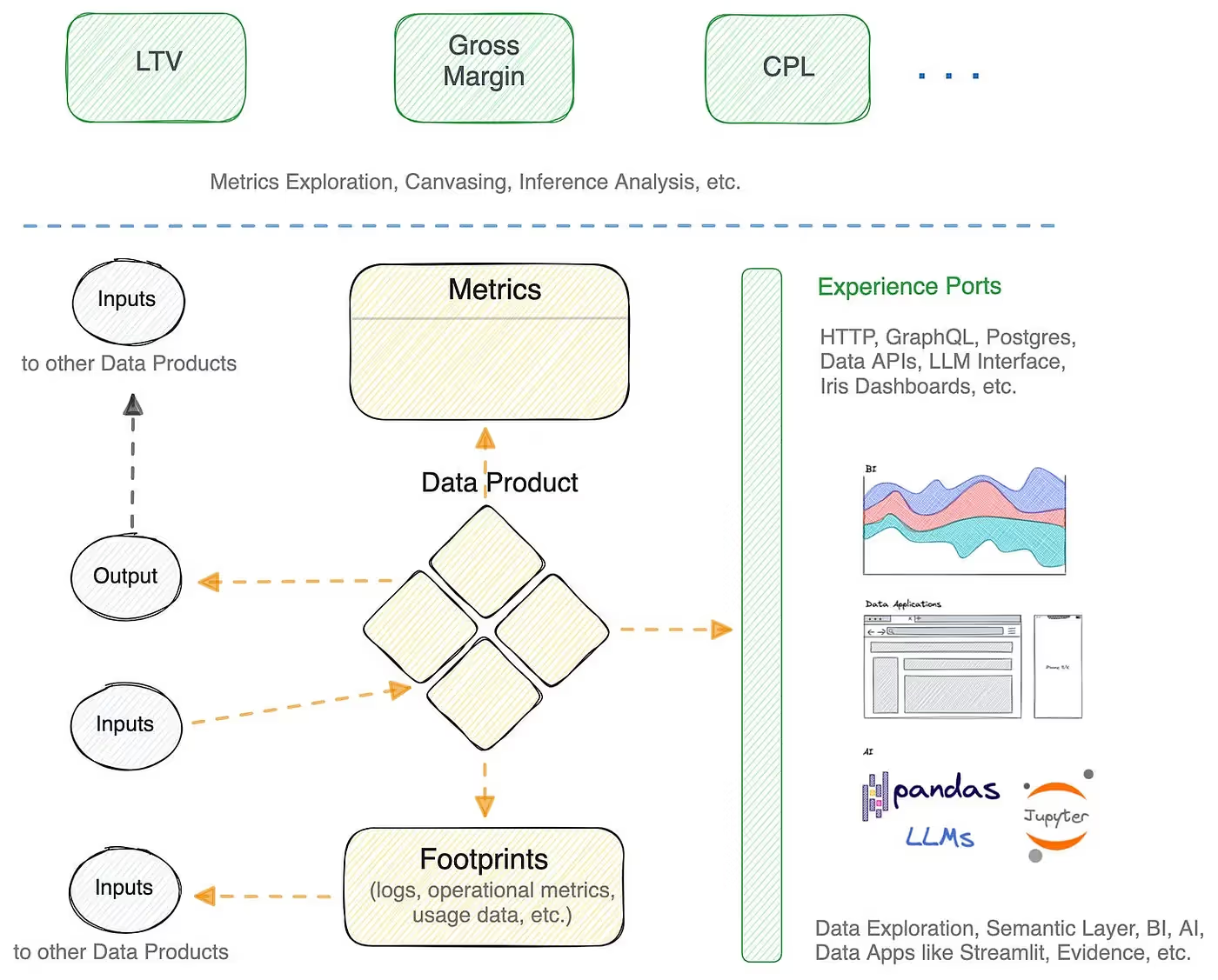

This is the most critical pivot away from legacy BI. Data Products are the antithesis of the static CSV export. They are governed, discoverable, and purpose-built APIs of insight. They package complex logic with baked-in quality and governance.
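As a rough illustration of what "baked-in quality and governance" could mean in practice, the sketch below models a Data Product as a small contract: a name, an owner, a schema, and quality checks that must pass before any rows are served. The `DataProduct` class, its fields, and the `customer_churn_risk` example are hypothetical and only meant to show the idea.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical data product contract: schema plus quality checks."""
    name: str
    owner: str
    schema: dict                      # column name -> expected Python type
    quality_checks: list = field(default_factory=list)  # (label, check) pairs

    def validate(self, rows):
        """Return a list of human-readable failures (empty means healthy)."""
        failures = []
        for i, row in enumerate(rows):
            for col, typ in self.schema.items():
                if col not in row or not isinstance(row[col], typ):
                    failures.append(f"row {i}: bad or missing '{col}'")
        if failures:
            return failures  # skip quality checks if the shape is wrong
        for label, check in self.quality_checks:
            if not check(rows):
                failures.append(f"check failed: {label}")
        return failures

    def serve(self, rows):
        """Only hand rows to consumers when the contract holds."""
        failures = self.validate(rows)
        if failures:
            raise ValueError("; ".join(failures))
        return rows


churn_risk = DataProduct(
    name="customer_churn_risk",       # assumed name, for illustration only
    owner="retention-team",
    schema={"customer_id": str, "churn_score": float},
    quality_checks=[
        ("scores within [0, 1]",
         lambda rows: all(0.0 <= r["churn_score"] <= 1.0 for r in rows)),
    ],
)

good = [{"customer_id": "c1", "churn_score": 0.2}]
bad = [{"customer_id": "c2", "churn_score": 1.7}]
print(churn_risk.validate(good))
print(churn_risk.validate(bad))
```

The design choice worth noticing is that consumers never see rows that fail the contract, which is what separates a Data Product from a CSV export that anyone can silently break.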

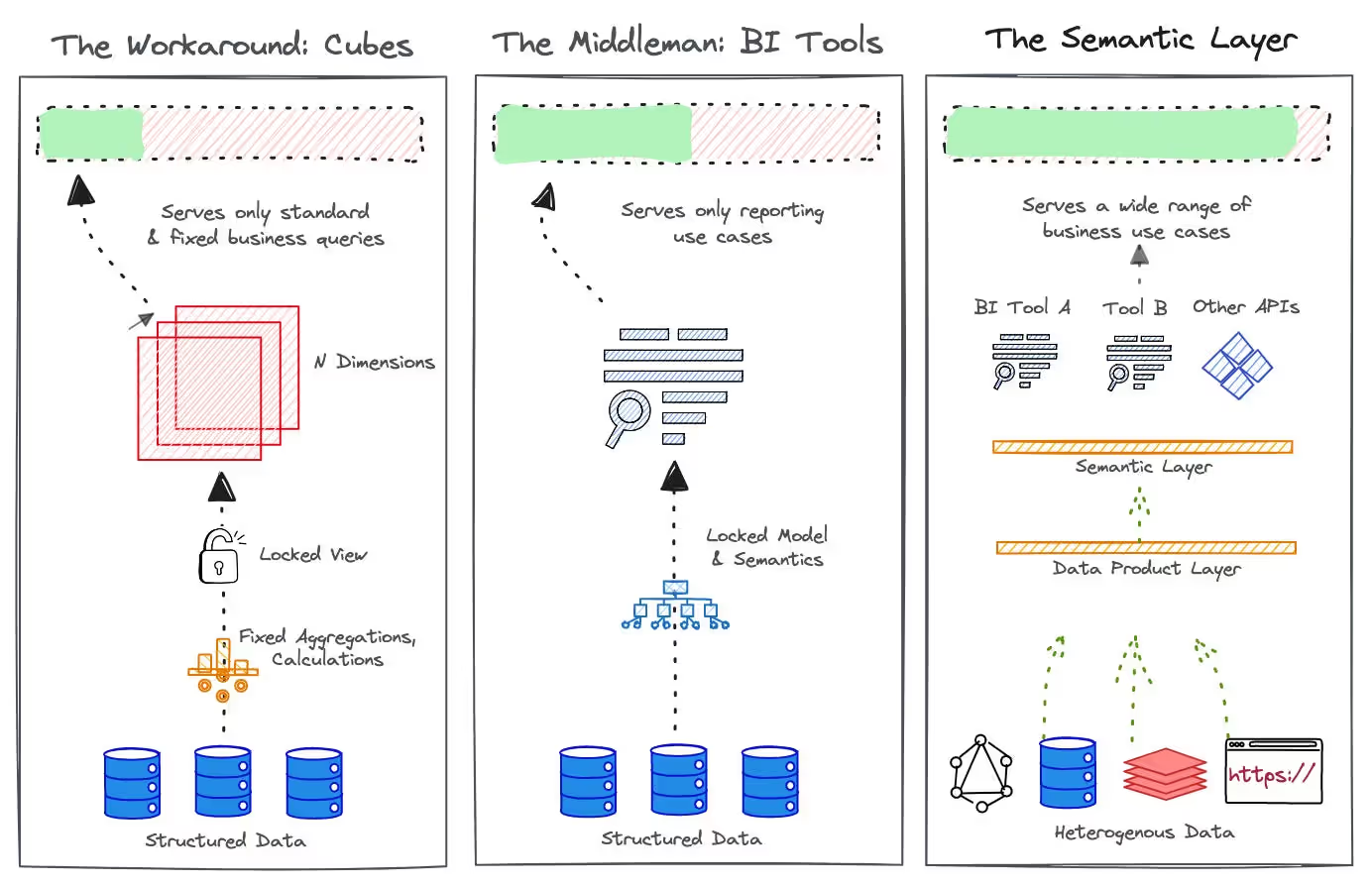

The Semantic Layer acts as the universal translator, ensuring that every tool and every agent in the stack uses the exact same definition for a key metric. This uniformity stops the eternal war over whose numbers are "right," thereby eliminating the primary friction point in AI and BI integration.
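One way to picture the "universal translator" is a registry where each metric is defined exactly once and every consumer compiles its query from that single definition. The sketch below assumes a hypothetical `METRICS` registry and `compile_metric` helper; the table and column names are invented for illustration, and real semantic layers are considerably richer.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    """A single, shared metric definition (hypothetical structure)."""
    name: str
    expression: str   # SQL aggregate expression
    source: str       # table or data product the metric reads from


# Assumed registry: the one place where "churn_rate" is defined.
METRICS = {
    "churn_rate": Metric(
        name="churn_rate",
        expression="SUM(churned) * 1.0 / COUNT(*)",
        source="customer_churn_risk",
    ),
}


def compile_metric(metric_name, group_by=()):
    """Build a SQL string for a metric, optionally grouped by dimensions."""
    metric = METRICS[metric_name]
    dims = ", ".join(group_by)
    select = f"{dims}, " if dims else ""
    sql = f"SELECT {select}{metric.expression} AS {metric.name} FROM {metric.source}"
    if dims:
        sql += f" GROUP BY {dims}"
    return sql


# A dashboard and an agent ask for different cuts of the same definition.
print(compile_metric("churn_rate"))
print(compile_metric("churn_rate", group_by=("region",)))
```

Because both callers go through the same `Metric`, a change to the churn formula lands everywhere at once, which is the property that ends the "whose numbers are right?" argument.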

This layer provides the machinery for the actual intelligence. It includes the infrastructure necessary to run LLMs and specialised AI agents. Crucially, this must be a composable AI infrastructure, allowing the organisation to plug in different models as needed, without disrupting the foundational data layer.
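A small sketch can show what "plug in different models as needed" might look like in code: the agent depends only on a narrow interface, so the model behind it can be swapped without touching the data layer. The `TextModel` protocol, the two toy models, and `SummaryAgent` are hypothetical stand-ins; a real deployment would wrap actual LLM clients behind the same interface.

```python
from typing import Protocol


class TextModel(Protocol):
    """Hypothetical minimal interface any pluggable model must satisfy."""
    def complete(self, prompt: str) -> str: ...


class EchoModel:
    """Toy stand-in for one model provider."""
    def complete(self, prompt: str) -> str:
        return f"[echo] {prompt}"


class UppercaseModel:
    """Toy stand-in for a different model provider."""
    def complete(self, prompt: str) -> str:
        return prompt.upper()


class SummaryAgent:
    """Agent that depends on the interface, not on a specific model."""
    def __init__(self, model: TextModel):
        self.model = model

    def summarise(self, metric: str, value: float) -> str:
        return self.model.complete(f"{metric} is {value}")


# Swapping the model requires no change to the agent or the data it reads.
print(SummaryAgent(EchoModel()).summarise("churn_rate", 0.12))
print(SummaryAgent(UppercaseModel()).summarise("churn_rate", 0.12))
```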

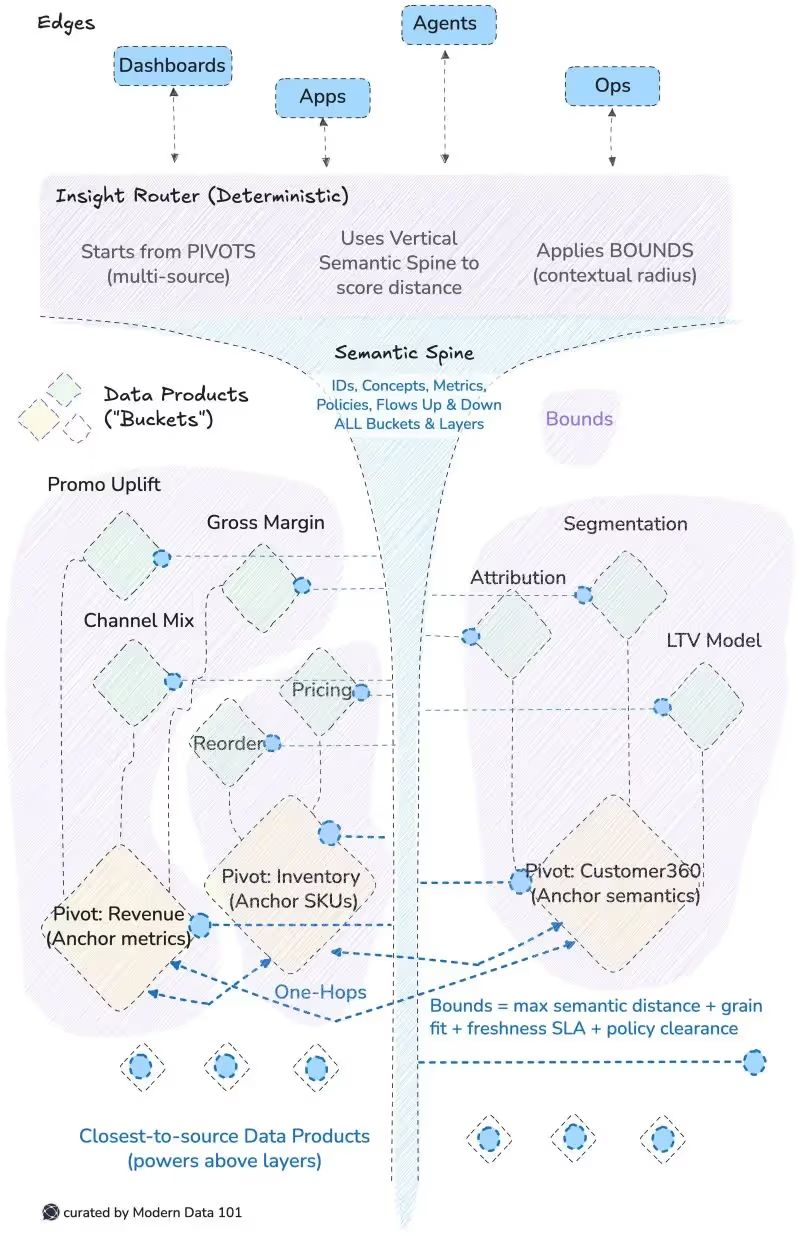

These are the digital employees that scale AI for business analytics. AI Agents are specialised, autonomous entities designed to perform proactive tasks using the reliable definitions delivered by the Data Products.

Example: Instead of a dashboard showing a high churn rate, an AI Agent monitors the "Churn Risk Data Product" in real-time and automatically triggers an intervention campaign in the CRM. These agents become the ultimate consumers of Data Products, turning passive reporting into proactive intelligence.
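The churn example above could be sketched roughly as follows. Everything here is an assumption made for illustration: the row shape, the 0.8 threshold, and the `trigger_campaign` callback, which stands in for whatever CRM integration an organisation actually uses.

```python
def run_churn_agent(churn_rows, trigger_campaign, threshold=0.8):
    """Scan rows from a churn-risk data product and trigger an intervention
    for every customer at or above the threshold (hypothetical agent loop).

    Returns the list of customer ids that were acted on."""
    triggered = []
    for row in churn_rows:
        if row["churn_score"] >= threshold:
            trigger_campaign(row["customer_id"])
            triggered.append(row["customer_id"])
    return triggered


# Fake CRM: records which customers would have received a campaign.
sent = []

rows = [
    {"customer_id": "c1", "churn_score": 0.35},
    {"customer_id": "c2", "churn_score": 0.91},
    {"customer_id": "c3", "churn_score": 0.79},
]

acted_on = run_churn_agent(rows, trigger_campaign=sent.append)
print(acted_on)
```

Passing the CRM call in as a function keeps the agent testable and makes the side effect explicit, which matters once agents start acting without a human in the loop.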

📝 Related Read

AI-Ready Data: A Technical Assessment ↗️

Governance becomes a transparent enablement layer instead of just gatekeeping assets. The Data Product Marketplace becomes the go-to interface where users (humans and AI agents) can discover, request access, and monitor the lineage of any Data Product.

This self-service model, paired with policy as code, ensures rapid access and usage while enforcing security and compliance boundaries automatically. This makes governance an enabler, not a bottleneck.
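As a minimal sketch of policy as code, the snippet below expresses access rules as plain data and enforces them in a single function, so humans and agents go through the same check. The `Policy` class, the role names, and the masking rule are hypothetical; dedicated policy engines offer far more, but the principle is the same.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical access policy for one data product."""
    allowed_roles: set
    masked_columns: dict = field(default_factory=dict)  # role -> columns to mask


# Assumed policy store, versioned alongside the data product definition.
POLICIES = {
    "customer_churn_risk": Policy(
        allowed_roles={"analyst", "retention_agent"},
        masked_columns={"retention_agent": {"email"}},
    ),
}


def read_row(role, product, row):
    """Return the row as this role may see it, or raise PermissionError."""
    policy = POLICIES.get(product)
    if policy is None or role not in policy.allowed_roles:
        raise PermissionError(f"{role} may not read {product}")
    masked = policy.masked_columns.get(role, set())
    return {col: ("***" if col in masked else val) for col, val in row.items()}


row = {"customer_id": "c1", "email": "a@example.com", "churn_score": 0.9}
print(read_row("analyst", "customer_churn_risk", row))
print(read_row("retention_agent", "customer_churn_risk", row))
```

Because the rules are data, they can be reviewed, tested, and deployed like any other code, which is what lets access be granted quickly without loosening the boundaries.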

Several concurrent architectural and functional shifts accelerate the push toward Lean AI:

Role of Data Products: Data Products standardise metrics and dimensions, which is a necessary precondition for AI. Without them, every AI agent would have to independently ingest raw, messy data, which is neither Lean nor scalable.

Explainable AI: As AI moves into decision-making, it becomes crucial to understand why the prediction was made. Explainable AI tools built into the BI stack provide the necessary transparency, allowing analysts to audit the agents' reasoning against the trusted Data Products, ensuring both trust and regulatory compliance.

The MCP Boost and Natural Language Query: The emergence of powerful, cost-effective Multimodal Chat Platforms is democratising access to data beyond the analyst. Natural language interfaces, fed by the precise definitions in the Semantic Layer, enable business users to ask complex business questions in plain English and get instant, governed answers. This dramatically increases BI adoption and usage across the entire enterprise.

Lakehouse 2.0 Integration: The unification of data lake and warehouse capabilities provides the necessary composable, high-performance, and unified foundation for both traditional BI queries and AI model training on the same data. The unified support is essential to AI for business analytics, enabling tightly integrated and loosely coupled pairs of AI Agents and Infrastructure primitives.

BI Portability (One Logic, Multiple Projections): The Semantic Layer and Data Products ensure that the core logic is defined only once. AI in BI workflows depends heavily on this reusability and reproducibility. Whether the user accesses the insight via a traditional dashboard, a mobile app, or an AI agent, the calculation is identical. This removes the "whose numbers are right?" debate and drastically improves consistency.

Evolution of the "BI Analyst": The analyst's job is transforming from manual dashboard creation to AI oversight and model interpretation. They become the bridge between the autonomous AI agents and the business user, focusing on the Why and the What Next rather than the What Happened. (A job description that actually sounds interesting for a change.)

The BI Stack of 2026 is a fundamental architectural overhaul. Lean AI recognises that small, reliable and reusable Data Products lay the foundation for impactful intelligence.

By embracing composable data infrastructure, the Semantic Layer, and proactive AI Agents, organisations can turn their dashboards from passive reports into decisive, forward-looking intelligence.

Q1. Are companies actually using AI inside their BI tools?

Yes, but unevenly and often superficially. Most enterprises have AI-adjacent BI, not AI-native BI. They’ve bolted on assistants, auto-insights, or natural language queries, but stitching models into decision workflows is still rare. The reality is that companies aren’t lacking AI features; they are lacking the data precision, semantic clarity, and trust required to let AI do more than decorate dashboards.

Q2. What can AI realistically improve in a business intelligence platform today?

AI brings leverage. Its real contribution to BI isn’t “automated dashboards,” but compressing the distance between question and action.

AI can surface anomalies before humans notice, explain patterns without analysts hand-holding the narrative, and personalise insights to each user’s role. But its highest value is strategic: it turns BI from a rear-view mirror into a forward-leaning decision engine.

Q3. Does low data maturity slow down meaningful AI adoption?

Absolutely. Low data maturity is the tax on every AI vision. When data is fragmented, undocumented, or contradictory, AI becomes performative instead of productive. You can’t expect intelligence from an environment that doesn’t yet understand itself. Real AI integration needs clean pipelines, shared semantics, and productised data. Otherwise your models become polished wrappers around organisational entropy. Data maturity is the foundation that lets AI think clearly and act confidently.


News, Views & Conversations about Big Data, and Tech

