Have you ever felt like your organisation is caught up in the swirl of an AI arms race? All around, teams are piloting powerful AI projects, from sophisticated recommendation engines to predictive analytics, each promising to revolutionise operations.

But while the initial excitement is real, taking AI from clever experiments to robust, large-scale deployment is a whole different game. You might find yourself tripping over data issues time and again, as that first flush of AI sparkle starts to fade.

Yes, this time we want to talk about the unsung hero: Data Modelling.

It isn’t just a niche concern tucked away in the IT department. Today, it is the core blueprint upon which intelligent, scalable AI gets built. In the age of Machine Learning, Data Modelling means more than drawing up schemas. It’s about understanding what your data actually means, how it flows, and ensuring it’s positioned for powerful, flexible use by models, not just today but as your use cases grow.

Think of your data as the critical infrastructure of the business: the roads and bridges connecting everything you do. In this analogy, Data Modelling is your architectural plan. Get it right, and your data flows efficiently, supporting fast, insightful AI. Get it wrong, and your foundations crack under the weight.

Traditional Business Intelligence focused on aggregating information for dashboards and reports. AI and Machine Learning, though, put very different demands on your data: they thrive on highly granular, contextualised inputs, and care about detail and signal more than neat summaries. If your data model doesn’t match what AI needs, you risk making the same transformation, integration, or data-cleaning effort over and over for each new model or project. That’s when “model drift,” unreliable pipelines, and fragile ops become the norm.

Beyond performance, solid data modelling is crucial for explainable, compliant, reusable AI. Teams can trace why a prediction was made, protect sensitive information, and reapply learnings across different products with confidence.

The journey to scaled AI is littered with good intentions gone awry through suboptimal models. The most common traps?

😉 Think of it as building a fancy AI skyscraper on a foundation of quicksand. That's precisely what happens with traditional, ill-suited data models. You might have the latest algorithms, but if your data is a tangled mess, your AI will probably make poor predictions and add to the mess.

So how do you move from pitfalls to best-in-class? It’s a shift in both mindset and process.

Model data for AI use cases from the get-go. The use case could be an AI application, an agent, an ecosystem of AI agents, or a simpler ML model. Data should be purpose-driven and modelled (productised) for the niche case it serves. It may borrow from common heavyweight data models like Customer 360, but a data model for, say, marketing campaign acceleration would carry the specific measures, fields, and SLOs demanded by the marketing app or the AI agent running it.
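As a rough illustration of the marketing example above, a purpose-driven model might declare its own fields, measures, and SLOs while borrowing from a shared model. Everything named here (`DataProductSpec`, `campaign_acceleration`, the field names, the SLO targets) is hypothetical and only sketches the idea.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProductSpec:
    """Hypothetical spec for a use-case-specific data model."""
    name: str
    consumer: str                   # the AI app or agent this model serves
    borrows_from: tuple[str, ...]   # shared models it reuses, e.g. Customer 360
    fields: tuple[str, ...]
    measures: dict[str, str]        # measure name -> plain-language definition
    slos: dict[str, float]          # SLO name -> target value


campaign_acceleration = DataProductSpec(
    name="campaign_acceleration",
    consumer="marketing_campaign_agent",
    borrows_from=("customer_360",),
    fields=("customer_id", "campaign_id", "channel", "touch_timestamp"),
    measures={
        "conversion_rate": "conversions divided by delivered touches",
        "time_to_first_response_hours": "hours from first touch to first response",
    },
    slos={"freshness_minutes": 30.0, "completeness_pct": 99.0},
)


def unmet_slos(spec: DataProductSpec, observed: dict[str, float]) -> list[str]:
    """Return the SLO names whose observed value misses the target.

    Assumption for this sketch: names ending in '_minutes' are upper
    bounds, everything else is a lower bound. A missing observation
    counts as a miss.
    """
    missed = []
    for slo, target in spec.slos.items():
        value = observed.get(slo)
        if value is None:
            missed.append(slo)
        elif slo.endswith("_minutes") and value > target:
            missed.append(slo)
        elif not slo.endswith("_minutes") and value < target:
            missed.append(slo)
    return missed
```

Calling `unmet_slos(campaign_acceleration, {"freshness_minutes": 45.0, "completeness_pct": 99.5})` would flag only the freshness target, because the data is 45 minutes old against a 30-minute SLO.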

Don’t treat data as an afterthought. Plan for AI consumption from the beginning. Shape your data products with an eye on what models will need, considering granularity, relationships, and semantic clarity before the data even hits your platform. Think deeply about meaning, not just format, and set up consistent versioning so models don’t break when sources evolve.
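One way to picture consistent versioning is a check that compares the columns a model consumes against a changed source schema, and bumps the major version only when something the model depends on is removed or retyped. This is a minimal sketch under that assumption; the `major.minor` scheme, the function names, and the example schemas are all hypothetical.

```python
def breaking_changes(consumed: dict[str, str], new_schema: dict[str, str]) -> list[str]:
    """List the ways new_schema breaks the columns a model consumes."""
    problems = []
    for column, dtype in consumed.items():
        if column not in new_schema:
            problems.append(f"{column}: removed")
        elif new_schema[column] != dtype:
            problems.append(f"{column}: type changed from {dtype} to {new_schema[column]}")
    return problems


def next_version(current: str, consumed: dict[str, str], new_schema: dict[str, str]) -> str:
    """Bump major on a breaking change, minor otherwise ('major.minor' strings)."""
    major, minor = (int(part) for part in current.split("."))
    if breaking_changes(consumed, new_schema):
        return f"{major + 1}.0"
    return f"{major}.{minor + 1}"


# Columns the model reads, with their expected types (illustrative values).
consumed = {"customer_id": "string", "amount": "decimal"}

# An additive change keeps every consumed column intact.
additive = {"customer_id": "string", "amount": "decimal", "currency": "string"}

# A retyped column is a breaking change for the model.
retyped = {"customer_id": "string", "amount": "float"}
```

With these inputs, the additive schema would move version `1.3` to `1.4`, while the retyped one would move it to `2.0`, signalling to model owners that they need to act before upgrading.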

This is what a model-first approach to data translates into: right-to-left data development, instead of the traditional left-to-right path where data is extracted from the sources, whatever is available gets processed, and the team takes it from there (think medallion).

When you think model-first, you model the use case requirements first and then work only on those requirements and the segment of data processing they need. This enables far more focused, purpose-driven workflows and resource use. It also implies significant cost savings for AI-focused workloads, where resources are self-served and unassigned at scale.
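The right-to-left idea can be sketched as starting from the fields a use case asks for and walking back to the source columns that actually need processing. The lineage map, table names, and column names below are invented for illustration; a real platform would read this from its catalogue.

```python
# Hypothetical lineage: output field -> upstream (source_table, column) pairs.
LINEAGE = {
    "conversion_rate": [("touches", "delivered"), ("orders", "converted")],
    "channel": [("touches", "channel")],
    "customer_segment": [("crm_accounts", "segment")],
    "lifetime_value": [("orders", "amount"), ("crm_accounts", "signup_date")],
}


def required_sources(use_case_fields: list[str]) -> dict[str, set[str]]:
    """Work backwards from the use case to the source columns it needs."""
    needed: dict[str, set[str]] = {}
    for field_name in use_case_fields:
        for table, column in LINEAGE[field_name]:
            needed.setdefault(table, set()).add(column)
    return needed


# The marketing use case only asks for two fields, so only two source
# tables (and three columns) need to be extracted and processed.
plan = required_sources(["conversion_rate", "channel"])
```

Here `crm_accounts` never enters the plan, which is the cost argument in miniature: nothing is processed unless a modelled requirement asks for it.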

Data without context is just noise. Capture where your data comes from, its business definition, and any key assumptions right inside your models. This makes it possible for data scientists to understand, monitor, and explain results and not just build black boxes.
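A small sketch of what capturing context inside the model could look like: each field carries its source, business definition, and assumptions, and a check flags fields that arrive without them. The `FieldContext` class and the sample fields are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FieldContext:
    """Context recorded alongside a field in the data model."""
    name: str
    source: str                        # where the value comes from
    definition: str                    # what it means to the business
    assumptions: tuple[str, ...] = ()  # caveats a data scientist should know


def missing_context(fields: list[FieldContext]) -> list[str]:
    """Return the names of fields that lack a source or a definition."""
    return [
        f.name
        for f in fields
        if not f.source.strip() or not f.definition.strip()
    ]


model_fields = [
    FieldContext(
        name="churn_flag",
        source="billing.subscriptions",
        definition="Customer cancelled and did not renew within 30 days",
        assumptions=("Paused subscriptions are not counted as churn",),
    ),
    FieldContext(name="score_v2", source="", definition=""),
]
```

Running `missing_context(model_fields)` would surface `score_v2`, the kind of field that otherwise ends up feeding a black box.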

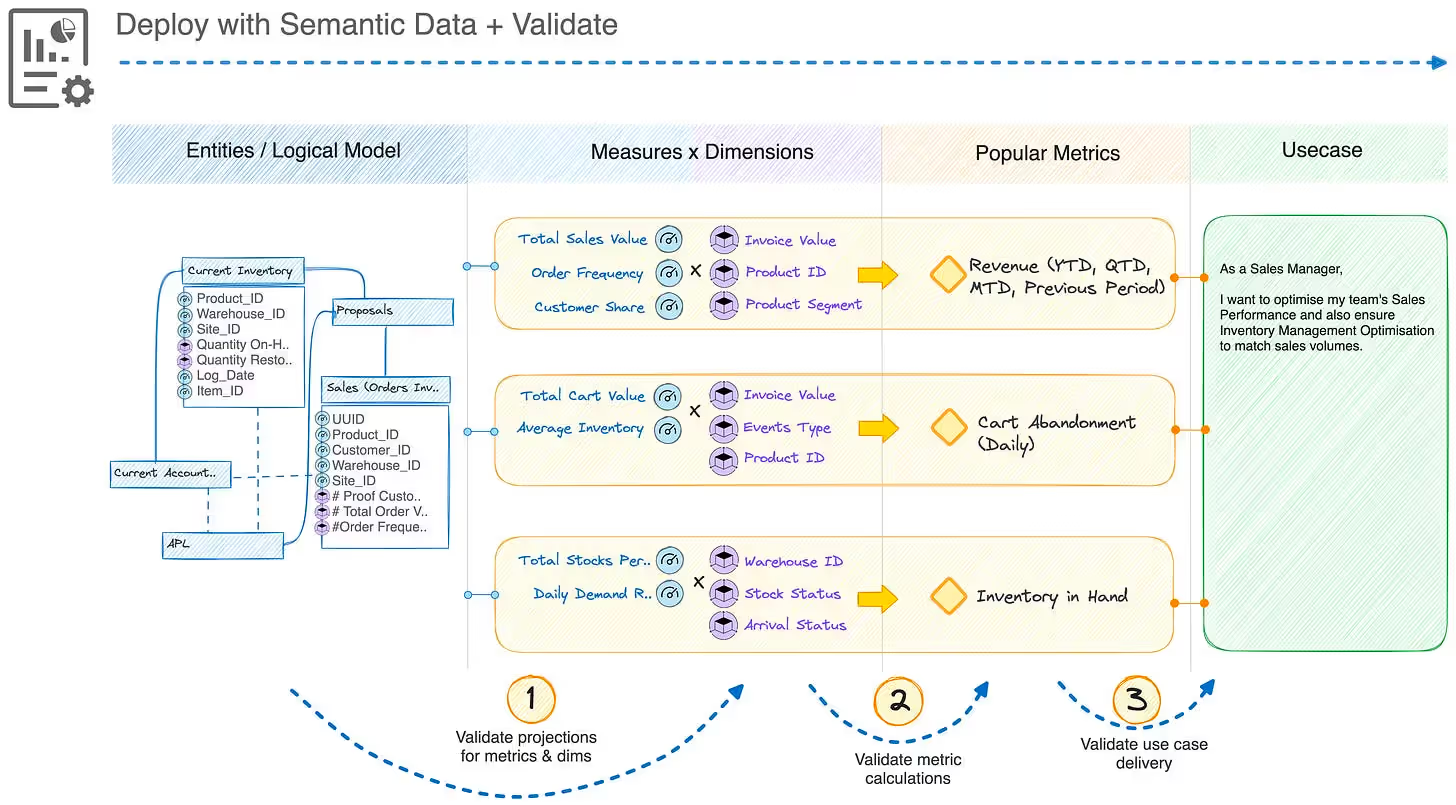

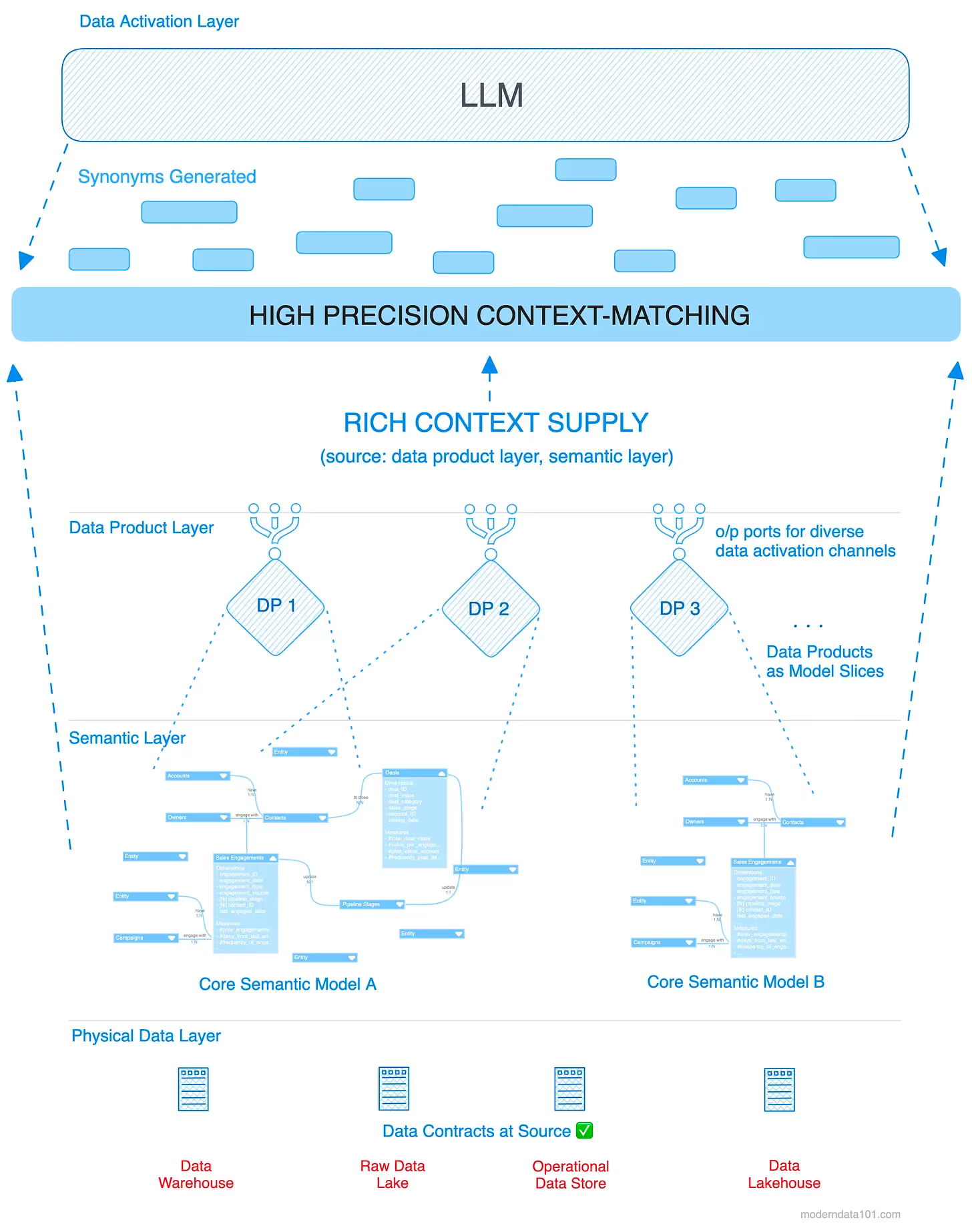

The semantic layer acts as a centralised plane where the entities, measures, dimensions, metrics, and relationships tailored to business purposes are defined. With a semantic layer in place, the LLM works from pre-defined contextual models, so it can project data with contextual understanding and even handle novel business queries.

Instead of misinterpreted entities or measures, the LLM now knows exactly what table to query and what each field means, along with value-context maps for coded values.
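To make this concrete, here is a minimal sketch of a semantic model being rendered into plain-text context that could be placed in an LLM prompt. The structure of `SEMANTIC_MODEL`, the table name, and the status codes are assumptions made for the example, not the format of any particular semantic layer product.

```python
# Hypothetical semantic model for one entity.
SEMANTIC_MODEL = {
    "entity": "order",
    "table": "sales.orders",
    "dimensions": {"status": "Current fulfilment state of the order"},
    "measures": {"net_revenue": "SUM(amount - discount)"},
    # Value-context map: what each coded value actually means.
    "value_maps": {"status": {"P": "pending", "S": "shipped", "R": "returned"}},
}


def render_context(model: dict) -> str:
    """Turn a semantic model into sentences an LLM can use as context."""
    lines = [f"Entity '{model['entity']}' is stored in table {model['table']}."]
    for name, description in model["dimensions"].items():
        lines.append(f"Dimension {name}: {description}.")
        codes = model["value_maps"].get(name)
        if codes:
            pairs = ", ".join(f"{code} = {meaning}" for code, meaning in codes.items())
            lines.append(f"Coded values for {name}: {pairs}.")
    for name, expression in model["measures"].items():
        lines.append(f"Measure {name} is computed as {expression}.")
    return "\n".join(lines)


context = render_context(SEMANTIC_MODEL)
```

The rendered text names the table to query, defines each field, and spells out that `S` means shipped, so a question like "revenue from shipped orders" no longer depends on the model guessing what the codes stand for.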

When every pipeline contains its own version of feature engineering logic, teams end up reinventing the wheel in dozens of places. Standardised, modular feature stores let you build features once and share them across teams, streamlining development and bolstering reliability. Big tech firms like Netflix or Uber lean heavily on this principle to stay agile.
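The build-once, share-everywhere idea can be sketched with a tiny in-memory registry. This is not the API of any real feature store; `FeatureRegistry` and the two features are hypothetical, and a production store would also handle storage, backfills, and serving.

```python
from typing import Callable


class FeatureRegistry:
    """Minimal in-memory stand-in for a shared feature store."""

    def __init__(self) -> None:
        self._features: dict[str, Callable[[dict], float]] = {}

    def register(self, name: str):
        """Decorator that registers a feature; duplicate names are rejected."""
        def decorator(fn: Callable[[dict], float]):
            if name in self._features:
                raise ValueError(f"feature '{name}' is already defined")
            self._features[name] = fn
            return fn
        return decorator

    def compute(self, names: list[str], record: dict) -> dict[str, float]:
        """Compute the requested features for one record."""
        return {name: self._features[name](record) for name in names}


registry = FeatureRegistry()


@registry.register("avg_order_value")
def avg_order_value(record: dict) -> float:
    orders = record["order_amounts"]
    return sum(orders) / len(orders) if orders else 0.0


@registry.register("order_count")
def order_count(record: dict) -> float:
    return float(len(record["order_amounts"]))


customer = {"order_amounts": [20.0, 40.0]}

# Two different pipelines ask for features by name and get the same logic.
churn_features = registry.compute(["avg_order_value", "order_count"], customer)
upsell_features = registry.compute(["avg_order_value"], customer)
```

Rejecting a second definition of the same name is the design choice that matters here: it forces teams to reuse or explicitly rename a feature instead of quietly forking its logic.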

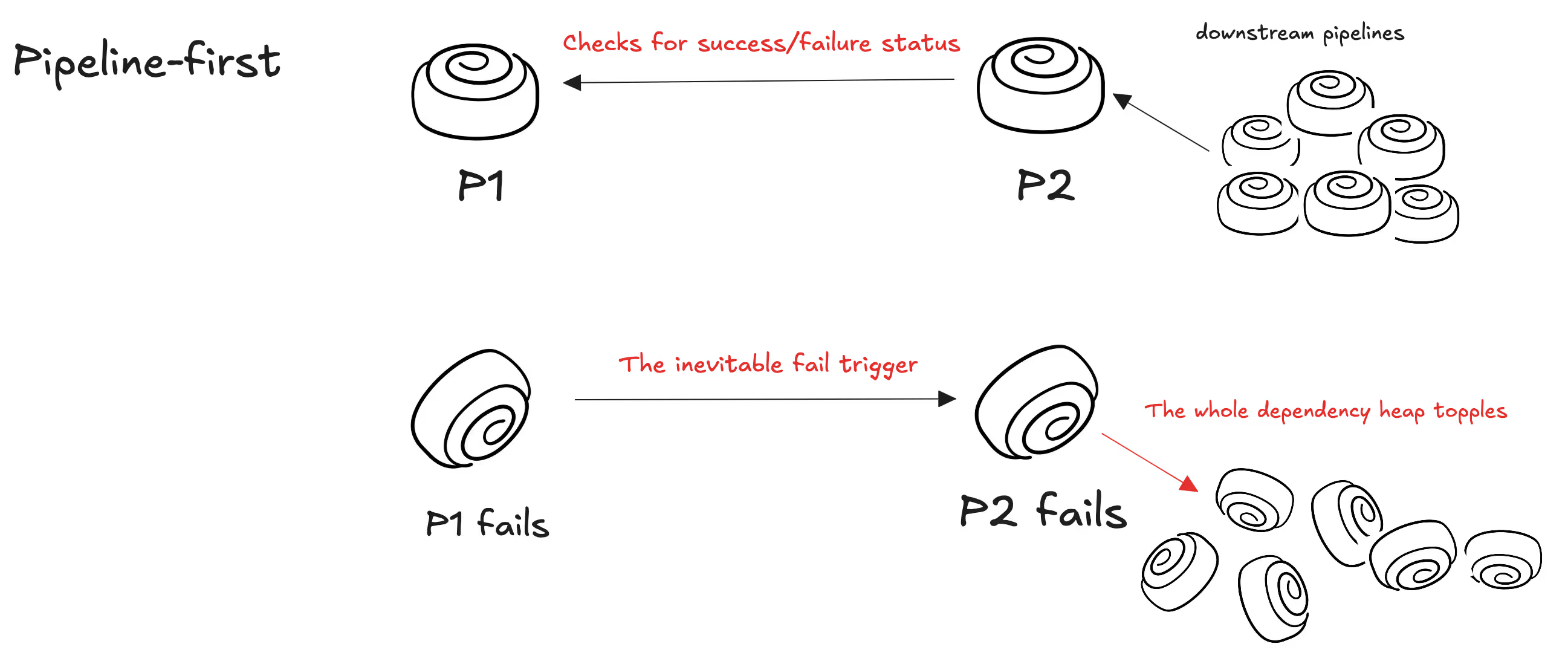

In pipeline-first, the failure of P1 implies the inevitable failure of P2, P3, P4, and so on…

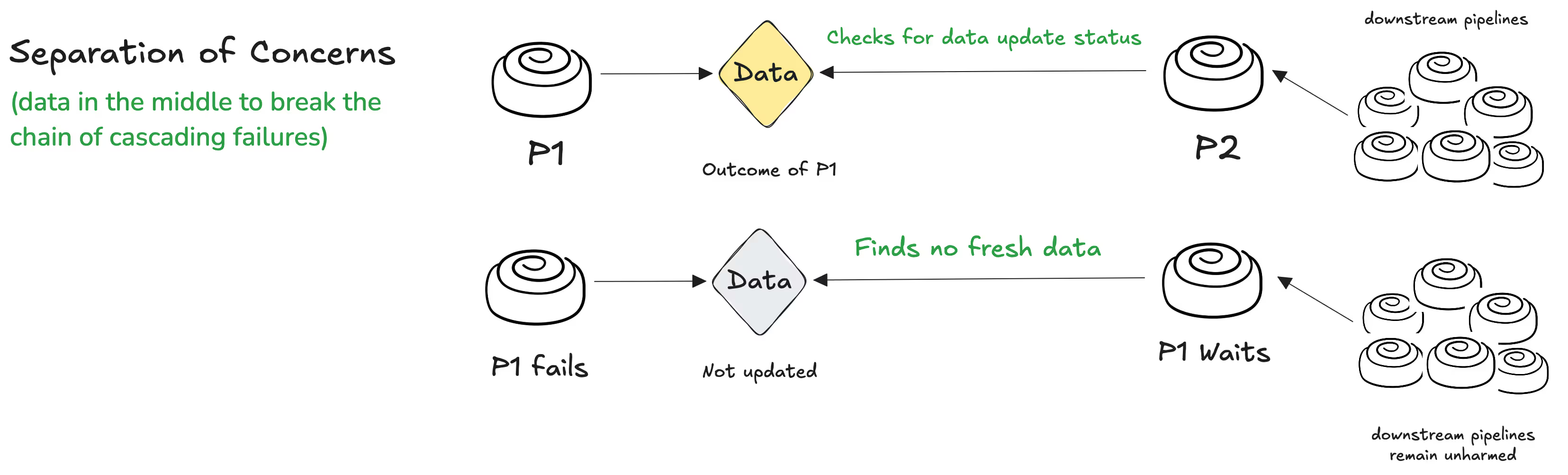

In data-first, P2 doesn’t fail on the failure of an upstream pipeline, but instead checks the freshness of the output from upstream pipelines.
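The data-first behaviour described above can be sketched as a freshness gate: before running, a pipeline inspects when each upstream output was last updated rather than whether the upstream job succeeded. The function names, dataset names, and the one-hour threshold are assumptions for illustration.

```python
from datetime import datetime, timedelta, timezone


def is_fresh(last_updated: datetime, max_age: timedelta, now: datetime) -> bool:
    """An output is fresh if it was updated within max_age of now."""
    return now - last_updated <= max_age


def should_run(
    inputs: dict[str, datetime], max_age: timedelta, now: datetime
) -> tuple[bool, list[str]]:
    """Decide whether a pipeline can run, and report any stale inputs."""
    stale = [name for name, ts in inputs.items() if not is_fresh(ts, max_age, now)]
    return (not stale, stale)


# Fixed clock so the example is deterministic.
now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)

# Last-updated timestamps of the upstream outputs that P2 reads.
p2_inputs = {
    "orders": now - timedelta(minutes=20),
    "customers": now - timedelta(hours=5),
}

ok, stale = should_run(p2_inputs, max_age=timedelta(hours=1), now=now)
```

In this run `ok` is false and `stale` names `customers`, so P2 can wait or fall back for that one input, even if the pipeline producing `orders` has since failed.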

Don’t fall into the one-dataset-per-model trap. Think in terms of feature “primitives”: base units that can be recombined for many AI use cases. Build up canonical vocabularies and data models so everyone understands what a “customer” is, or how an “event” is defined, regardless of project or team. That shared language accelerates integration and boosts trust.

How reusability is driven at scale:

Most data platforms fragment as they scale. Every new use case creates more drift, not more alignment.

**Data Developer Platform (DDP) inverts that pattern.** Every new product adds structure. Every model becomes reusable.

This is where platform leverage shows up: in shared language. DDP’s semantic spine turns internal reuse into network effects. Strategic levers:

Treat your models as living things that evolve with your business. Bake observability and monitoring into your data flows: track usage across experiments, monitor for drift or anomalies, and collect feedback. Continuous oversight keeps your models healthy and your AI responsive to real-world change.
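One simple way to monitor for drift is to compare the distribution of a feature in current data against a baseline. The sketch below uses the Population Stability Index (PSI) as an example metric; the choice of PSI, the ten equal-width bins, and the sample data are assumptions here, not something the approach above prescribes.

```python
import math


def psi(expected: list[float], actual: list[float], bins: int = 10) -> float:
    """Population Stability Index between a baseline and a current sample.

    Bins are equal-width over the baseline's range; values outside that
    range are clipped into the first or last bin. Both inputs must be
    non-empty.
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # avoid zero width for constant data

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [float(v) for v in range(100)]
shifted = [v + 50.0 for v in baseline]

no_drift = psi(baseline, baseline)
drift = psi(baseline, shifted)
```

A commonly quoted rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating; the right threshold for a given feature is something each team would need to calibrate.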

😉 Think of metadata as your data’s ultra-detailed personal profile. Without it, AI models (and data scientists) are left guessing about what values actually mean, risking confusion and mistakes. Context matters, especially if you want intelligence and not just automation.

Even the best data practices need people and processes to make them stick. Assign clear ownership roles for the health and quality of specific data models; this creates accountability and ensures ongoing investment in your most important assets. Foster close collaboration between engineers, AI specialists, and domain experts. It’s everyone’s job to ensure your data models truly represent the business and its goals.

It’s tempting to focus on the shiny advances in AI and Machine Learning, but as organisations like Gartner and Forrester point out, sustainable scale always comes back to the basics: data infrastructure and modelling.

Nail these fundamentals, and you’ll accelerate every downstream AI project, not just this year but into the future too.

Teams that commit to strong data modelling practices build faster, safer, and more trustworthy AI. They reduce technical debt, encourage innovation, and get more from every dollar spent on Artificial Intelligence. If you want AI that grows with you, start by rethinking your foundation. Your data models might be the most transformative investment you make.

Shreya is Senior Analytics Engineer at Modern, where she gets to blend her love for data with building meaningful models that drive decisions. She is a data nerd through and through — always excited to explore, analyse, and make sense of complex information. With a strong background in data engineering, she enjoys creating scalable and reliable data solutions.
