
Generative AI has taken off across industries. How to put its capabilities to work is a question being asked with equal urgency in boardrooms and on production and manufacturing floors. That is no surprise: worldwide spending on GenAI is expected to reach $644 billion this year (2025).

Multiple Gartner surveys and polls paint a promising picture: 1 in 5 organisations already have GenAI solutions in production. After all, who would not want a competitive edge through reimagined workflows? Yet while interest remains high, execution often suffers because teams lack clarity about what they are actually working with.

Enterprises are by no means short on ideas. What is missing is a path from experimentation to real-world impact. Open questions about data readiness, risk, governance, and scalability keep surfacing, and efforts remain trapped in silos, disconnected from core business functions.

In this article, we go beyond the hype to understand what it actually takes to implement Generative AI responsibly and at scale.

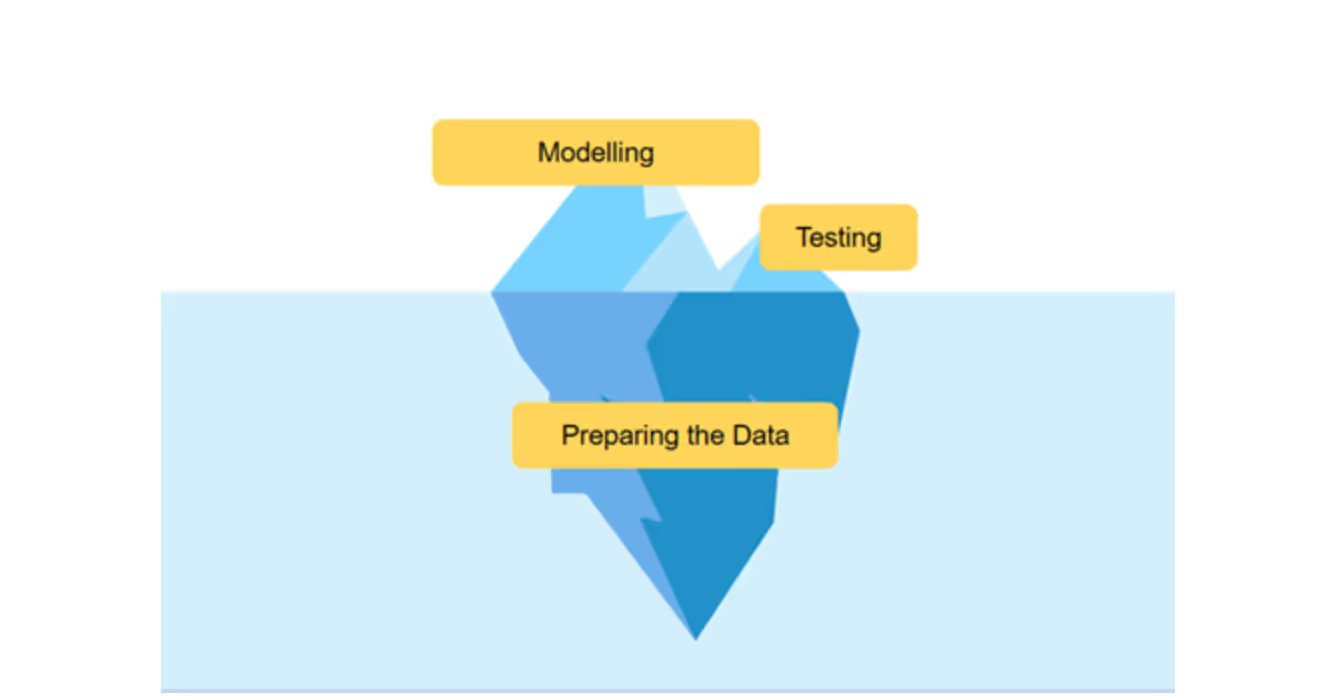

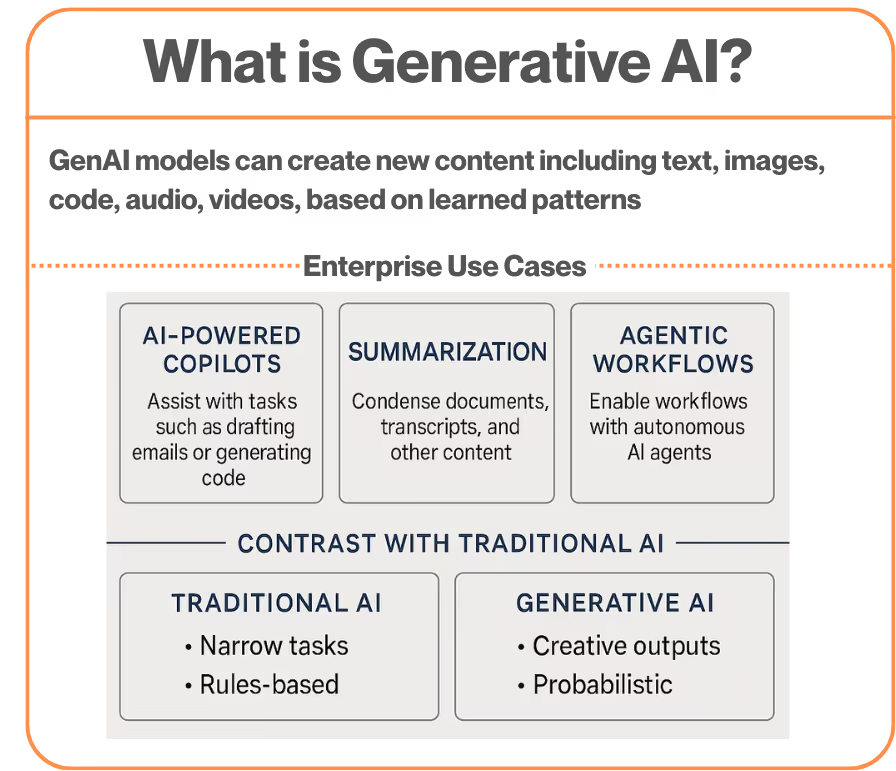

In simple terms, GenAI is a category of AI models designed not only to analyse data but also to generate new content, such as text, images, video, and code, based on patterns learned from training data. Compared with traditional AI models, they can produce far more human-like output, simulating creativity, language, and reasoning to significant effect.

For enterprises, this opens up a new range of use cases. AI copilots can help teams generate reports, draft emails, or suggest code in real time, raising productivity across the board. AI-powered summarisation tools can turn complex documents, research papers, and meeting minutes into concise, insight-rich summaries.

More advanced use cases include agentic workflows, where AI agents interact with other systems and APIs autonomously to complete tasks such as onboarding new users, managing support tickets, and even tracking inventory requirements.

One of GenAI's most significant differentiators is its ability to turn unstructured content into a strategic asset, and to do so contextually, conversationally, and at scale. It transforms how organisations process information, run internal operations, and handle customer interactions, ushering in a new era of digital empowerment.

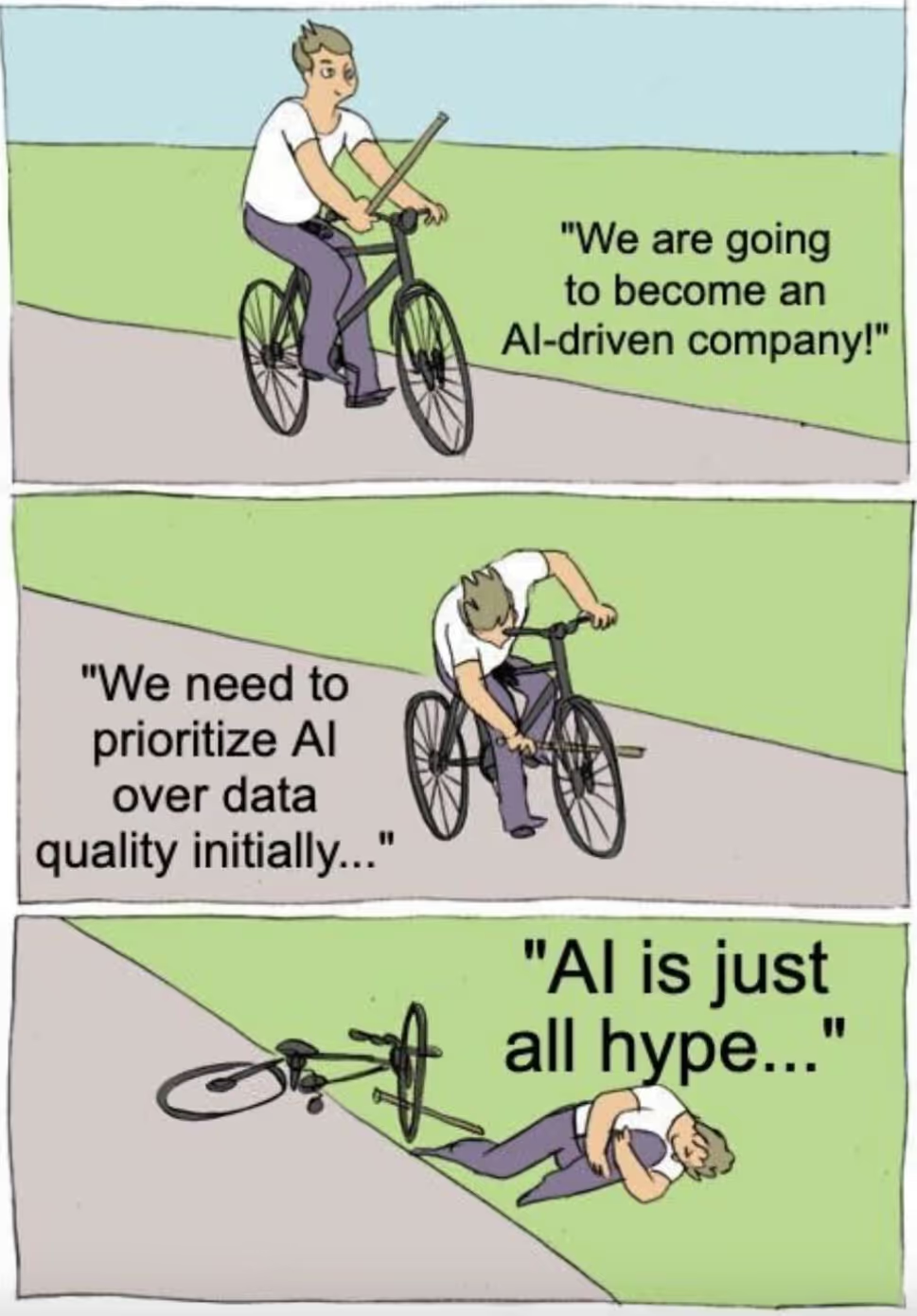

When it comes to production-grade implementation, many enterprises still struggle to move beyond the GenAI hype and turn it into something meaningful. Interestingly, this has less to do with technical capability than with fundamental gaps in infrastructure and AI adoption strategy.

The lack of usable data is one of the biggest obstacles to leveraging generative AI in the enterprise: most enterprise data is incomplete, unstructured, and scattered across systems without proper documentation or quality controls.

Below are a few major areas of concern that hinder effective organisational workflows:

Business stakeholders, data engineers, and ML practitioners often operate in isolation, which makes smooth cross-functional collaboration difficult and slows down iteration cycles.

More often than not, teams are overwhelmed by a scattered landscape of APIs, platforms, and GenAI tools that may or may not serve the core purpose. The result is poor integration, unclear ownership, and duplication across the entire data stack.


New GenAI tools and platforms keep emerging, confronting enterprises with hard choices. The result is stalled decisions and high switching costs, which dampen long-term planning.

Many initiatives remain stuck in the experimental phase, with no clear pathway to scale, run, and maintain models effectively in production. The journey from POC to production becomes a difficult one.

The absence of embedded governance, consent frameworks, and explainability makes it very difficult to deploy AI responsibly, especially in regulated industries.

These challenges are significant, but a closer look reveals a deeper gap: the absence of platform thinking. Without a unified approach that productises data and supports AI enablement at scale, every GenAI effort will stay confined to its own silo.

Implementing Generative AI requires clear intent, disciplined execution, and strategic alignment. Many enterprises get swept up in the AI hype but fail to step back and consider how to safeguard their relatively large AI investments.

There are five important principles that enterprises need to be mindful of:

GenAI can’t be treated as an isolated “AI project” owned by a single technical team. Success depends on early alignment between data teams, AI/ML teams, IT, security, compliance, and business owners. Without this, mismatched priorities and late-stage roadblocks are inevitable.

A product mindset from Day 1 changes the game. Assign product ownership to leaders who can prioritise features, balance technical trade-offs with business needs, and keep delivery on track. Bring in domain experts at the data preparation and model design stages to ensure the system is grounded in real-world business context and not just technical assumptions.

Enterprises often start GenAI initiatives chasing novelty instead of value. The result? Impressive demos that never make it into production. The better approach is to anchor every GenAI effort in ROI-driven problems like customer operations bottlenecks, high-cost manual processes, marketing personalisation at scale, or compliance-heavy workflows. These are areas where GenAI can create a measurable impact fast.

Equally important, choose use cases that can grow with access to structured, trusted, and explainable data. A great model on weak data is like a luxury car on a dirt road: it looks powerful but delivers a bumpy, unreliable ride. By aligning early with problems that depend on strong data foundations, you set yourself up for scalable wins rather than one-off successes.

The trap many enterprises fall into is over-engineering their first GenAI deployment. Instead, start small. Launch focused pilots that can prove measurable value in weeks, not months. This keeps momentum high and gives stakeholders tangible proof that GenAI delivers beyond hype.

Every pilot should have clearly defined success metrics, such as reduced processing time, improved model accuracy, or increased customer satisfaction. These early wins aren’t just proof of concept; they’re proof of value. They also help refine both your data pipelines and your model strategies before you invest heavily in scale.

Scaling GenAI without guardrails is like scaling manufacturing without quality control; you’ll ship more, but you’ll also ship more mistakes. From Day 1, build in governance, security, and repeatability. Keep human-in-the-loop processes for sensitive outputs until the system proves itself.
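To make the human-in-the-loop advice concrete, here is a minimal Python sketch. It assumes a hypothetical `Draft` record carrying a confidence score from some upstream evaluator, and a hypothetical set of sensitive topics; real deployments would classify sensitivity far more carefully. Drafts that touch a sensitive topic or fall below a confidence threshold are queued for human review instead of being released automatically.

```python
from dataclasses import dataclass, field

# Hypothetical set of topics treated as sensitive (an illustrative assumption).
SENSITIVE_TOPICS = {"pricing", "legal", "medical", "personal data"}


@dataclass
class Draft:
    """A model-generated output awaiting release (hypothetical structure)."""
    text: str
    topics: set[str]
    confidence: float  # assumed score in [0, 1] from an upstream evaluator


@dataclass
class ReviewGate:
    """Routes sensitive or low-confidence drafts to a human review queue."""
    min_confidence: float = 0.8
    review_queue: list[Draft] = field(default_factory=list)
    released: list[Draft] = field(default_factory=list)

    def submit(self, draft: Draft) -> str:
        # A draft needs review if it touches any sensitive topic
        # or if the evaluator is not confident enough in it.
        touches_sensitive = bool(draft.topics & SENSITIVE_TOPICS)
        low_confidence = draft.confidence < self.min_confidence
        if touches_sensitive or low_confidence:
            self.review_queue.append(draft)
            return "queued_for_review"
        self.released.append(draft)
        return "released"


gate = ReviewGate()
print(gate.submit(Draft("Q3 summary for the team", {"reporting"}, 0.93)))
print(gate.submit(Draft("Revised contract terms", {"legal"}, 0.97)))
print(gate.submit(Draft("Inventory forecast", {"operations"}, 0.55)))
```

Once review outcomes show the system is reliable for a given topic, that topic can be removed from the sensitive set, which is one way to operationalise "until the system proves itself".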

Feedback loops are critical. Capture user feedback, model performance data, and changing business requirements, and feed them directly into both model retraining and data product refinement. Track lineage, enforce version control, and monitor quality so that as adoption grows, trust remains intact. When governance is baked in early, scale becomes a competitive advantage instead of a liability.

Your choice of AI foundation, whether open-source, proprietary, or hybrid, will define the flexibility, cost, and sustainability of your GenAI program. Open-source models like LLaMA give control and customisation; proprietary offerings such as OpenAI's GPT models or Anthropic's Claude provide speed to market; hybrid approaches can deliver a balance of both.

Whatever the choice, avoid hard lock-in. Decouple data orchestration, prompt engineering, and model integration so you can switch providers or add new models without breaking your pipelines. Over time, a multi-model orchestration strategy often proves most resilient, using the best model for each specific task instead of forcing one model to do everything.
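Decoupling model integration usually means coding against a thin interface rather than a vendor SDK. The Python sketch below assumes a hypothetical `TextModel` protocol and two stub providers that stand in for real clients; it does not call any real vendor API. A router maps each task type to the model best suited to it, which is one simple form of the multi-model orchestration described above.

```python
from typing import Protocol


class TextModel(Protocol):
    """Minimal provider-agnostic interface (hypothetical; not a vendor SDK)."""
    name: str

    def generate(self, prompt: str) -> str: ...


class StubOpenWeightsModel:
    """Stand-in for a self-hosted open-source model."""
    name = "open-weights-stub"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt[:40]}"


class StubHostedModel:
    """Stand-in for a proprietary, API-hosted model."""
    name = "hosted-stub"

    def generate(self, prompt: str) -> str:
        return f"[{self.name}] {prompt[:40]}"


class ModelRouter:
    """Routes each task type to a registered model, with a fallback default."""

    def __init__(self, default: TextModel) -> None:
        self.default = default
        self.routes: dict[str, TextModel] = {}

    def register(self, task: str, model: TextModel) -> None:
        self.routes[task] = model

    def run(self, task: str, prompt: str) -> str:
        # Pipelines call run(); they never reference a specific provider.
        model = self.routes.get(task, self.default)
        return model.generate(prompt)


router = ModelRouter(default=StubHostedModel())
router.register("summarise", StubOpenWeightsModel())
print(router.run("summarise", "Summarise the Q3 support tickets"))
print(router.run("draft_email", "Draft a renewal reminder"))
```

Because pipelines depend only on `generate()`, replacing a stub with a real client, or adding a new model for a new task, is a single `register` call rather than a pipeline rewrite.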

But the smartest AI foundation won’t take you far if your data layer isn’t equally ready. A Data Product Platform becomes the connective tissue, ensuring every model, workflow, and experiment runs on trusted, reusable, and well-governed data from Day 1.

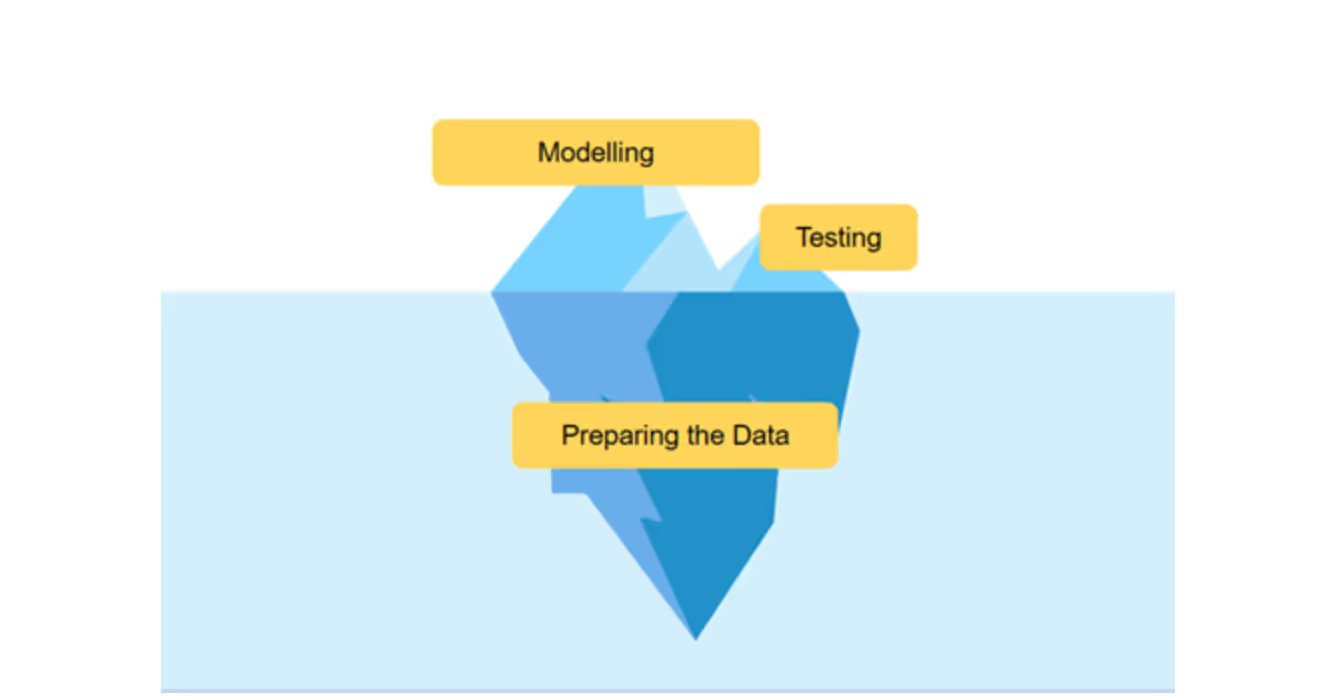

Most conversations around GenAI revolve around model capabilities, but one of the biggest challenges is data chaos. To perform well in production, GenAI systems need more than API access; they also require contextual, consistent, and explainable data to generate the expected results.

Without such data, organisations run into broken workflows, hallucinations, and reduced user trust.

This is where a data product platform becomes crucial: it transforms scattered data into well-governed, highly usable, and valuable assets that carry the right context.

Here are a few key areas where a data product platform plays a crucial role:

With reusable data products, teams no longer need to rebuild connectors or prompts every time. They can draw on a library of curated data products that can be extended, reused, and tuned across domains. As a result, cross-functional GenAI use cases see wider adoption.

A data product platform helps standardise critical business concepts, so that terms like ‘partner value’ and ‘ROI’ are defined once and used consistently everywhere. This removes ambiguity and ensures that models operate on shared business logic defined in the semantic layer.
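One way to picture "define once, use everywhere" is a small registry of metric definitions that every consumer, including an LLM-backed assistant, resolves by name. The Python sketch below is illustrative only: the `MetricDefinition` structure, the `SemanticLayer` class, and the sample figures are assumptions, not a prescribed semantic-layer format. ROI uses the standard (gain - cost) / cost formula.

```python
from dataclasses import dataclass
from typing import Callable, Mapping


@dataclass(frozen=True)
class MetricDefinition:
    """A single governed business definition (hypothetical structure)."""
    name: str
    description: str
    inputs: tuple[str, ...]
    formula: Callable[[Mapping[str, float]], float]


class SemanticLayer:
    """Registry so every consumer computes a metric the same way."""

    def __init__(self) -> None:
        self._metrics: dict[str, MetricDefinition] = {}

    def define(self, metric: MetricDefinition) -> None:
        # Refuse silent redefinition: one term, one meaning.
        if metric.name in self._metrics:
            raise ValueError(f"'{metric.name}' is already defined")
        self._metrics[metric.name] = metric

    def compute(self, name: str, data: Mapping[str, float]) -> float:
        if name not in self._metrics:
            raise KeyError(f"'{name}' has no governed definition")
        metric = self._metrics[name]
        missing = [key for key in metric.inputs if key not in data]
        if missing:
            raise ValueError(f"missing inputs for '{name}': {missing}")
        return metric.formula(data)


layer = SemanticLayer()
layer.define(MetricDefinition(
    name="roi",
    description="Return on investment: (gain - cost) / cost",
    inputs=("gain", "cost"),
    formula=lambda d: (d["gain"] - d["cost"]) / d["cost"],
))

# A dashboard and an AI assistant would both call the same definition.
print(layer.compute("roi", {"gain": 150_000.0, "cost": 100_000.0}))  # 0.5
```

Asking for a term with no governed definition, such as an undefined `partner_value`, raises an error instead of letting each consumer improvise its own formula.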

A data product platform tracks usage, lineage, and quality, bringing transparency to the fore. When GenAI tools are aligned with the data product approach, enterprises can reduce risk and pave the way for scalable AI systems.
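The tracking described here can be sketched as metadata that travels with each data product. In this minimal Python example, the `DataProduct` class, its quality rule, and the dataset names are all hypothetical; the point is that upstream lineage is recorded, every read is logged, and rows failing a quality check are counted and withheld before a GenAI tool consumes them.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable


@dataclass
class DataProduct:
    """A governed dataset with lineage, a usage log, and a quality rule (hypothetical)."""
    name: str
    version: str
    upstream: list[str]  # lineage: names of source datasets
    rows: list[dict]
    quality_rule: Callable[[dict], bool]
    usage_log: list[tuple[str, str]] = field(default_factory=list)

    def quality_report(self) -> dict:
        failed = [row for row in self.rows if not self.quality_rule(row)]
        return {"total": len(self.rows), "failed": len(failed)}

    def read(self, consumer: str) -> list[dict]:
        """Log who read the product and when, then return only rows passing the rule."""
        self.usage_log.append((consumer, datetime.now(timezone.utc).isoformat()))
        return [row for row in self.rows if self.quality_rule(row)]


tickets = DataProduct(
    name="support_tickets",
    version="1.2.0",
    upstream=["crm.raw_tickets", "helpdesk.exports"],
    rows=[
        {"id": 1, "status": "open", "text": "Login fails on mobile"},
        {"id": 2, "status": "closed", "text": ""},  # empty text fails the rule
    ],
    quality_rule=lambda row: bool(row.get("text")),
)

print(tickets.quality_report())  # {'total': 2, 'failed': 1}
context = tickets.read(consumer="support-copilot")
print(len(context), tickets.usage_log[0][0])  # 1 support-copilot
```

In a real platform this metadata would live in a catalog rather than in memory, but the pattern is the same: consumers read through the product, so every access leaves a trace.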

Through a data product platform, GenAI stays grounded in enterprise reality and becomes a strategic capability.

Even the most promising AI initiatives often get trapped in proofs of concept (PoCs) that struggle to reach production. To unlock real business value, AI needs to move from isolated experiments to productised, reusable capabilities embedded within the business.

This is where a platform-focused, data product-driven approach becomes essential. It reframes GenAI not as an isolated project but as a modular capability that can scale across multiple domains.

The impact of Generative AI is very real. But delivering enterprise value takes more than simply connecting to a model. It is about solving the underlying data problem that shapes AI outcomes; left unsolved, that problem is why many GenAI implementations struggle with inconsistency, data inaccessibility, and silos.

That is why enterprises need a data product platform: it transforms fragmented datasets into reusable, governed, and contextual building blocks that can be trusted. By embedding a semantic layer, access controls, and quality checks, it brings systematic GenAI enablement well within reach.

This is the real implementation strategy.

Build once, reuse everywhere.

Q1. How does generative AI work?

Ans: The way in which generative AI systems work can be summarised into five broad steps:

Q2. Can we say that a data product platform is foundational to AI governance?

Ans: Yes, a data product platform is crucial for AI governance as it helps in embedding transparency, trust, and control into the GenAI lifecycle. When access controls, semantic clarity, and quality standards are enforced, it ensures that LLMs always have compliant and reliable inputs.
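As a rough illustration of enforcing access controls before an LLM sees any data, the Python sketch below filters records by the caller's role and masks restricted fields. The role names, data domains, the `ROLE_POLICIES` table, and the `"***"` masking convention are all hypothetical assumptions, not a specific product's policy model.

```python
from dataclasses import dataclass

# Hypothetical policy table: which data domains each role may pass to an LLM,
# and which fields must be masked before they leave the governed layer.
ROLE_POLICIES = {
    "support_agent": {"domains": {"tickets"}, "masked_fields": {"email"}},
    "finance_analyst": {"domains": {"tickets", "invoices"}, "masked_fields": set()},
}


@dataclass
class Record:
    domain: str
    fields: dict


def build_context(role: str, records: list[Record]) -> list[dict]:
    """Return only records the role may see, with restricted fields masked."""
    policy = ROLE_POLICIES.get(role)
    if policy is None:
        return []  # unknown roles get no context at all (default deny)
    context = []
    for record in records:
        if record.domain not in policy["domains"]:
            continue  # domain not permitted for this role
        context.append({
            key: ("***" if key in policy["masked_fields"] else value)
            for key, value in record.fields.items()
        })
    return context


records = [
    Record("tickets", {"id": 7, "email": "a@example.com", "issue": "Refund delayed"}),
    Record("invoices", {"id": 42, "amount": 1200}),
]
print(build_context("support_agent", records))
# [{'id': 7, 'email': '***', 'issue': 'Refund delayed'}]
```

Unknown roles receive an empty context, so the default is deny rather than allow; only what survives this filter would be placed into a prompt.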

Q3. How important is it for enterprises to have a good AI adoption strategy in place?

Ans: A solid AI adoption strategy aligns AI efforts with business objectives and helps avoid wasted investment. The right strategy leads to governed, scalable, and reusable AI capabilities instead of isolated experiments.


Simran is a content marketing & SEO specialist.

