Download Modern Data Survey Report

Oops! Something went wrong while submitting the form.

Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community

Latest reads...

TABLE OF CONTENT

This piece is a community contribution from Francesco De Cassai, an expert craftsman of efficient Data Architectures using various patterns. He embraces new patterns, such as Data Products, Data Mesh, and Fabric, to capitalise on data value more effectively. We highly appreciate his contribution and readiness to share his knowledge with MD101.

We actively collaborate with data experts to bring the best resources to a 10,000+ strong community of data practitioners. If you have something to say on Modern Data practices & innovations, feel free to reach out!

🫴🏻 Share your ideas and work: community@moderndata101.com

*Note: Opinions expressed in contributions are not our own and are only curated by us for broader access and discussion. All submissions are vetted for quality & relevance. We keep it information-first and do not support any promotions, paid or otherwise!

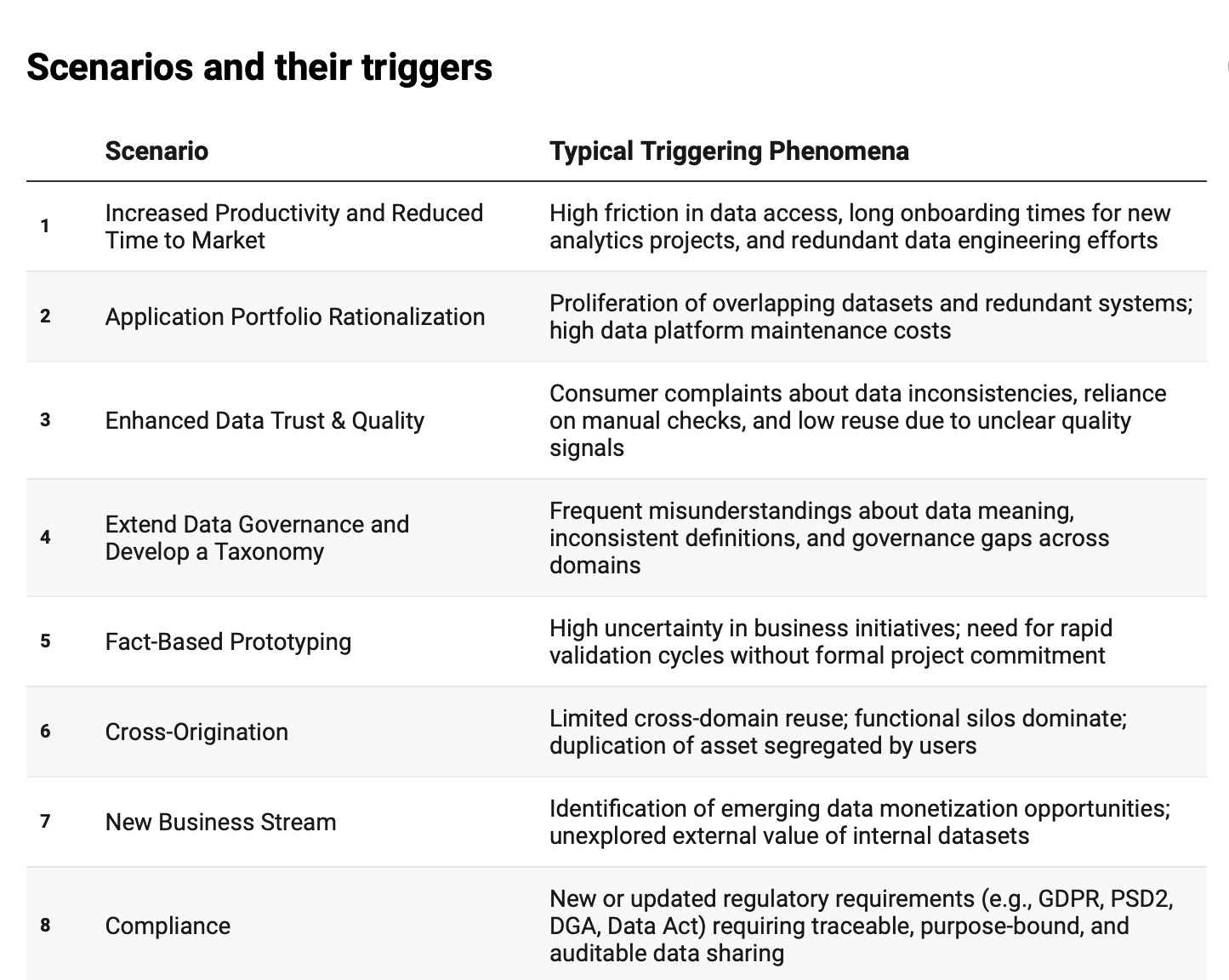

In the first part, we discussed the first three scenarios, the triggering phenomenon in those scenarios that caused data-sharing challenges. Then we explored implementation strategies and architectural patterns to navigate these challenges.

Sharing notes on collective intelligence of the community and where data sharing conversations are headed.

Based on the above, we envision data sharing as a circular ecosystem divided into three overlapping zones (like a Venn diagram, but more organic):

Before moving on to Part 2 of Data Sharing Scenarios by Francesco, a champion community contributor 🏆, we highly recommend diving into Part 1 of Where Data Comes Alive: A Scenario-Based Guide to Data Sharing.

Where Data Comes Alive: A Scenario-Based Guide to Data Sharing | Part 1

FRANCESCO DE CASSAI

Part 1 covers:

Scenario 1: Increased Productivity and Reduced Time to Market

Scenario 2: Application Portfolio Rationalization

Scenario 3: Enhanced Data Trust and Quality

In this second piece, we’ll strive to do the same with a wider band of organizational scenarios.

Each scenario follows a consistent structure for quick understanding and application:

This modular structure allows you to read each scenario independently, compare them, and reflect critically on which elements most apply to your current environment.

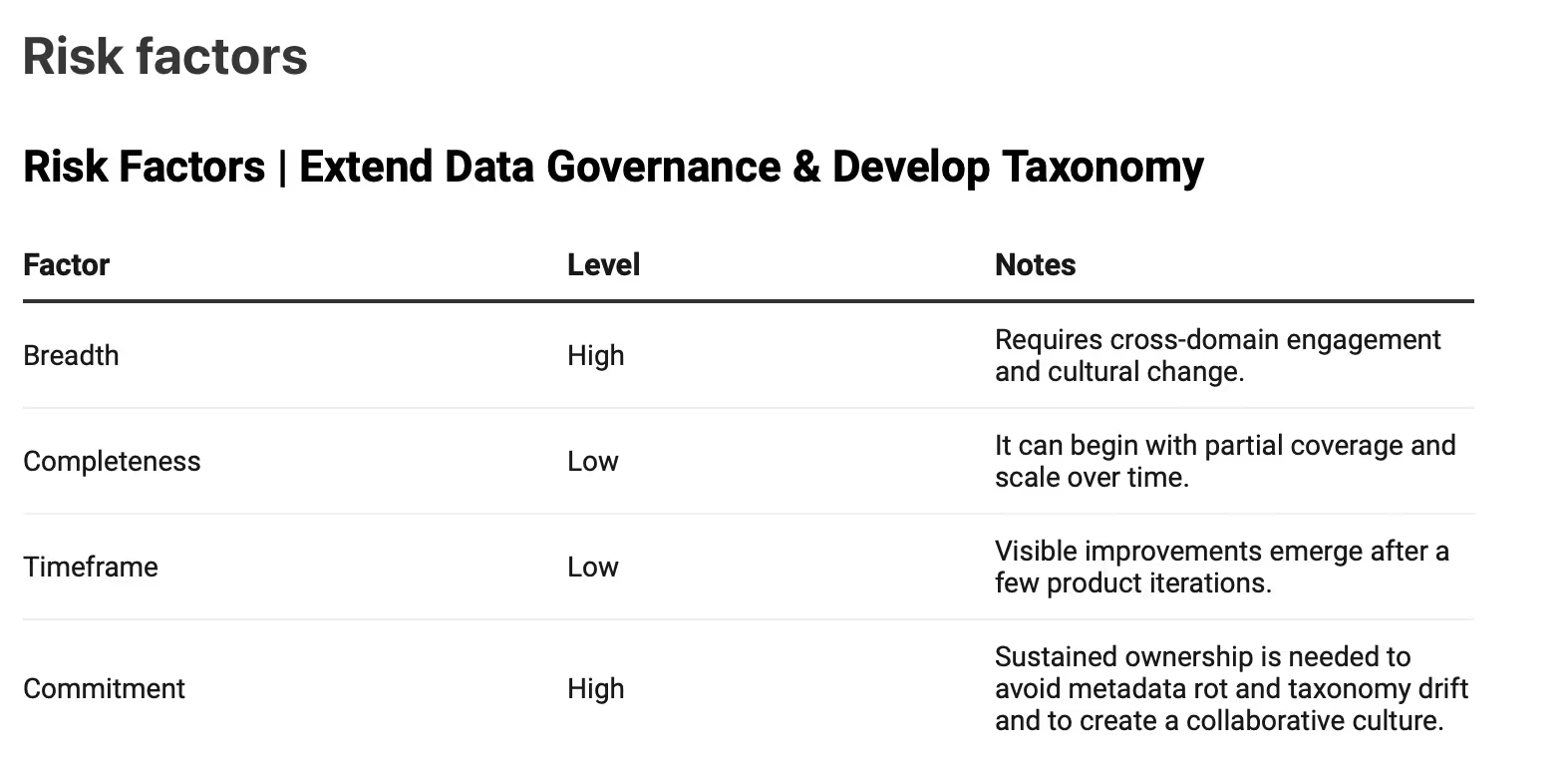

In many organizations, data governance operates in isolation, detached from daily practices and perceived as a compliance-driven exercise rather than a value enabler. Metadata is maintained reactively, often only in response to regulatory demands, and quickly becomes outdated or irrelevant in operational contexts.

This disconnect creates a paradox: governance exists but doesn’t govern. Business users work around it, technical teams ignore it, and new initiatives proceed with tribal knowledge rather than shared standards. The root cause is not a lack of governance frameworks, but the lack of activation: policies and metadata that remain static, passive, and invisible.

Reversing this trend requires moving from a static model to an active and operational governance approach, where metadata flows through systems, informs decision-making, and becomes embedded in the delivery cycle. A strong taxonomy is central to consistency, discoverability, and shared meaning across domains.

This scenario focuses on making governance visible and valuable. When metadata becomes part of the operational flow, it creates feedback loops, improves ownership, and raises data quality and decision-making. It also reduces the burden on governance teams because when the system works, people contribute by design, not by exception.

A marketplace makes governance relevant, visible, and self-reinforcing. It exposes metadata directly at the point of consumption, turning what is often a backend obligation into a frontline enabler.

It also creates tangible incentives for producers and stewards: products with richer metadata are easier to find, more likely to be reused, and more trusted by consumers. This builds natural incentive to keep documentation useful: less top-down policing, more organic upkeep.

TIP – Let Taxonomy Breathe: A good taxonomy grows with its people. When teams suggest new terms or flag gaps, it's perceived as care instead of chaos. That’s how structure stays real, and stays used.

Most importantly, the marketplace closes the loop: it allows organizations to measure metadata quality, coverage, and usage, bringing governance out of the abstract and into the metrics.

The minimal foundations you need in place before launching the scenario are:

The capabilities that will necessarily need to be implemented for the scenario are:

Key indicators to track how this scenario is playing out:

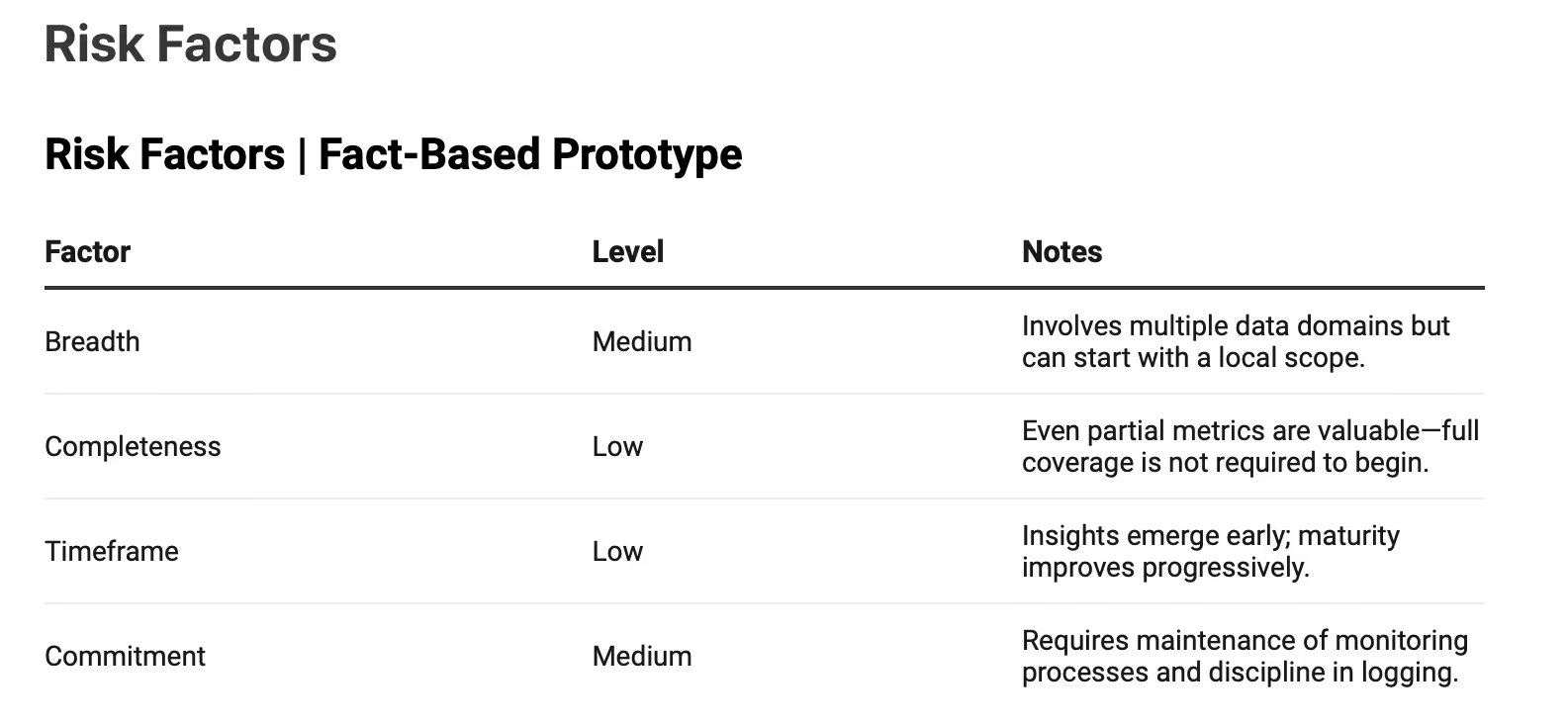

Prototyping gets treated like a playground. It is often seen as a creative and experimental phase with fast iterations, short cycles, and minimal governance. However, this phase can become chaotic in complex data environments, driven more by instinct than evidence. Teams test ideas without a shared context or visibility into prior work, and prototypes either fail silently or evolve into production without validation.

A recurring anti-pattern in this context is the HiPPO effect: when decisions about what to build next are based not on data, but on the opinion of the highest-paid person in the room. Even well-intentioned executives fall into this trap: prioritizing visibility or gut feel over usage signals, reuse potential, or actual demand. The result is predictable: teams invest in ideas that are politically safe but technically redundant or irrelevant to real needs.

This scenario explores a different model: prototyping grounded in evidence. Not just "try fast and fail fast," but try smart, with visibility into what’s already in use, what’s been attempted before, and what the current demand signals suggest. A fact-based prototype process is not about limiting creativity; it’s about focusing energy where impact is more likely and learning from the ecosystem as you go.

Rather than starting from a blank slate, the goal is to prototype on top of structured knowledge: technical metrics, user behavior, reuse signals, and monitored gaps. In this way, even early-stage efforts contribute to a broader understanding and avoid duplicating failed attempts.

In this scenario, the marketplace acts as an observational layer. The system sees what’s being consumed, which domains are investing effort, and what products are underused or emerging.

The marketplace enables teams to anchor prototyping decisions in real usage data, not hierarchy or assumptions, by exposing demand patterns, reuse signals, and access trends. This helps defuse HiPPO-driven prioritization by making usage facts visible, comparable, and actionable. It also provides continuity: a prototype built from an existing product can inherit documentation, quality checks, and contractual terms, speeding up validation and reducing technical debt when scaling.

The minimal foundations you need in place before launching the scenario are:

The capabilities that will necessarily need to be implemented for the scenario are:

Here are the key metrics that should be used to monitor the progress of the scenario:

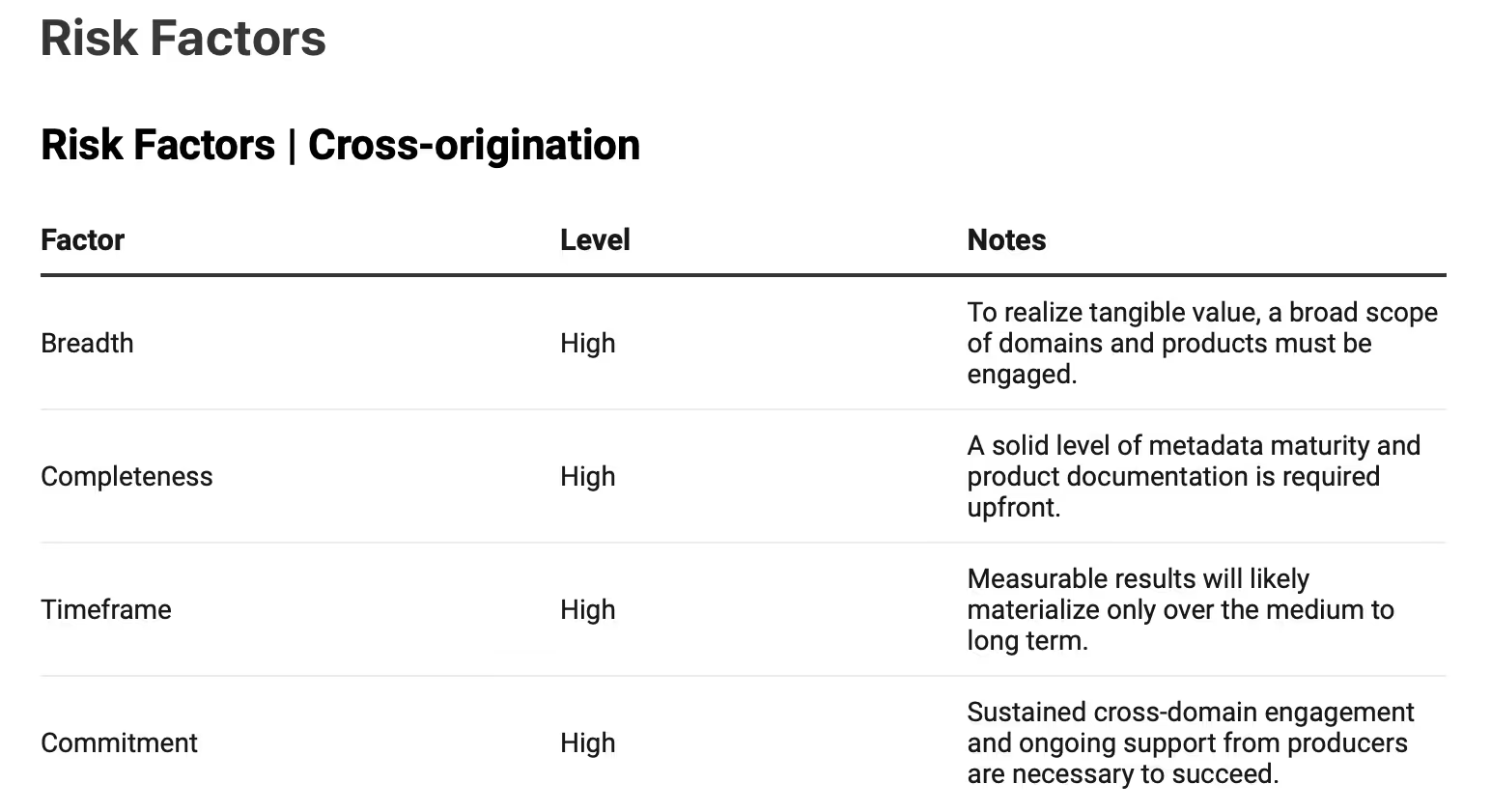

Cross-origination refers to the capacity to repurpose informational assets across different domains or teams; when data products, insights, or intermediate artifacts are made accessible across organizational lines, the chances for innovation increase significantly.

Teams thrive on fresh perspectives: new angles, unexpected links, patterns missed inside silos. But cross-origin reuse doesn’t just happen. It takes intent: making data products legible to those outside the domain.

Things like rich metadata, clear semantics, and crisp context notes aren’t extras but the bridge. And as reuse grows, so does the need for strong edge: clear boundaries that keep meaning tight and use on track.

In essence, cross-origination is ultimately a conscious design choice- creators must consider not only their known consumers but potential future users they may never engage with directly, and tailor their products accordingly.

A marketplace plays a central role in enabling cross-originating by making data products discoverable beyond their original domain. Without a shared space where products are cataloged, documented, and searchable, reuse relies on informal channels or personal connections, which do not scale. The marketplace acts as a bridge between teams, understandably exposing data even to users without deep domain knowledge.

It also provides the contextual scaffolding needed for safe and effective reuse: business descriptions, intended use cases, known limitations, and audience tags. These elements help prevent semantic drift and misuse, especially in cross-domain scenarios where assumptions differ. In short, the marketplace turns isolated data into shareable assets, designed for current and future users who may not speak the same language.

The minimal foundations you need in place before launching the scenario are:

The capabilities that will necessarily need to be implemented for the scenario are:

Here are the key metrics that should be used to monitor the progress of the scenario:

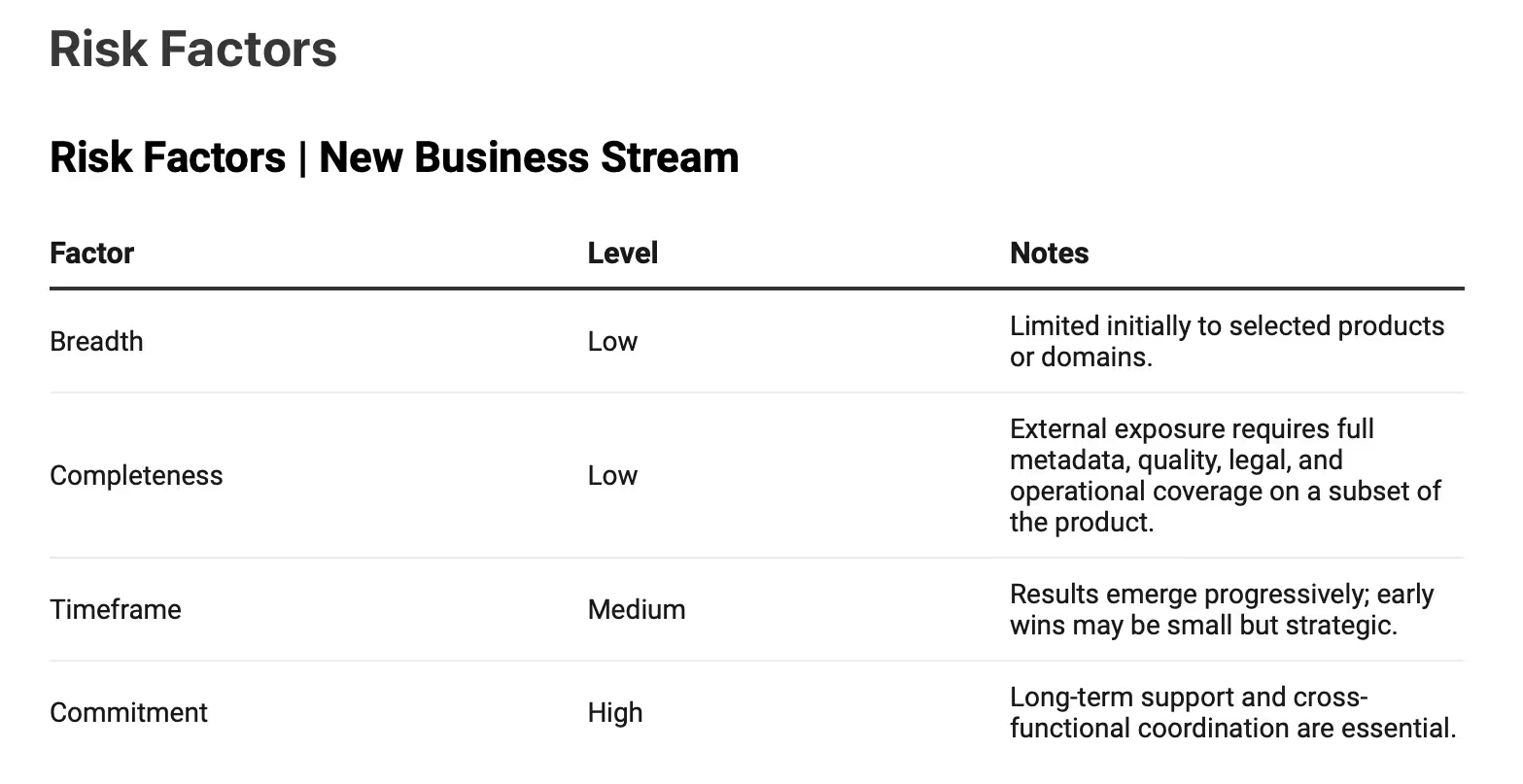

Monetizing data is an appealing but often misunderstood objective. Turning data into revenue sounds great, but it’s often more hype than readiness. Whether it’s insights for partners, dataset access, or data-powered services, this isn’t just about ambition or enthusiasm. It takes scaffolding: trust, timing, product fit, and a tight link to real business value.

Creating a new data-driven business strategy should never be approached as a shortcut to profitability or as the primary justification for investing in data capabilities. Data monetization is a consequence of maturity, not its starting point. Focusing too early or too heavily on commercial exploitation often distracts from foundational work (quality, governance, discoverability, and usage tracking) that ultimately makes monetization feasible in the first place.

This scenario addresses what to do when the organization is ready, internal reuse is consolidated, product ownership is defined, and interest emerges from partners or external stakeholders. It focuses on safely and sustainably opening a data product or group of products to external access under contractual and operational controls.

WARNING – DON’T FOCUS ON MONETIZATION: While monetization is a valid and often strategic scenario, it should not be the primary driver for building a data platform or marketplace. Commercial use will be fragile or irrelevant if internal visibility, reuse, and trust are lacking. Focus on sustainable foundations, monetization will follow.

A marketplace provides the operational foundation for treating data as a commercial product. It centralizes visibility, enforces access conditions, and formalizes ownership, licensing, and provisioning logic. Without a marketplace or similar structured environment, data monetization remains manual, opaque, and limited to one-off efforts.

Critically, the marketplace also differentiates internal from external exposure. Products can be marked as "external grade" only if they meet certain thresholds: quality, documentation, SLA, security, and legal validation. This acts as a filter and a safeguard. It also helps structure pricing logic, consumer onboarding, contract versioning, and deprecation: essential for sustainable external use.

Most importantly, the marketplace enables monetization without compromising internal integrity: what is exposed externally follows a governed path, separate from tactical or experimental data usage within the enterprise.

The minimal foundations you need in place before launching the scenario are:

The capabilities that will necessarily need to be implemented for the scenario are:

Here are the key metrics that should be used to monitor the progress of the scenario:

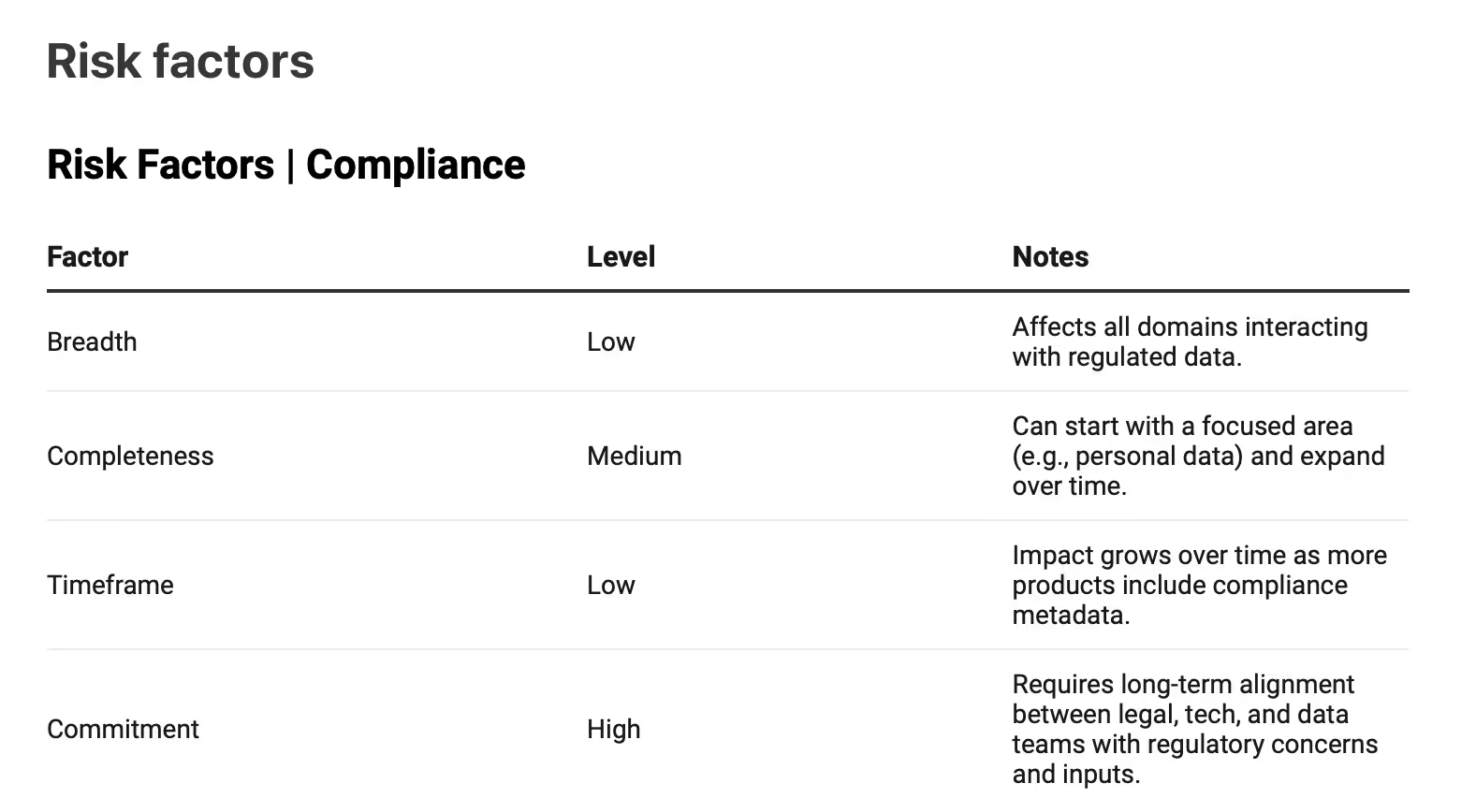

Data regulations have grown in number, scope, and complexity in recent years. Frameworks like GDPR, PSD2, DGA, AI Act, and the upcoming Data Act redefine what data can be shared, how, with whom, under which guarantees, and for what purposes.

For many organizations, these constraints are seen as blockers: something to be dealt with after the platform is designed or the use case defined. But this approach rarely works. When compliance is treated as an afterthought, it leads to rework, friction, or stagnation.

This scenario takes the opposite view: regulatory alignment can be designed into the architecture from day one. Instead of hardcoding exceptions, the system enables sharing by construction, under the right conditions, not by reducing ambition, but by embedding concepts like purpose limitation, contractual enforceability, auditability, and role-based access directly into the data sharing platform.

A marketplace is crucial for changing compliance from cumbersome paperwork to streamlined platform logic. It allows Purpose-bound access through metadata and contracts, implements differentiated provisioning flows based on sensitivity or legal basis, and ensures visibility and traceability via usage logs and versioning. Additionally, it provides pre-packaged governance models that align with regulatory requirements without creating new ones for each project.

For regulations such as PSD2 (banking), DGA (public-private interoperability), or the Data Act (ensuring fair access among parties), the marketplace creates a collaborative control surface where roles, rights, and obligations are established and enforced among participants, not hidden in code or dispersed across Excel spreadsheets.

The minimal foundations you need in place before launching this scenario are:

Capabilities to embed compliance in operational workflows:

Key indicators should be the compliance ones.

This piece is not meant to offer a complete taxonomy of everything you could do with data sharing. That would be impossible and misleading. Instead, it proposes a curated set of recurring situations, selected not for their theoretical purity, but for their practical relevance.

You’ve seen organizations that want to monetize data before having internal trust. Others talk about reuse, but can't find what they’ve already built. Some are driven by regulatory pressure, others by experimentation. All of them are real. All of them start messy.

Scenarios help bring structure to that mess. They are a way of thinking a scaffolding to recognize what kind of challenge you’re facing and what it will take to address it.

They let you:

These scenarios are not mutually exclusive. In fact, they often coexist and compete.

A team may want to accelerate time to market, while governance needs stronger documentation. Compliance may demand control, while business wants openness. The key is to recognize the dominant constraint in each phase and act accordingly.

Architecture will follow that constraint; it has to. But if you skip the reflection, your architecture may be elegant and useless. So treat these scenarios not as models to implement, but as decision-making frames. Use them to:

Thanks for reading Modern Data 101! Subscribe for free to receive new posts and support our work.

If you have any queries about the piece, feel free to connect with the author(s). Or feel free to connect with the MD101 team directly at community@moderndata101.com 🧡

Find me on LinkedIn 🤝🏻

.avif)

Francesco’s expertise lies in crafting scalable data architectures that enhance data storage, retrieval, and processing along with a passion to explore new data management trends that help find new ways to capitalize on data value, such as Data Space and Governance.

Francesco’s expertise lies in crafting scalable data architectures that enhance data storage, retrieval, and processing along with a passion to explore new data management trends that help find new ways to capitalize on data value, such as Data Space and Governance.

Find more community resources

Find all things data products, be it strategy, implementation, or a directory of top data product experts & their insights to learn from.

Connect with the minds shaping the future of data. Modern Data 101 is your gateway to share ideas and build relationships that drive innovation.

Showcase your expertise and stand out in a community of like-minded professionals. Share your journey, insights, and solutions with peers and industry leaders.