
Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community


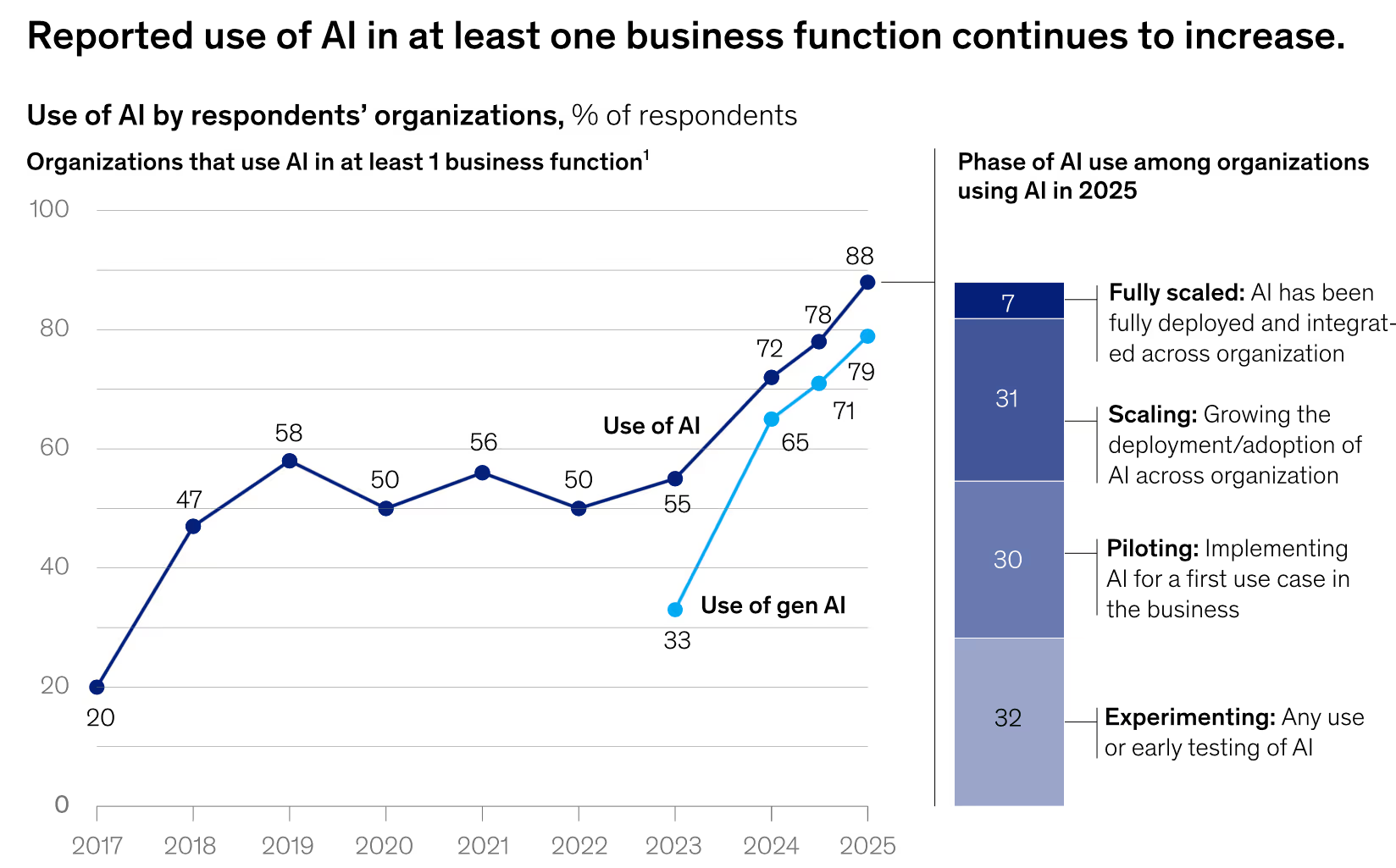

This year, organisations invested heavily in AI and in the data infrastructure that supports it. While many saw significant gains in their outcomes, a large number remained stuck in the pilot stage, with gaps in their workflows.

A sharp divide emerged between enterprises that moved from experimentation to operating AI in real time and at scale, and those that did not.

This pace is no surprise, given Statista's numbers.

The market for AI technologies is already worth USD 244 billion and is expected to exceed USD 800 billion by 2030.

What began as an emerging technology has gained traction beyond expectations. From autonomous agents to real-time insights, the question today is not just what AI does but how data and AI come together to deliver business value.

Many enterprises have also realised that technology alone will not fit the bill. The recurring bottleneck isn't model performance; it is the lack of context-rich, trusted, and productised data.

For CDOs, Chief Data and AI Officers, senior executives, data owners, data product managers, and other decision makers, it's imperative to understand the top data and AI trends for 2026, how each of them responds to this problem, and how enterprises can close the gap between AI initiatives and execution.

Most enterprises today struggle with "model sprawl", where many tools and models run in isolation, disconnected from the real business context. Such fragmentation increases risk, limits scalability, and leads to inconsistent behaviour.

A Nintex survey found that 51% of mid-sized organisations had 100-300 tools in their technology stack.

The same survey found that more than a quarter (28%) of IT leaders said having too many disconnected tools was a major contributor to below-par customer experiences.

Agentic AI is now changing this. It refers to a new generation of goal-oriented, autonomous systems that can reason and plan within dynamic business ecosystems. These agents don't just generate outputs; they also make decisions, collaborate, and execute workflows.

2025 turned a new leaf with the Model Context Protocol (MCP), an open protocol that lets models and agents safely access shared context and metadata across APIs, products, and applications. In 2026, this trend will continue to provide continuity of context, so that every agent action is backed by live business data rather than static prompts.

With MCP-driven agentic systems, organisations can move to orchestrated AI ecosystems where agents handle customer workflows, compliance checks, and operations on their own, with traceability and consistency. MCP helps enterprises operationalise AI at scale, where each decision can be made with confidence.
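To make the idea more concrete, here is a minimal, stdlib-only sketch of the message flow MCP standardises. MCP messages use JSON-RPC 2.0: a client discovers tools with `tools/list` and invokes one with `tools/call`. The tool `get_customer_context`, the `CUSTOMERS` data, and the in-process `handle` dispatcher are hypothetical stand-ins; a real deployment would use an official MCP SDK and a transport such as stdio or HTTP rather than this toy function.

```python
import json
from typing import Any, Dict

# Hypothetical stand-in for live business data; a real server would query governed systems.
CUSTOMERS = {"C-1001": {"tier": "gold", "open_tickets": 2}}


def get_customer_context(customer_id: str) -> dict:
    """Hypothetical tool: return the current context for one customer."""
    return CUSTOMERS.get(customer_id, {})


# Tools this toy server exposes, each with a JSON Schema describing its input.
TOOLS: Dict[str, Dict[str, Any]] = {
    "get_customer_context": {
        "handler": get_customer_context,
        "description": "Look up current context for a customer.",
        "inputSchema": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
    }
}


def _error(req_id: Any, code: int, message: str) -> str:
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Dispatch one JSON-RPC 2.0 request for the tools/list or tools/call methods."""
    req = json.loads(raw)
    method, params = req["method"], req.get("params", {})
    if method == "tools/list":
        result = {"tools": [
            {"name": name, "description": t["description"], "inputSchema": t["inputSchema"]}
            for name, t in TOOLS.items()
        ]}
    elif method == "tools/call":
        tool = TOOLS.get(params.get("name"))
        if tool is None:
            return _error(req["id"], -32602, "Unknown tool")
        data = tool["handler"](**params.get("arguments", {}))
        # Tool results are returned as a list of content items, here a single text item.
        result = {"content": [{"type": "text", "text": json.dumps(data)}], "isError": False}
    else:
        return _error(req["id"], -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


request = json.dumps({
    "jsonrpc": "2.0", "id": 1, "method": "tools/call",
    "params": {"name": "get_customer_context", "arguments": {"customer_id": "C-1001"}},
})
print(handle(request))
```

The point of the design is that the agent never receives a static snapshot pasted into its prompt: it asks for context at the moment it acts, through a discoverable, schema-described interface.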

Gartner's latest report highlighted the potential of domain-specific language models (DSLMs) in 2026. These are specialised AI models trained on the vocabulary, rules, and operational context of a particular industry or function. Instead of trying to be universal like general-purpose LLMs, they specialise, so they deliver higher accuracy, reduce ambiguity, and stay compliant with domain standards.

Why they’re rising now

Enterprises are done with "AI experiments." CIOs want systems that move real business metrics. DSLMs deliver that by bringing precision to high-stakes workflows such as finance reconciliation, clinical documentation, HR case management, and regulatory reporting, where generic LLMs often fail or hallucinate. By narrowing scope, DSLMs cut deployment friction, keep costs predictable, and get teams to production faster.


Many organisations struggle with vendor lock-in, high operational costs, and opaque black-box models tied to AI platforms. This slows experimentation and stifles innovation.

The rise of open-source AI systems and model ecosystems is now addressing this. In 2026, organisations will increasingly focus on open governance frameworks, open-weight models, and composable AI architectures.

When open-source components are combined with enterprise-grade governance, organisations can innovate faster and at lower cost while maintaining model auditability and transparency.

The shift towards open ecosystems is reshaping the competitive landscape. Enterprises adopting open, composable frameworks are shaping their AI strategies to avoid dependence on a single vendor.


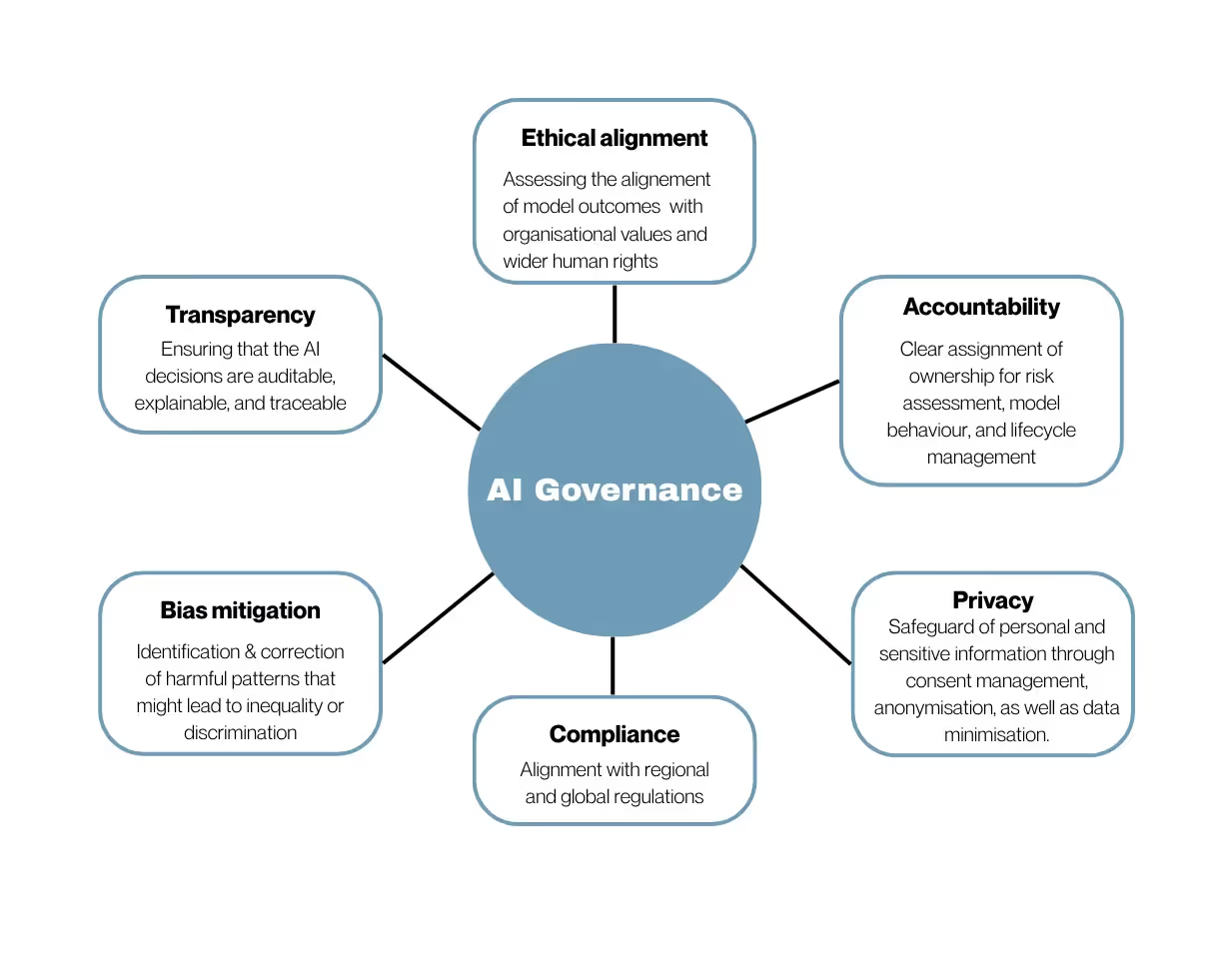

If leadership and governance are fragmented, even the most promising AI initiatives are bound to fail sooner or later. Lack of clear ownership, accountability, and ethical frameworks creates risks that can increase faster than innovation.

One of the top data and AI trends to watch in 2026 is the rise of integrated AI and data governance, marking a shift from control to enablement. Organisations are embedding policy-driven governance within pipelines, automating lineage tracking, and defining clear accountability through newer roles such as the Chief Data and AI Officer (CDAO).

A stronger governance framework improves compliance, trust, and agility, turning data from a liability into a strategic enabler that balances speed with responsibility. Enterprises that embed governance directly into their data platforms can scale AI faster with less risk, setting the standard for innovation.
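As one illustration of policy-driven governance embedded in a pipeline, here is a minimal Python sketch, assuming governance rules can be written as small functions that run before a dataset is published. The `Dataset` class, the two example policies, and the in-memory `LINEAGE` log are hypothetical; a real platform would rely on its own policy engine and lineage store.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Callable, Dict, List, Optional


@dataclass
class Dataset:
    name: str
    owner: Optional[str]          # accountable data owner, if any
    columns: Dict[str, str]       # column name -> classification, e.g. "public" or "pii"
    upstream: List[str] = field(default_factory=list)  # names of source datasets


# Hypothetical policies: each returns a violation message, or None if the dataset complies.
def require_owner(ds: Dataset) -> Optional[str]:
    return None if ds.owner else f"{ds.name}: no accountable owner"


def no_raw_pii(ds: Dataset) -> Optional[str]:
    pii = sorted(col for col, cls in ds.columns.items() if cls == "pii")
    return f"{ds.name}: unmasked PII columns {pii}" if pii else None


POLICIES: List[Callable[[Dataset], Optional[str]]] = [require_owner, no_raw_pii]

# Append-only lineage log; a real platform would write to a catalog or lineage service.
LINEAGE: List[dict] = []


def publish(ds: Dataset) -> List[str]:
    """Run every policy as a pipeline gate, record lineage, and return any violations."""
    violations = [msg for msg in (policy(ds) for policy in POLICIES) if msg is not None]
    LINEAGE.append({
        "dataset": ds.name,
        "upstream": list(ds.upstream),
        "owner": ds.owner,
        "published": not violations,  # only compliant datasets are published
        "at": datetime.now(timezone.utc).isoformat(),
    })
    return violations


orders = Dataset("orders_clean", "sales-data-team",
                 {"order_id": "public", "email": "pii"}, upstream=["orders_raw"])
print(publish(orders))
```

Because the checks and the lineage record live inside the publish step itself, every dataset that reaches consumers has passed the same rules and carries a trace of where it came from, which is the "enablement rather than control" shift described above.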


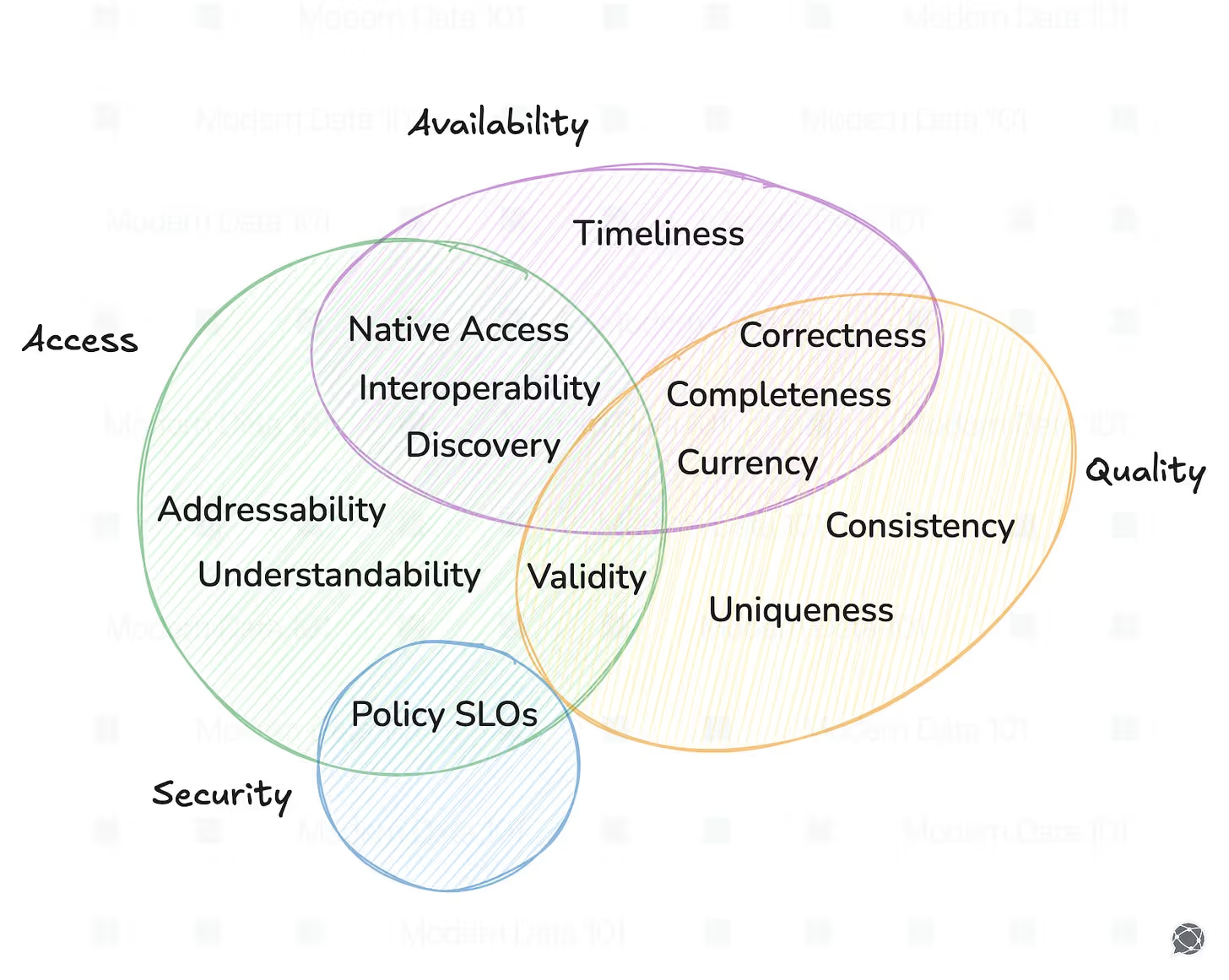

Organisations often end up treating data as a component of their infrastructure, keeping it stored, catalogued, and pipelined, and not treating it as a product with clear ownership, purpose, and measurable impact. The result? Duplication, poor data quality, and inconsistent results across the entire AI ecosystem.

Data productisation turns data into a reusable, governed, and consumable asset. Each data product, whether an API, a curated dataset, or a model endpoint, has defined ownership, SLAs, and observability.
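A minimal sketch of what ownership, SLAs, and observability might look like when declared in code, assuming a product publishes a freshness SLA and a completeness threshold. The `DataProduct` fields, the `check_slas` helper, and the example values are hypothetical, not the schema of any specific platform.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import List, Optional


@dataclass(frozen=True)
class DataProduct:
    """Hypothetical data product descriptor: ownership, purpose, interface, and SLAs."""
    name: str
    owner: str                  # accountable team
    purpose: str                # business question the product serves
    output_port: str            # how consumers access it: "api", "dataset", or "model_endpoint"
    freshness_sla: timedelta    # maximum acceptable age of the latest data
    min_completeness: float     # minimum fraction of non-null values in required fields


def check_slas(product: DataProduct, last_updated: datetime, completeness: float,
               now: Optional[datetime] = None) -> List[str]:
    """Observability check: return a list of SLA breaches (empty if the product is healthy)."""
    if now is None:
        now = datetime.now(timezone.utc)
    breaches = []
    age = now - last_updated
    if age > product.freshness_sla:
        breaches.append(f"stale: data is {age} old, SLA is {product.freshness_sla}")
    if completeness < product.min_completeness:
        breaches.append(f"incomplete: {completeness:.1%} < {product.min_completeness:.1%}")
    return breaches


churn = DataProduct(
    name="customer_churn_scores",
    owner="customer-analytics",
    purpose="Weekly churn risk per active customer",
    output_port="dataset",
    freshness_sla=timedelta(hours=24),
    min_completeness=0.98,
)
reference_time = datetime(2026, 1, 15, 12, 0, tzinfo=timezone.utc)
print(check_slas(churn, reference_time - timedelta(hours=30), 0.95, now=reference_time))
```

Declaring the SLA next to the owner and purpose means a breach can be routed to an accountable team instead of surfacing later as an unexplained drop in model quality.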

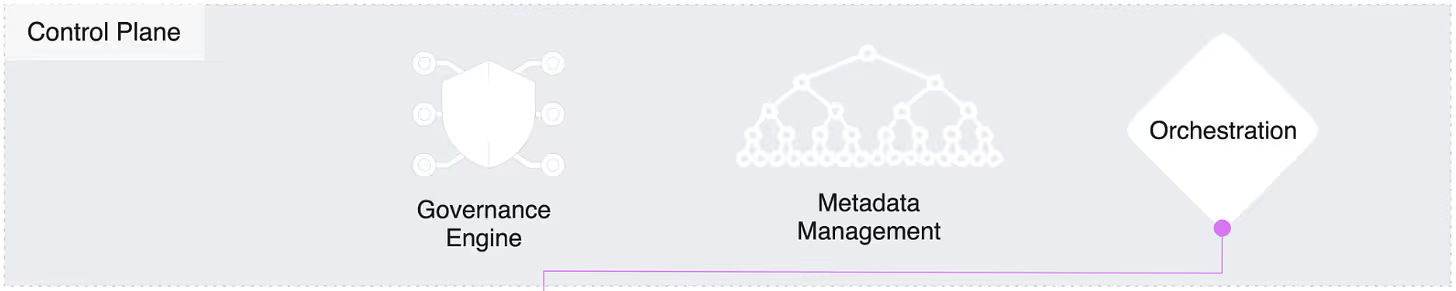

This productisation approach is operationalised through the Data Developer Platform (DDP), which enables teams to build, test, and deploy data products consistently across multiple domains. A DDP acts as the connective tissue between governance, data engineering, and AI delivery.

Productised data ensures reusability, reliability, and faster time-to-insight. It is productised data that allows the Model Context Protocol (MCP) and agentic AI to operate correctly, with clear lineage and trustworthy inputs.

Organisations adopting data productisation are building AI ecosystems that are dependable as well as intelligent, where every metric, model, and decision is tied to governed and verified data.

Many organisations still rely on batch-based data systems, which introduce latency and limit the responsiveness and accuracy of AI-driven decisions.

As AI becomes increasingly embedded in physical environments, edge computing and real-time analytics are becoming core priorities and will be among the top data and AI trends of 2026. Processing data close to where it is generated drastically cuts latency and allows AI to act in the moment.

As event-driven data platforms, streaming architectures, and low-latency inference become the new normal, 2026 is going to be the year when real-time decision-making becomes a reality.
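To contrast with batch processing, here is a minimal sketch of event-driven handling as it might run close to the data source: each reading is evaluated the moment it arrives against a small sliding window, so a spike is flagged immediately rather than at the next batch run. The `detect_spikes` function, its window size, its threshold factor, and the sample readings are illustrative assumptions, not the API of any particular streaming product.

```python
from collections import deque
from statistics import mean
from typing import Iterable, Iterator, Tuple


def detect_spikes(readings: Iterable[float], window: int = 5,
                  factor: float = 1.5) -> Iterator[Tuple[int, float]]:
    """Yield (index, value) as soon as a reading exceeds `factor` times the mean
    of the previous `window` readings. Works on any iterable, including a live feed."""
    recent: deque = deque(maxlen=window)  # only the last `window` readings are kept in memory
    for i, value in enumerate(readings):
        if len(recent) == window and value > factor * mean(recent):
            yield i, value  # act on this event now instead of waiting for a batch
        recent.append(value)


# Hypothetical sensor feed; in practice this would be a stream consumer or device callback.
sensor_feed = [10, 11, 9, 10, 10, 25, 10, 11]
for index, value in detect_spikes(sensor_feed):
    print(f"spike at reading {index}: {value}")
```

Because the generator keeps only a bounded window of state and emits results per event, the same logic can run on a small edge device as well as in a central streaming platform.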

Each of the data and AI trends mentioned above reinforces the same fundamental truth: that AI is only as strong as the data foundation beneath it.

Organisations that continue to treat data as an afterthought will struggle with scale, trust, and ROI. On the other hand, those that productise their data, adopt protocols such as MCP, and build self-serve data developer platforms will be able to operationalise AI responsibly.

In 2026 and beyond, the measure of organisational success won't be how much AI you build, but how intelligently your data products enable those AI systems to act.

Data productisation enables organisations to treat data as a governed, reusable, high-quality asset. This approach ensures consistent data access for AI models, improving reliability, innovation, and operational efficiency across the board.

Organisations should modernise their infrastructure, drive data literacy across domains, and invest in governance-powered AI platforms. Doing so prepares them for emerging trends such as data productisation and autonomous AI agents.

The five big ideas in AI are:

1. Perception

How machines interpret sensory data (e.g., vision, speech).

2. Representation & Reasoning

How AI stores knowledge and uses it to think or solve problems.

3. Learning

How systems improve automatically from data (machine learning).

4. Natural Interaction

How humans communicate with AI through language, speech, or gestures.

5. Societal Impact

How AI affects ethics, jobs, privacy, and daily life.


Simran is a content marketing & SEO specialist.

