
Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community


The story of AI sweeping across almost every industry and mastering the shiny lab demo reads like pure science fiction. The moment you try to move that innovation into the real world, however, reality hits hard: a model on its own is of little use. Treating your cutting-edge algorithm as the entire solution is like hiring a truly gifted Head Chef but providing no kitchen, no ingredients, and no staff.

Successful AI innovation hinges entirely on having the right data platform as its foundation, that is, the functioning kitchen. We will navigate the diverse landscape of modern data platforms, from those focused on governance and ingredient prep to those handling complex service orchestration.

This guide is your menu. We’ll cut through the noise, covering the core strengths and ideal recipes of today's leading data platforms to help you navigate, assess, and choose the perfect fit for your AI ambitions.

The core challenge of enterprise data platforms is fragmentation. Your data system often looks less like a sleek operation and more like a messy, uninspected kitchen with prep stations scattered everywhere. We need a unified interface.

An AI Data Platform is the proposed solution. It is not just a storeroom at the back but an intelligent operating system designed to manage the entire workflow. It unifies ingredient sourcing, health code compliance (governance), preparation, recipe testing (modelling), and service delivery (deployment).

The modern platform rests on three pillars:

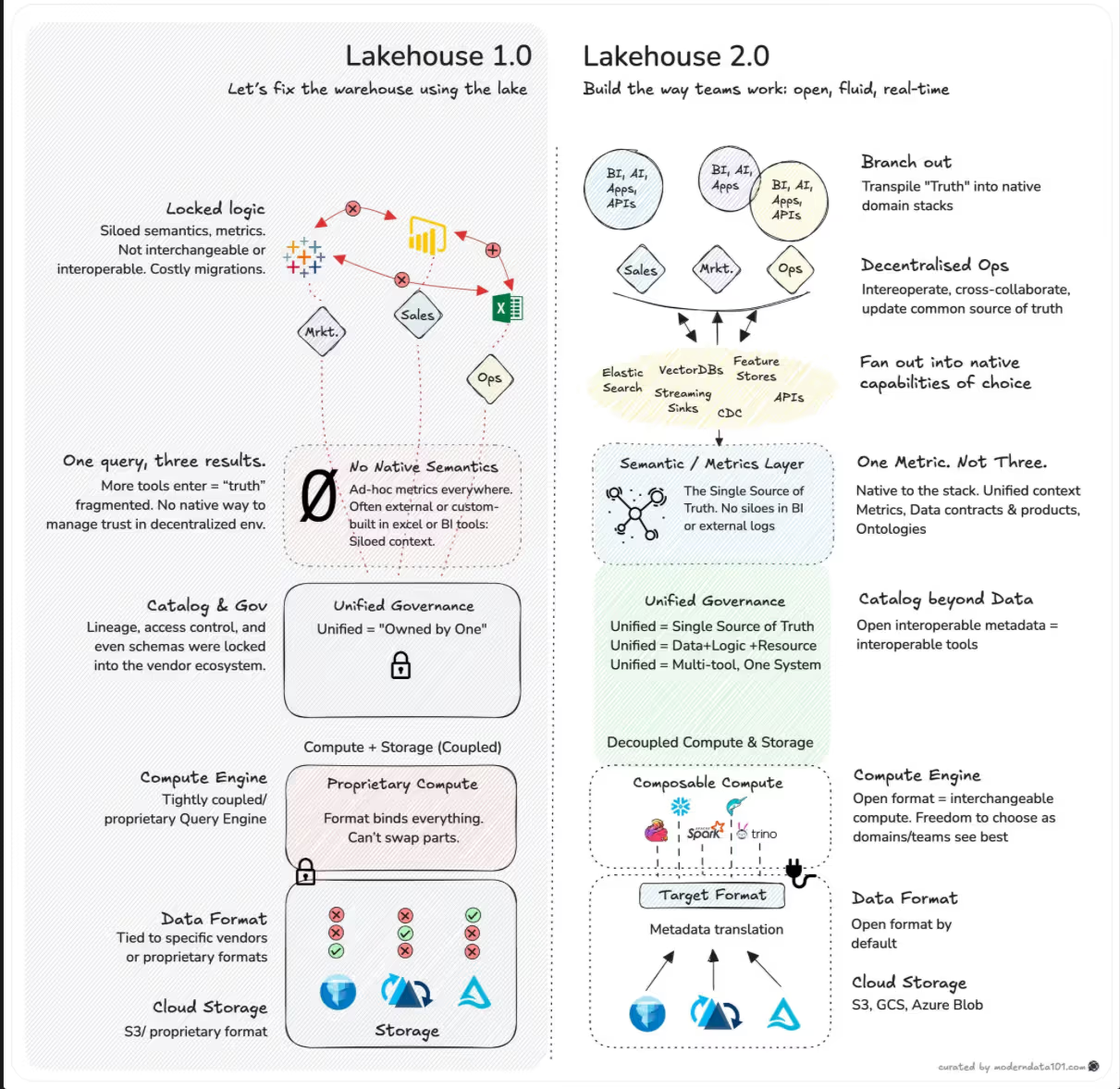

The problem: your business data is probably scattered across two separate camps. One is the high-speed, structured data warehouse; the other is a massive, messy data lake. This split creates silos, and silos are a headache.

The second generation of the Lakehouse Architecture, Lakehouse 2.0, is the fitting solution. Think of it as uniting those two camps into one highly organised Smart Kitchen.

This unified foundation lets you build reliable Data Products: reusable, high-quality ingredients that come with guarantees. Crucially, Lakehouse 2.0 is built for Generative AI and natively integrates a vector database, so the context a model needs can be looked up by semantic similarity and supplied alongside the prompt. Making this lookup native eliminates clunky manual steps and cuts down on "hallucinations", because answers are grounded in your own data.
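To make the idea of a native vector lookup concrete, here is a minimal sketch of similarity-based retrieval feeding a prompt (the retrieval-augmented generation pattern). It assumes a tiny in-memory index of hand-written three-dimensional embeddings; the index contents, `retrieve`, and `build_prompt` are hypothetical, and a real lakehouse would generate embeddings with a model and query its own vector index rather than a Python dict.

```python
import math

# Hypothetical in-memory "vector index": document text -> embedding.
# Real embeddings come from an embedding model and have hundreds of dimensions.
INDEX: dict[str, list[float]] = {
    "Quarterly revenue report for the EMEA region": [0.9, 0.1, 0.0],
    "Customer refund policy and return windows": [0.0, 0.2, 0.9],
    "EMEA sales team structure": [0.7, 0.6, 0.1],
}


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def retrieve(query_vec: list[float], k: int = 2) -> list[str]:
    """Return the k documents whose embeddings are most similar to the query."""
    ranked = sorted(INDEX, key=lambda doc: cosine(query_vec, INDEX[doc]), reverse=True)
    return ranked[:k]


def build_prompt(question: str, query_vec: list[float], k: int = 2) -> str:
    """Ground the model by placing the retrieved context ahead of the question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query_vec, k))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Usage: the query embedding would normally come from the same embedding model.
print(build_prompt("What is our refund window?", [0.0, 0.1, 1.0], k=1))
```

Because only the top-ranked documents reach the prompt, the model answers from retrieved facts instead of guessing, which is where the reduction in hallucinations comes from.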

But the real magic is composability. We’ve moved past the "one appliance for everything" bottleneck. The lakehouse becomes a central hub that seamlessly fans out to specialised equipment when needed. The architecture enables plug-and-play constructs suited to the nature of the business:

The dirty secret of AI is that garbage ingredients make a garbage product. It’s always about the prep work, and that’s where facilitators of AI-readiness, like data products, come in.

These data platforms enable a Human-in-the-Loop (HITL) methodology. This isn't just about slicing and dicing; it's about supplying human judgment where the AI is weakest.

Such a platform strategy handles functions like reducing data bias, adding nuance, and refining the final taste through techniques such as Reinforcement Learning from Human Feedback (RLHF).

The platform's job is to transform raw, ambiguous produce into reliable ground truth by blending expert workers with AI-assisted trimming tools. These platforms provide the necessary human oversight, specialised annotation services, continuous model evaluation, and monitoring needed to keep the data and its semantics (metadata and context) clean, fair, and fit for purpose across all data modalities (text, vision, speech, multimodal).
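The routing step at the heart of HITL can be sketched in a few lines: AI pre-labels every item, confident labels are accepted automatically, and uncertain ones are queued for a human whose decision becomes ground truth. This is a minimal illustration under assumed names (`Item`, `LabelingPipeline`, a 0.85 confidence threshold), not the workflow of any particular platform; RLHF itself goes further by training a reward model on human preferences, which is not shown here.

```python
from dataclasses import dataclass, field


@dataclass
class Item:
    item_id: str
    text: str
    model_label: str   # label proposed by the AI-assisted pre-labelling tool
    confidence: float  # the model's confidence in that label, 0.0-1.0


@dataclass
class LabelingPipeline:
    threshold: float = 0.85  # hypothetical cut-off; tune per task and modality
    ground_truth: dict[str, str] = field(default_factory=dict)
    review_queue: list[Item] = field(default_factory=list)

    def ingest(self, item: Item) -> None:
        """Accept confident AI labels; route uncertain ones to a human."""
        if item.confidence >= self.threshold:
            self.ground_truth[item.item_id] = item.model_label
        else:
            self.review_queue.append(item)

    def human_review(self, item_id: str, label: str) -> None:
        """Record the expert's label as ground truth and clear the item from the queue."""
        self.review_queue = [i for i in self.review_queue if i.item_id != item_id]
        self.ground_truth[item_id] = label


pipeline = LabelingPipeline()
pipeline.ingest(Item("r1", "Great service, will return!", "positive", 0.97))
pipeline.ingest(Item("r2", "Well, that was certainly a meal.", "positive", 0.55))
# r2 is ambiguous (possible sarcasm), so it waits for a human reviewer.
pipeline.human_review("r2", "negative")
```

The threshold is the lever that trades annotation cost against label quality: lowering it sends fewer items to humans, raising it sends more.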

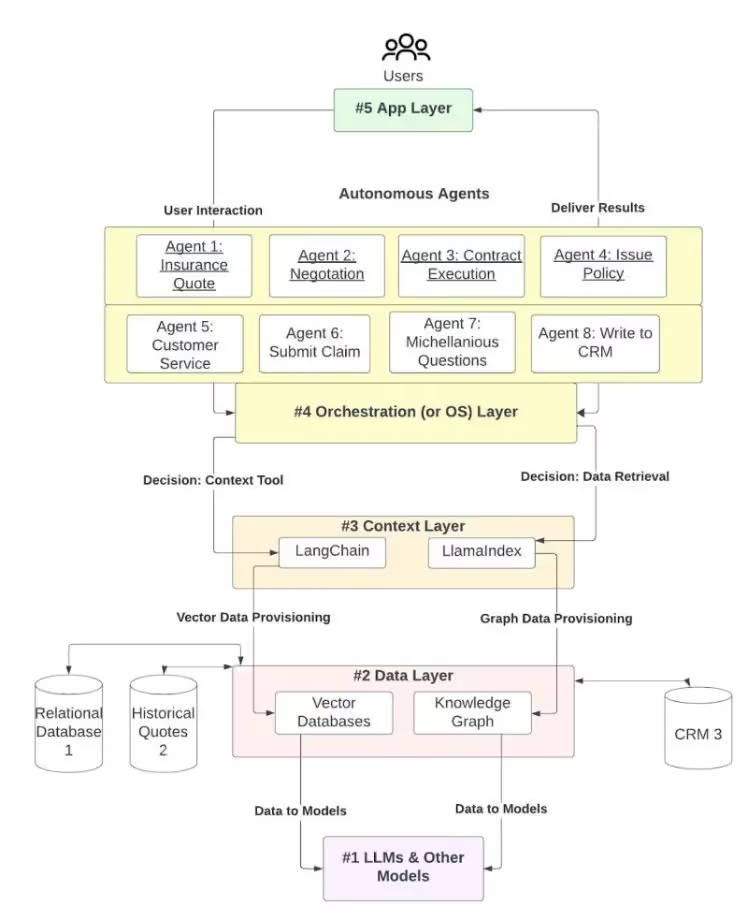

The problem with traditional automation is that it breaks down when a task involves multiple systems or requires complex reasoning, much like a kitchen hit by a sudden rush of customers.

The solution is multi-agent AI automation. A multi-agent platform acts as the maître d’ and expediter: it builds, deploys, and monitors specialised AI agents that collaboratively tackle entire service workflows, be it taking complex orders, optimising delivery routes, or generating sales proposals.

Instead of one huge brain, you now have a specialised team that might comprise one agent specialising in data extraction, another in analytics, and another in customer interaction. By offering pre-built agent templates and tools, these data platforms dramatically lower the barrier to automating processes that require high-level intelligence.

Multi-agent management moves beyond simple chatbot interactions to create an autonomous digital workforce, automating the entire flow of service with coordinated precision.
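A minimal sketch of the specialised-team idea, assuming three hypothetical agents (extraction, analytics, customer interaction) implemented as plain Python functions rather than LLM-backed agents. The orchestrator uses the simplest coordination pattern, a sequential hand-off of shared context, and records a trace for monitoring; production platforms may add planning, parallel execution, and retries on top of this.

```python
from typing import Callable

Context = dict[str, object]
Agent = Callable[[Context], Context]


def extraction_agent(ctx: Context) -> Context:
    """Hypothetical extractor: turn a raw order like 'espresso x 2' into fields."""
    item, qty = str(ctx["raw_order"]).split(" x ")
    return {**ctx, "item": item.strip(), "quantity": int(qty)}


def analytics_agent(ctx: Context) -> Context:
    """Hypothetical analyst: price the order from a toy price list."""
    prices = {"espresso": 3.0, "croissant": 2.5}
    return {**ctx, "total": prices[str(ctx["item"])] * int(ctx["quantity"])}


def customer_agent(ctx: Context) -> Context:
    """Hypothetical customer-facing agent: draft the confirmation message."""
    return {**ctx, "reply": f"{ctx['quantity']} x {ctx['item']} confirmed, total {ctx['total']:.2f}"}


def orchestrate(agents: list[Agent], ctx: Context) -> Context:
    """Pass shared context through each specialist in turn, keeping a trace for monitoring."""
    trace: list[str] = []
    for agent in agents:
        ctx = agent(ctx)
        trace.append(agent.__name__)
    return {**ctx, "trace": trace}


result = orchestrate([extraction_agent, analytics_agent, customer_agent], {"raw_order": "espresso x 2"})
print(result["reply"])  # 2 x espresso confirmed, total 6.00
```

Each agent only reads and extends the shared context, so one specialist can be swapped for another without touching the rest of the workflow.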

Navigating the data platform landscape requires asking the right questions about your data ecosystem’s needs.

In reality, many enterprises will integrate layered solutions: a strong Lakehouse base paired with specialised annotation and agentic orchestration tools, chosen according to the most immediate architectural gap.

Here are the leading platforms specialising in accelerating various components of the AI lifecycle:

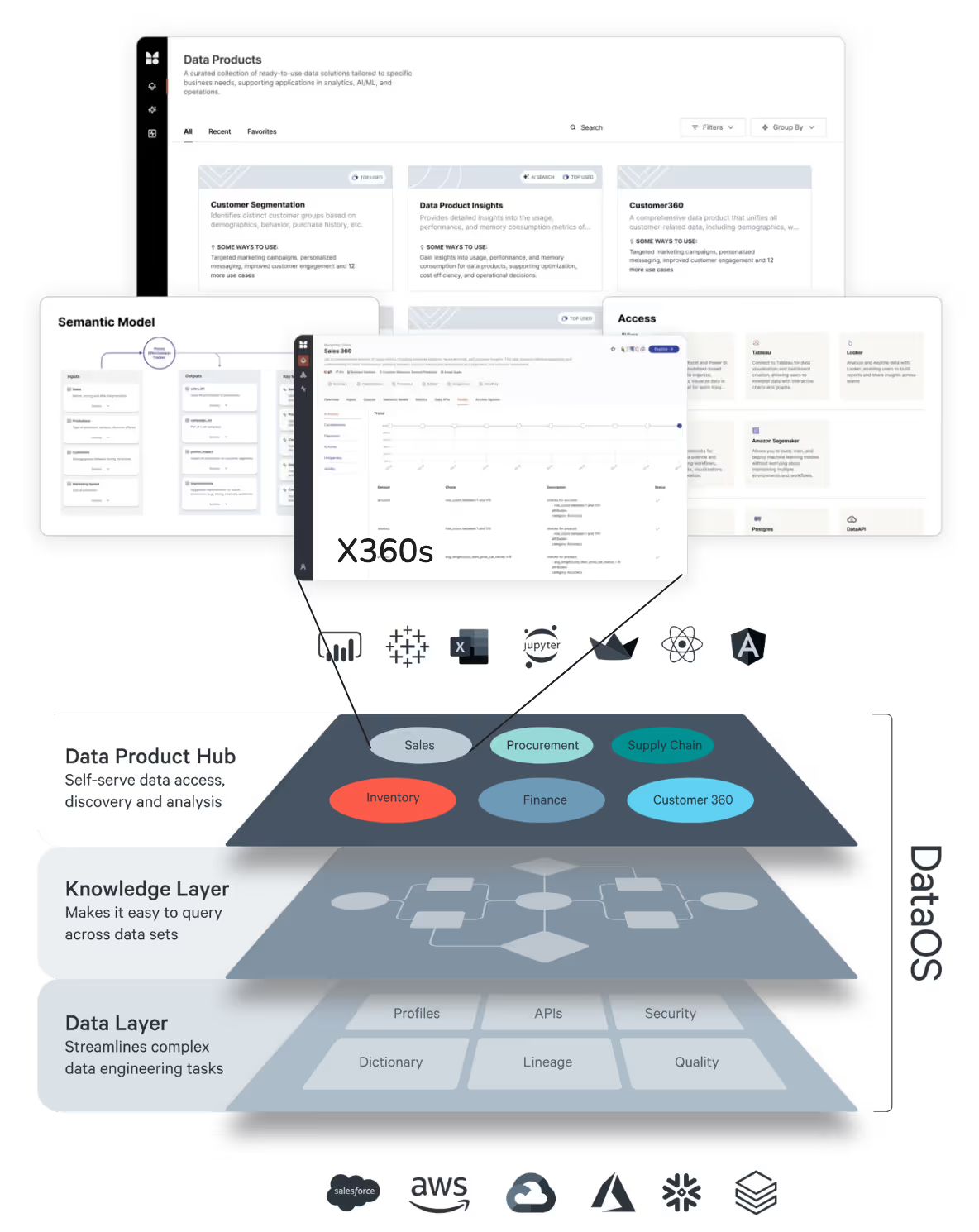

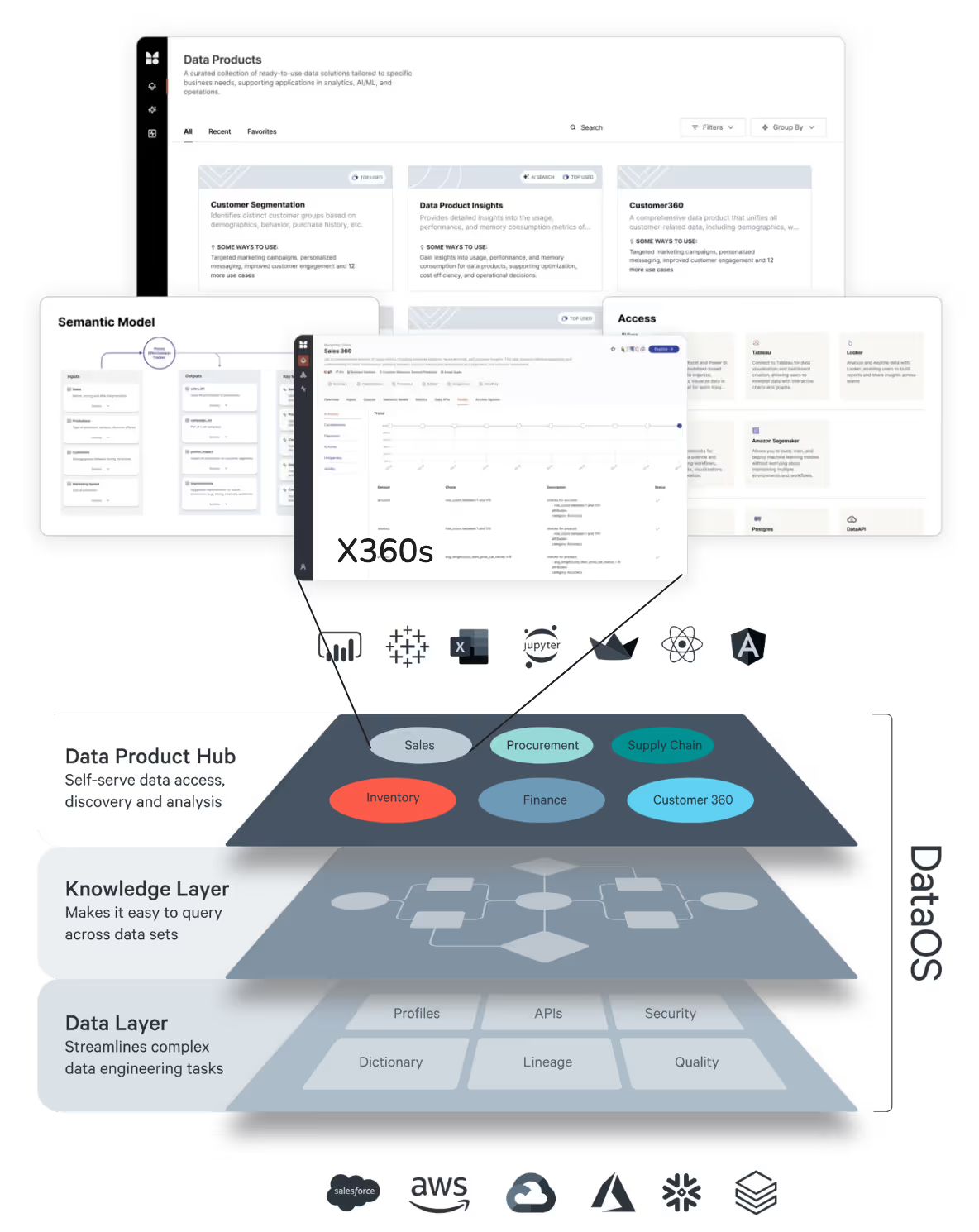

DataOS is a metric-targeted data product platform that uniquely empowers AI agents, apps, and data systems by focusing on two things:

Together, they ensure AI doesn't just compute faster, but reasons better, aligning outputs with business realities and specific business metric goals.

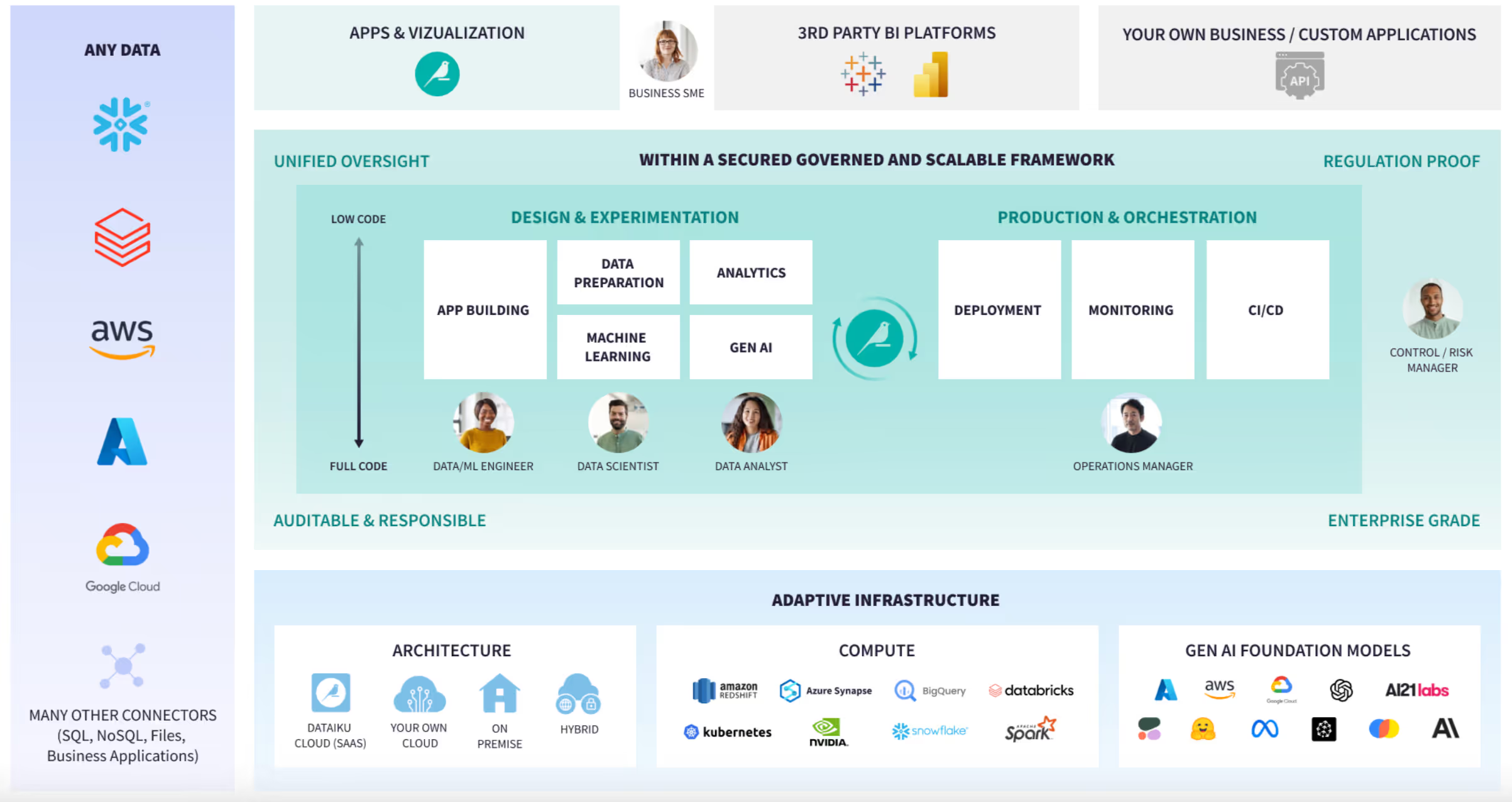

Dataiku is a centralised and collaborative data platform that aims to be an all-in-one kitchen for different data personas. With a visual interface for data preparation, AutoML capabilities, and GenAI mesh tools, it helps teams move rapidly from experimental model development to governed production deployment.

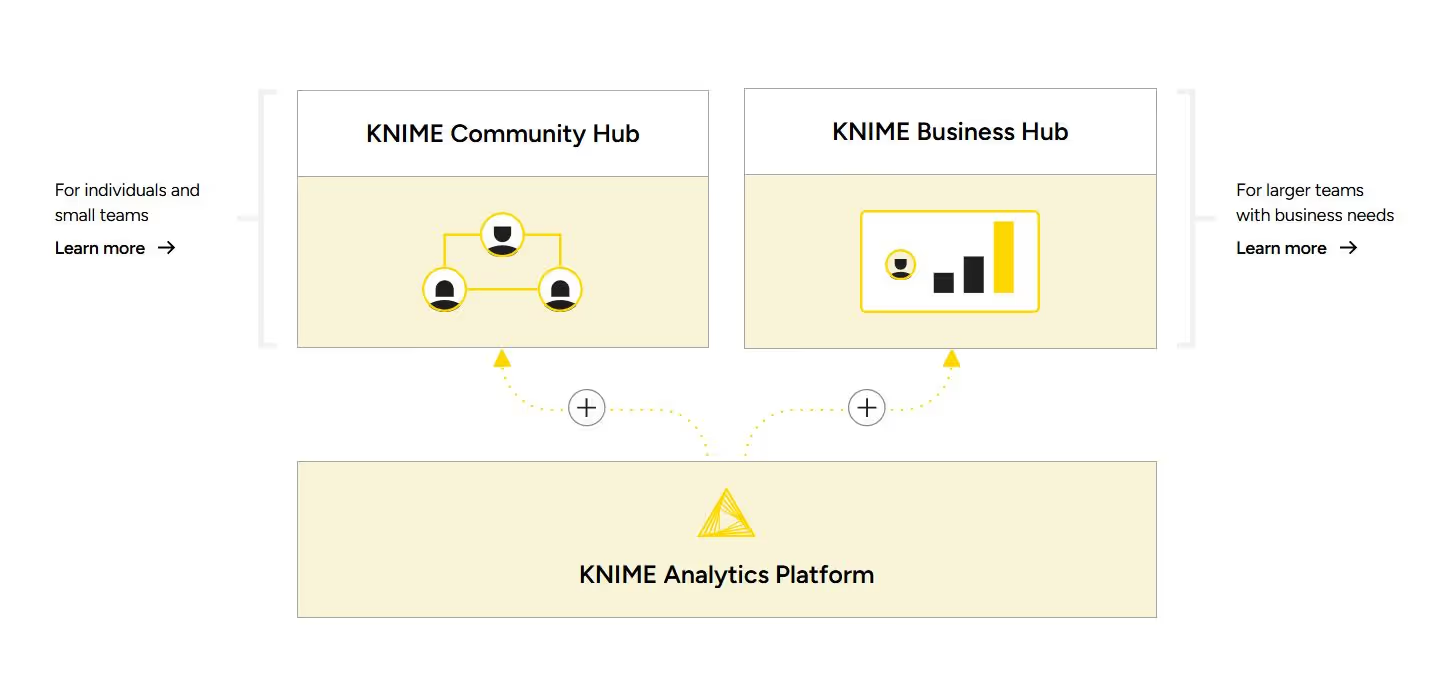

KNIME is known for its open-source foundation. It offers an intuitive, visual, low-code/no-code interface for building complex data pipelines and analytical models. It works as a flexible workbench that lets analysts and data scientists blend data and deploy solutions across the enterprise without needing extensive coding expertise.

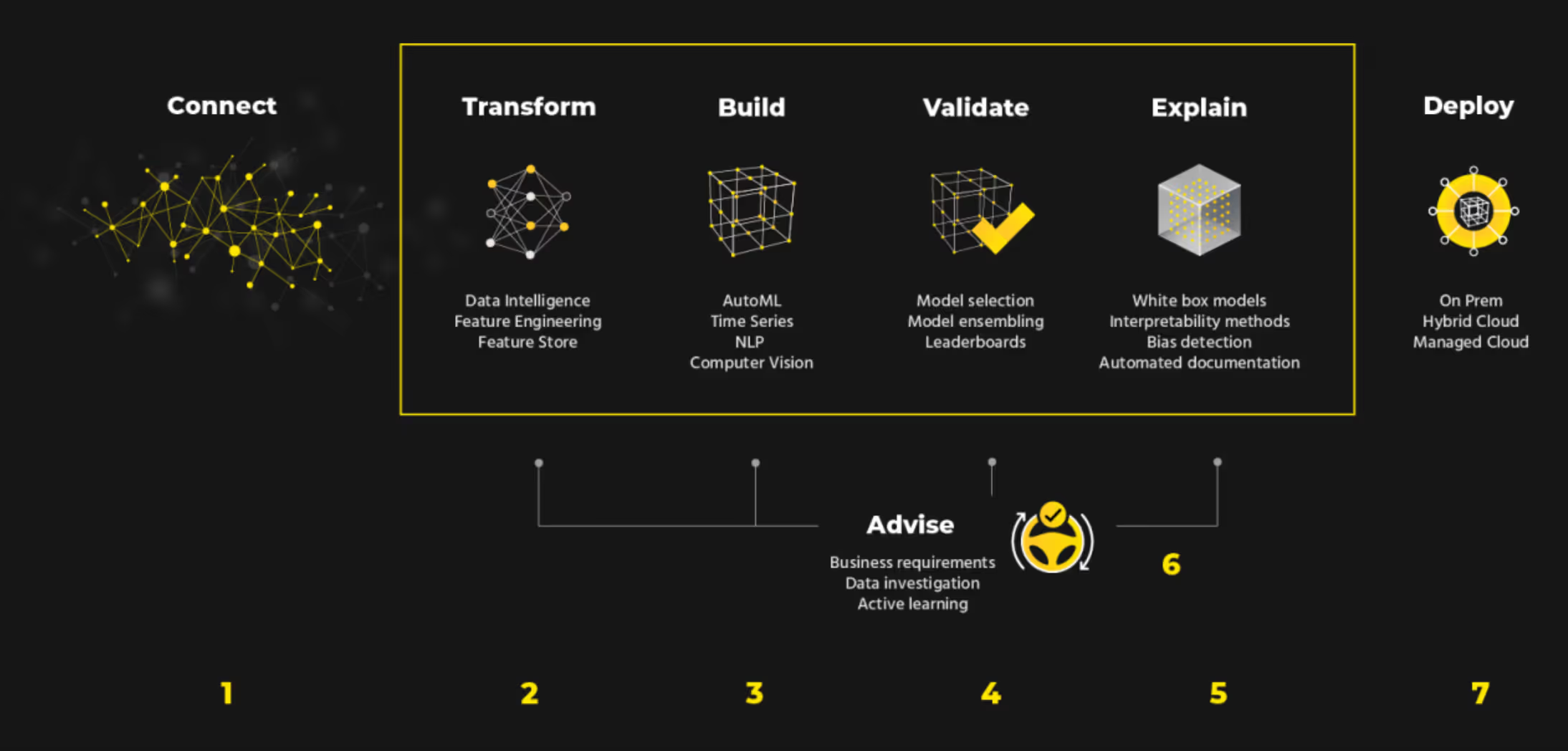

H2O.ai specialises in automated machine learning capabilities that allow users to build, test, and explain highly accurate models rapidly. The platform focuses on accelerating the core model development lifecycle via automated feature engineering, hyperparameter tuning, and Machine Learning Interpretability.

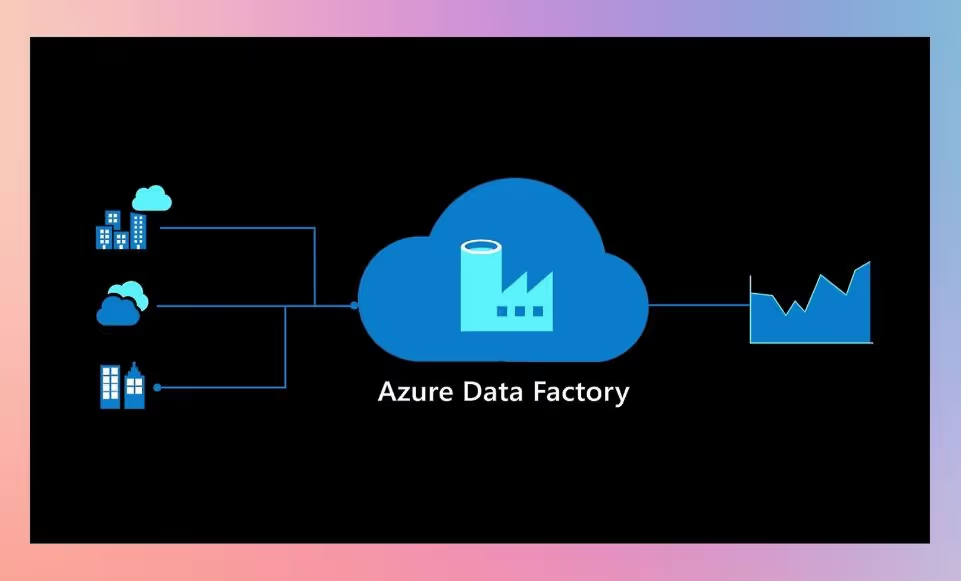

Azure Data Factory is a cloud-based data integration service designed for enterprise ETL and ELT workflows. It excels at orchestrating data movement across hybrid and multi-cloud environments, utilising a visual interface to build scalable, automated data pipelines for consumption by downstream analytics and AI services.

The modern AI supply chain demands strong enterprise data platforms that ensure quality, governance, orchestration, and compute scale. If your team is spending months on ad-hoc feature pipelines and fighting data drift, you haven't built the right kitchen. Choosing well means aligning your platform directly with your business goals.

To take the next step, we strongly encourage you to evaluate your current architecture gaps, run pilots of candidate data platforms, and assess each platform's features carefully during the proof of value. The goal is to set up the right foundations today to deliver five-star AI tomorrow.
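One way to keep a proof of value honest is to score every piloted platform against the same weighted criteria. The sketch below assumes hypothetical criteria, weights, and 1-5 pilot scores for two unnamed candidates; substitute the gaps your own architecture review surfaces.

```python
# Hypothetical evaluation criteria and weights; substitute your own priorities.
WEIGHTS: dict[str, float] = {
    "governance": 0.30,
    "data_quality": 0.25,
    "agent_orchestration": 0.20,
    "integration": 0.25,
}


def weighted_score(scores: dict[str, float], weights: dict[str, float] = WEIGHTS) -> float:
    """Combine per-criterion pilot scores (e.g. 1-5) into a single weighted score."""
    if abs(sum(weights.values()) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    missing = set(weights) - set(scores)
    if missing:
        raise ValueError(f"missing scores for: {sorted(missing)}")
    return sum(weights[c] * scores[c] for c in weights)


def rank(candidates: dict[str, dict[str, float]]) -> list[tuple[str, float]]:
    """Rank piloted platforms from highest to lowest weighted score."""
    scored = [(name, round(weighted_score(s), 2)) for name, s in candidates.items()]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Usage with made-up pilot scores for two unnamed candidates.
pilots = {
    "Platform A": {"governance": 4, "data_quality": 3, "agent_orchestration": 5, "integration": 4},
    "Platform B": {"governance": 5, "data_quality": 5, "agent_orchestration": 2, "integration": 3},
}
print(rank(pilots))
```

Making the weights explicit forces the team to agree up front on which architectural gap matters most, before any pilot results come in.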

Q1. What data platforms are used for building AI agents?

The data platform for building AI agents is usually referred to as a Multi-Agent AI Automation Platform. This system serves as the core data operating system for a digital workforce of agents. It is designed not just to host individual models but to build, deploy, and monitor specialised AI agents that can collaboratively handle entire business workflows. These platforms provide the necessary orchestration layer, context management, and tools (often including pre-built templates) to coordinate specialised agents on high-level tasks.

Q2. How do AI agents improve business automation?

AI agents improve business automation by tackling complex, multi-step processes that traditional automation often fails at. Instead of a single, massive model trying to solve everything, multi-agent systems use a team of specialised agents that coordinate with one another. This coordination allows the system to:

Essentially, they move automation from basic digital labour to autonomous digital service staff.

Q3. Which AI agent framework is the best?

There is no single "best" AI agent framework; the ideal choice depends entirely on your specific use case, technical environment, and primary goals. The tools generally fall into two categories:

The best framework for your organisation is the one that provides organisation-specific governance customisations, observability, and seamless integration with your existing data architecture and compute infrastructure.


News, Views & Conversations about Big Data, and Tech

