Download Modern Data Survey Report

Oops! Something went wrong while submitting the form.

Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community

Latest reads...

TABLE OF CONTENT

In the world of AI, Large Language Models (LLMs) are like having a super-smart, incredibly resourceful intern. They can figure out code, draft emails for operational communications, summarise documents, and even brainstorm wild ideas. The catch? Like any high-performing talent, they can get expensive and, if not managed well, can slow things down.

If your LLM-powered applications are draining your budget fast or taking their sweet time to respond, it's not an anomaly and you're not alone. The secret to unlocking their true potential, both in terms of cost and speed, lies not just in the models themselves, but in how you feed them. Eventually, environment matters more than capability.

Think of it like this: you wouldn't give a Michelin-star chef half-rotten ingredients and expect a gourmet meal on time, right? In fact, a star chef would tell you the magic IS IN THE INGREDIENT! To let them naturally flourish through the right cooking (aka the right modelling). You'd provide them with prepped, high-quality components. The same goes for LLMs.

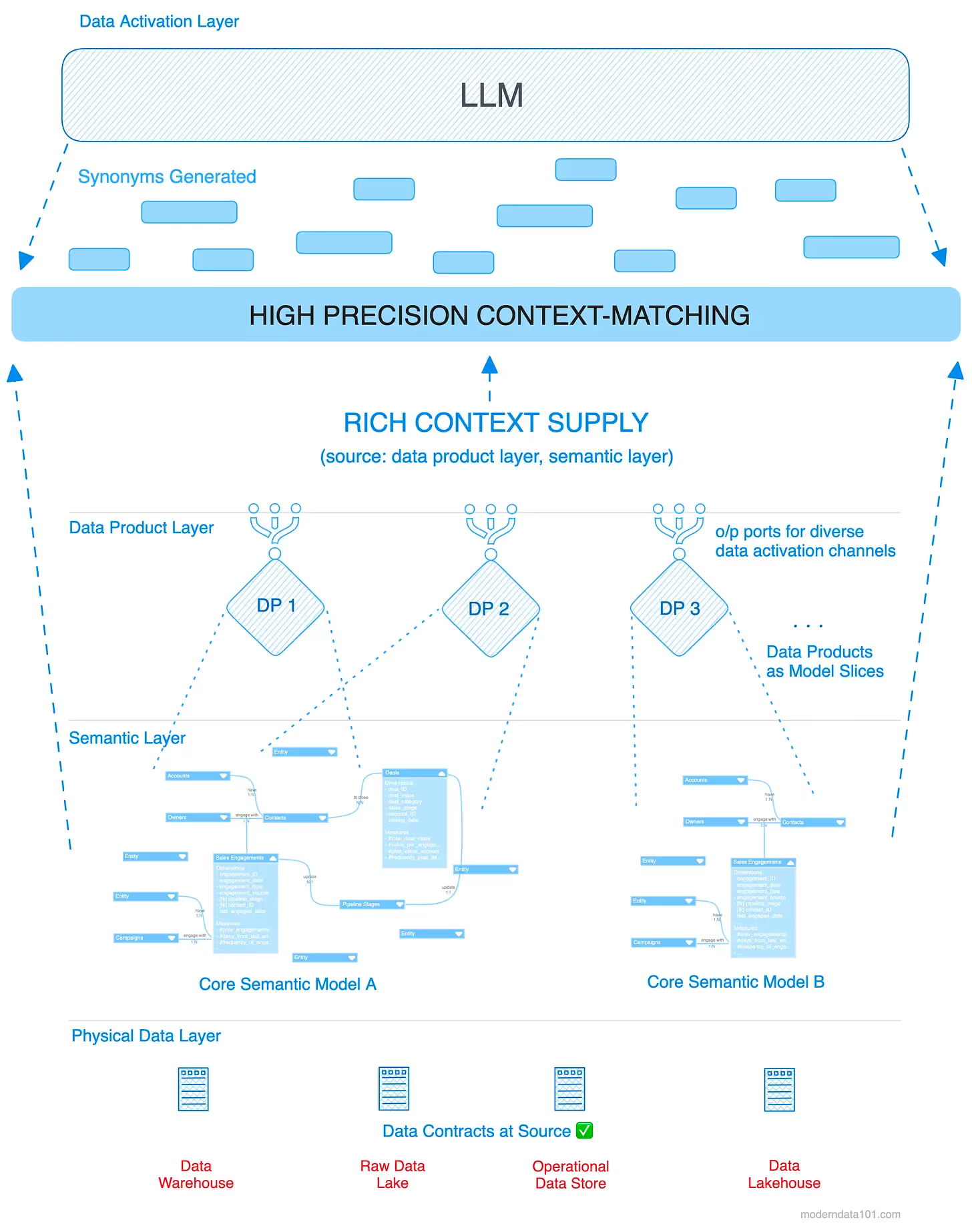

Data isn't just a foundation to kickstart any AI project; it's high-quality data as LLM fuel: the precision fuel that dictates how efficiently and effectively your LLMs run. Without smart data delivery, you’re essentially paying top dollar for an LLM to do basic data prep work.

This is where Data Products become relevant. By treating your data as if they were key ingredients, and how they're delivered to LLMs or AI models, you can drastically cut costs and ensure inference latency reduction. Here are 10 ways to do just that.

Here are 10 ways to achieve data‑product‑driven LLM optimisation, making them cheaper, faster, and smarter.

This is fundamental. Consider explaining a complex project to someone by handing them every single document, email, and sticky note ever created. All context they'd ever need right? No. They'd drown in information, not know where to start, and end up in confusion instead of clarity.

LLMs face a similar challenge when fed raw, unfiltered data.

By using **well-defined, versioned data products (**what we call versioned context delivery) ****to build curated context pipelines, you ensure your LLM consumes only what it truly needs from a dedicated, curated output port. This isn't just about tidiness; it's about reducing preprocessing time, minimising the risk of the LLM "hallucinating" due to irrelevant noise, and critically slashing your token usage. Every unnecessary word costs tokens, and tokens cost money. Less garbage in, less garbage out, less money spent on garbage. LLM cost containment success.

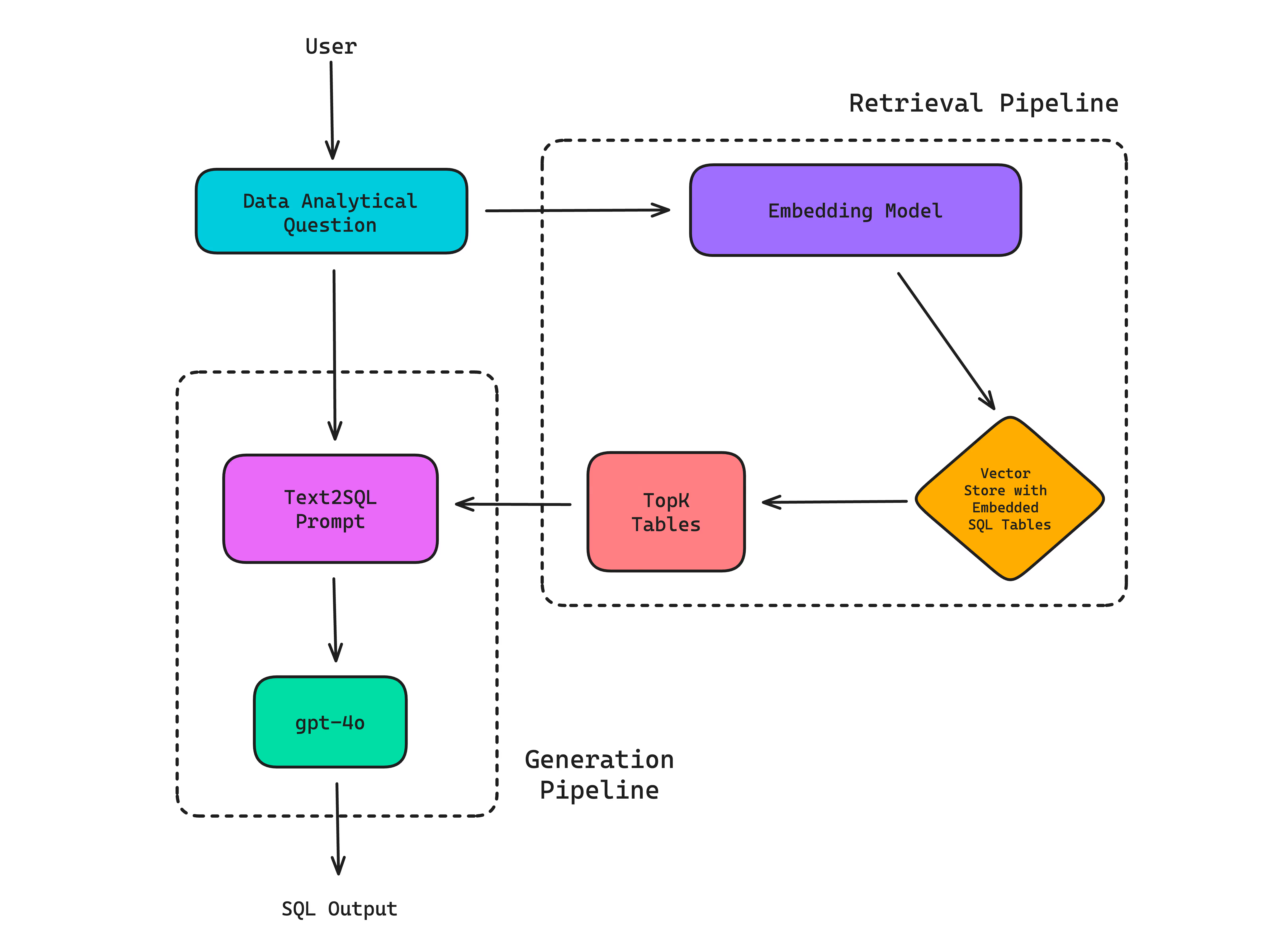

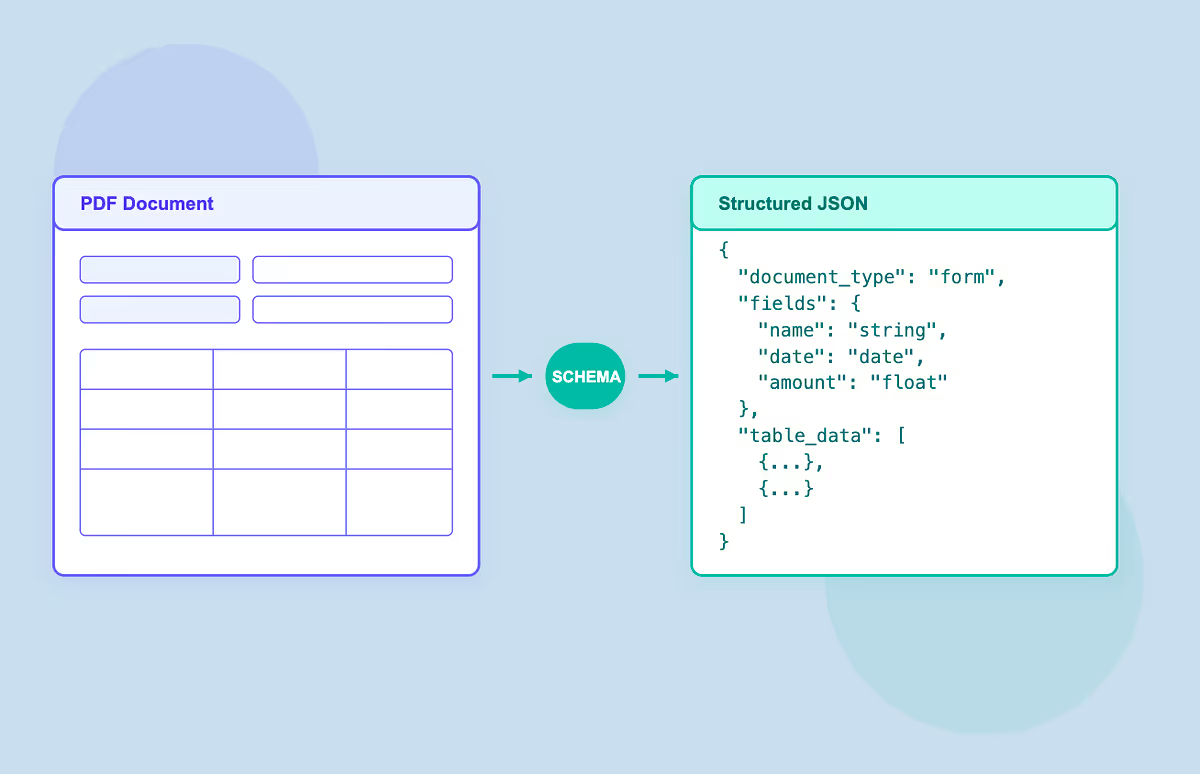

We often think of LLMs as masters of unstructured text, but that doesn't mean you should just dump raw documents on them and hope for the best. AI is unfortunately NOT a magic wand. While they can handle it, they process structured formats like JSON, Markdown blocks, or pre-computed embeddings far more efficiently. Schema-aligned prompting shows how structure really drives consistency.

When your data products deliver model context as structured input formats (observe that context is structured, data doesn't have to be), the LLM spends less effort parsing and understanding the input, and more time generating a quality response. Structured inputs mean LLMs process less junk, respond faster, and ultimately become cheaper to run.

One of the quickest ways to inflate LLM costs and dilute accuracy is to overload prompts with too much irrelevant context. It’s tempting to provide "all" the data, but it's redundant. Consider asking for directions to the nearest coffee shop and getting handed a map of the country.

By using data product output ports to serve precise, isolated slices of context (e.g., just the latest customer service notes, specific product specifications, or a subset of FAQs), the prompt length can dramatically come down. The sharpness and precision in the translated query directly translate to fewer tokens consumed, preserving accuracy by ensuring the LLM focuses only on the most relevant information. This keeps your LLM concise, accurate, and your bill significantly lower, enabling token savings at scale.

If every user or application sends prompts to your LLM in a slightly different format, you're essentially asking the LLM to relearn your query structure every single time. It's inefficient and error-prone.

By designing prompt templates that align directly with the structure (schemas) of your data product’s outputs, you create a consistent, predictable interface. This standardisation reduces variability, dramatically improves the reliability of responses, and critically, enables caching for common queries (more on caching soon!). Think of it as having a standardised order form for your LLM. It makes processing faster, more predictable, and cuts down on frustrating "misunderstandings."

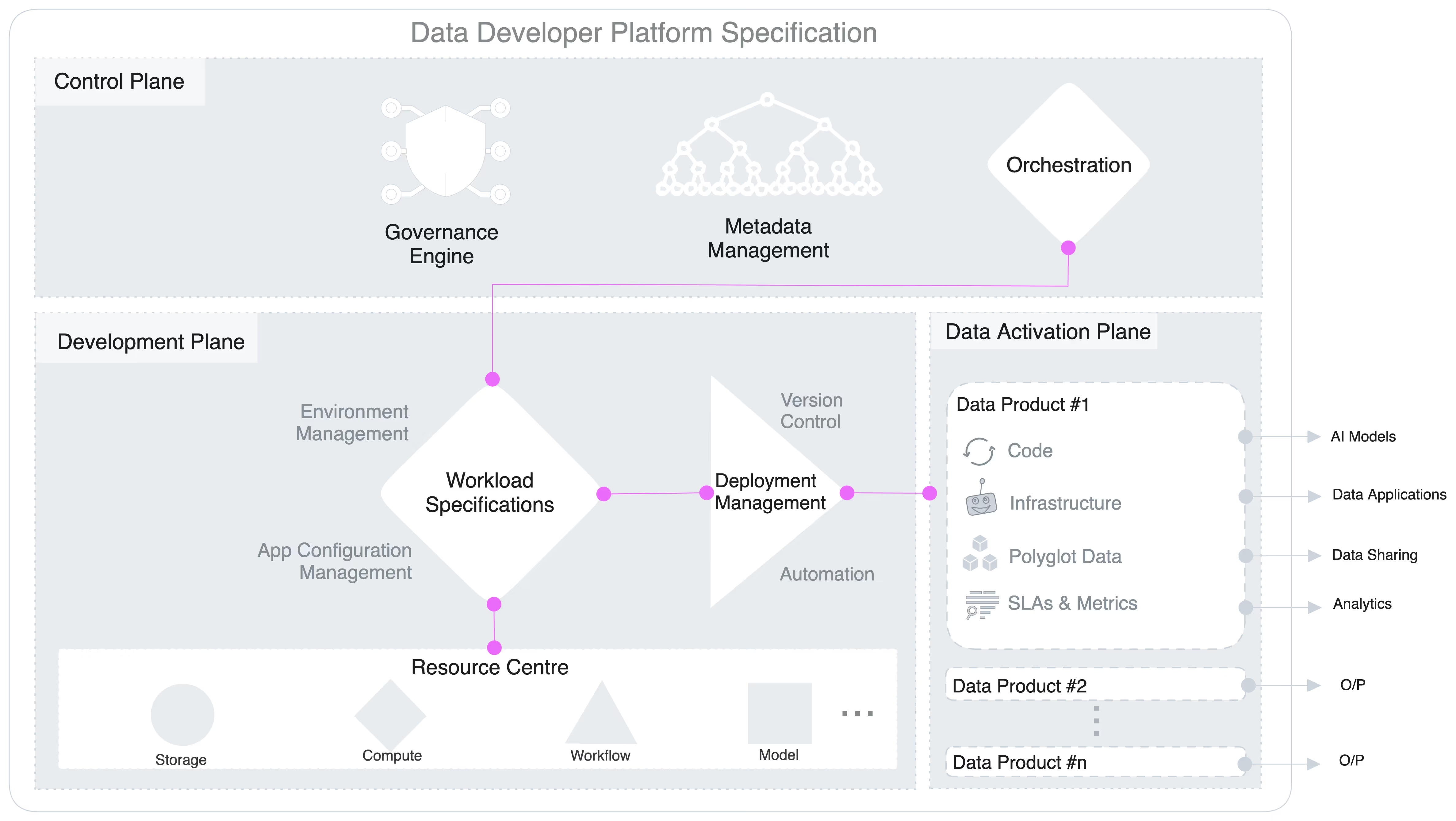

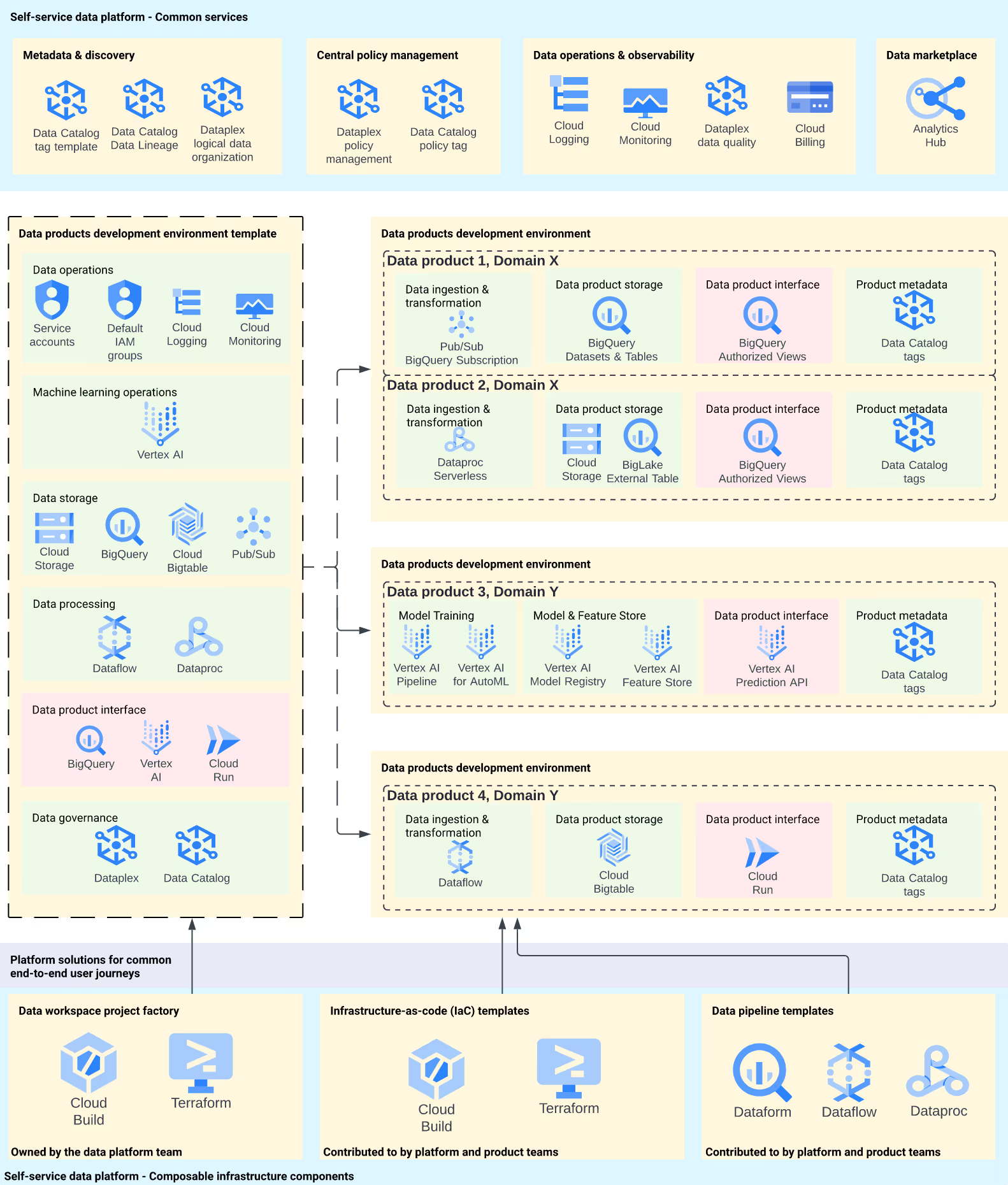

Data Engineers often become bottlenecks, manually prepping and integrating data for new LLM experiments. This is slow and expensive, tying up valuable engineering time. By providing a **self-service platform that allows users (data scientists, analysts, business users) to intuitively choose which data product and which specific output port to pull context from**, you democratise data access for LLMs

No engineering required for every new experiment! This empowers domain experts to quickly iterate and test ideas, making the experimentation phase significantly cheaper and faster, and ultimately accelerating time-to-value for new AI applications.

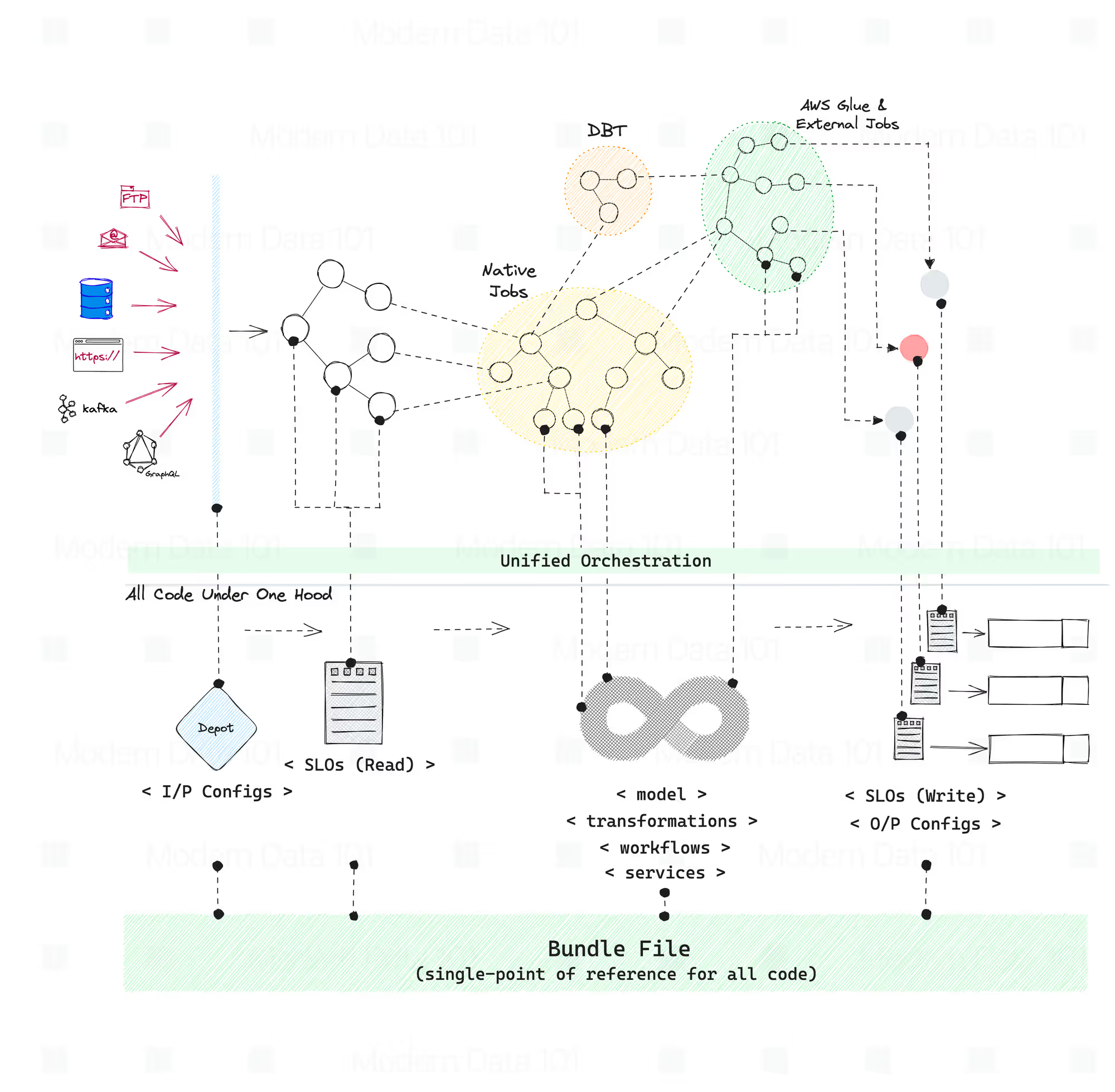

If different teams are each writing their own scripts to chunk documents, embed text, then call an LLM API, you're looking at a lot of duplicated effort and operational overhead. You’ll hit AI performance bottlenecks as you scale. This is where the magic of a platform-driven approach truly shines.

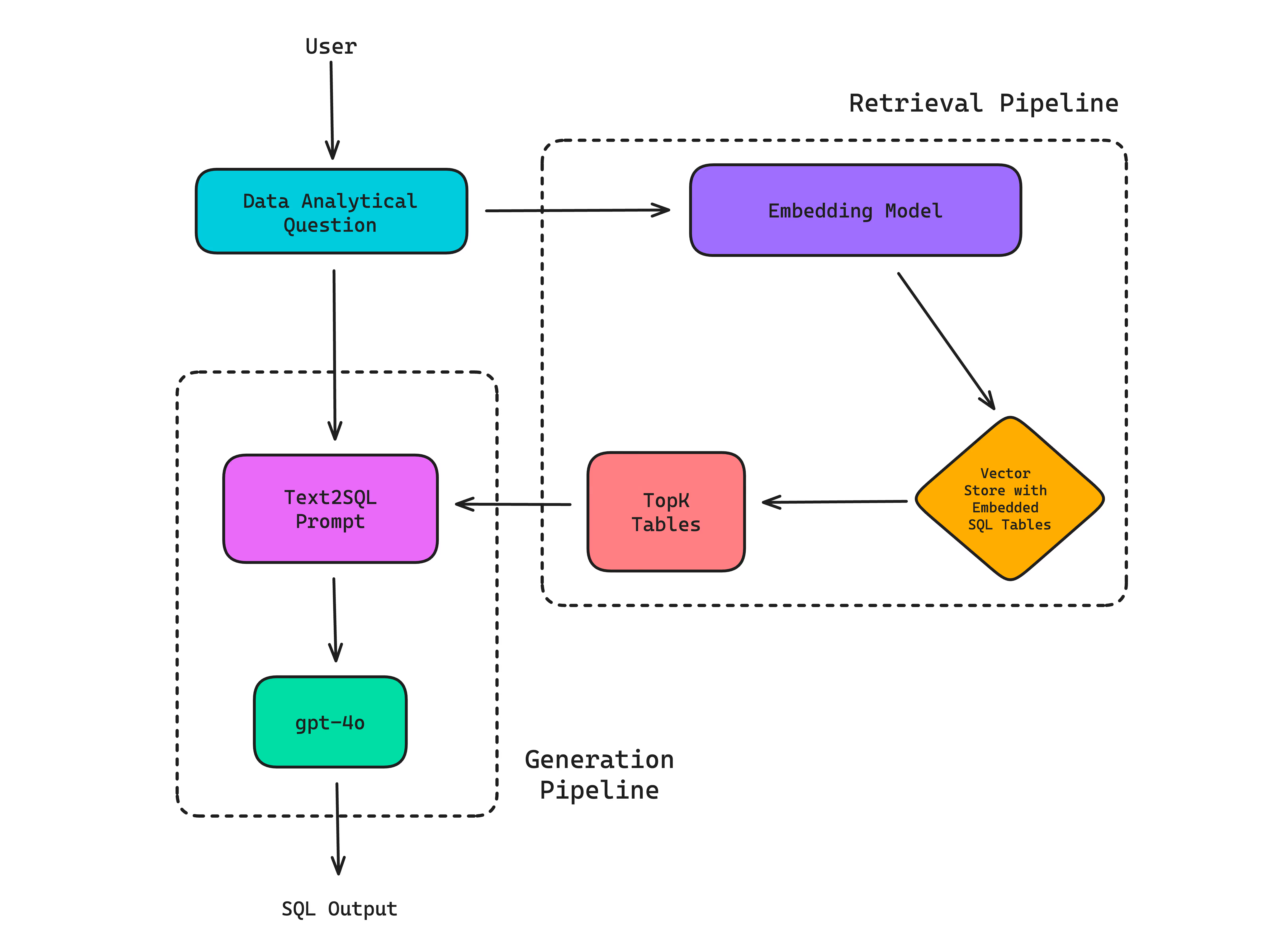

Use your data platform to create shareable, reusable preprocessing pipelines. In other words, callable AI pipelines that encapsulate the full LLM workflow: input port → necessary data transformation/chunking → LLM prompt construction → LLM API call → response processing. These callable AI pipelines help mitigate AI performance bottlenecks, avoid repetitive work, standardise best practices, and drastically reduce operational overhead. It’s like having pre-built, optimised workflows for common LLM tasks, letting teams focus on unique business logic rather than re-inventing the wheel every time.

Some parts of working with LLMs are inherently expensive, especially if they involve large context windows or complex reasoning steps. Think of it like paying a premium for a very specific, rare ingredient every time you cook, even if you only need a tiny bit of it.

By integrating semantic result caching at the platform level, especially for expensive LLM sub-tasks like document chunking, embedding generation, or even common query responses, you can slash recurring costs. If the LLM has already processed and embedded a specific document, cache that embedding! If a common query has a stable answer, cache the response! This significantly reduces redundant LLM calls and token usage, leading to massive cost savings over time.

LLMs are incredible at natural language understanding and generation, but they're not always the cheapest or fastest tool for every job. Asking an LLM to do simple arithmetic or perform complex database joins is like hiring a rocket scientist to sort your mail. Obviously they can do it, but it’s overkill and overpriced.

Offload simpler logic to other, more efficient components within your data platform. Use SQL for precise factual retrieval, heuristics for filtering, or traditional code for rule-based logic. Reserve LLMs for where their true power lies: complex reasoning, nuanced natural language processing, and creative generation. This, not using a sledgehammer to crack a nut approach ensures you are using LLMs only when their unique capabilities are truly needed. This saves significant costs improving overall system performance.

Your LLM applications don't live in a vacuum. User interactions provide a goldmine of information about what's working and what's not. Ignoring this is like building a product without ever talking to your customers.

Instrument feedback loops directly into your data products and LLM integration, enabling feedback-driven schema evolution that continually refines output quality. This feedback, be it the user upvotes/downvotes, explicit corrections, or implicit behavioural signals, can be used to fine-tune how the data product outputs are structured. The continuous feedback loop improves both the speed and the trustworthiness of your LLM responses while also ensuring that your AI is getting smarter and more efficient.

Finally, getting LLMs cheap and fast isn't a one-and-done deal, it's an ongoing journey. Without visibility, costs can quietly swell and performance can degrade.

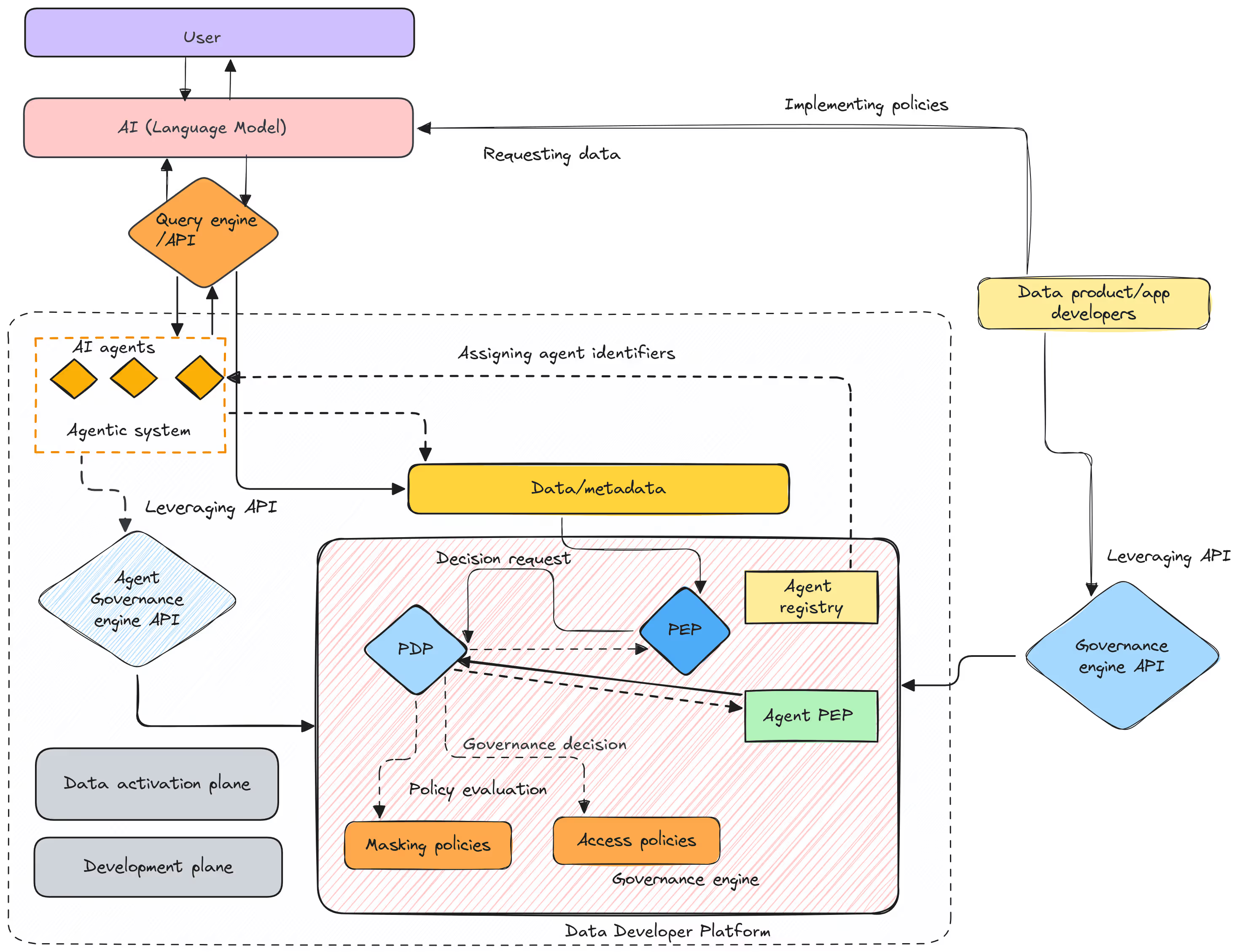

Building platform governance dashboards that track LLM usage by data product, team, and specific use case gives you the transparency needed to manage your LLM budget bloat. This also enables AI governance automation, allowing platform teams to identify expensive usage patterns, guide users toward faster, cheaper configurations, and enforce policies for optimal LLM consumption.

The temptation to adopt an LLM is undeniable, and it does go with the trend, but its real-world impact hinges on managing the operational costs and speed. This shift to AI-native applications is an evolution in our data strategy too. By leveraging the principles of Data Products, organisations can fundamentally transform how they interact with LLMs.

From serving cleaner inputs and structuring data intelligently, to enabling self-service, bundling workflows, and implementing smart caching and governance, each of these 10 strategies contributes to a leaner, faster, and more effective LLM deployment. It's not just about getting answers, it's about advancing to smart answers with speed and affordability.

Data Products can route queries to the most cost-effective LLM based on task complexity, automatically compressing and refining the prompts to minimise token consumption, implementing caching to reuse past responses for common queries, leverage RAG to provide precise, relevant context which reduces the amount of data the LLM needs to process, and enable the fine-tuning of smaller, specialised models that are cheaper to operate, Data Products can significantly reduce the cost of running LLMs.

A self-service platform provides intuitive interfaces for non-technical users to access, configure, and manage LLM resources without relying on data scientists or engineers. This includes features like simplified prompt engineering interfaces to easily test and iterate on prompts, access to various pre-trained or fine-tuned models for specific tasks, and tools for monitoring model performance and cost in real-time. By democratising access and control, a self-service platform accelerates experimentation, fosters quicker iteration on prompts and models, and allows teams to rapidly deploy and scale LLM-powered applications.

Curated data provides precise context, reduces the noise, hence minimising the chances of the LLM going off-topic, allowing the model to focus its processing power on generating accurate and concise responses. It is specifically prepared to be clean, relevant, and structured, directly addressing the LLM's needs. Raw inputs often contain irrelevant information, or unstructured formats that can confuse the model, leading to hallucination, inaccurate outputs, and increased processing time and cost as the LLM struggles to sift through unnecessary data.

Connect with a global community of data experts to share and learn about data products, data platforms, and all things modern data! Subscribe to moderndata101.com for a host of other resources on Data Product management and more!

.avif)

📒 A Customisable Copy of the Data Product Playbook ↗️

🎬 Tune in to the Weekly Newsletter from Industry Experts ↗️

♼ Quarterly State of Data Products ↗️

🗞️ A Dedicated Feed for All Things Data ↗️

📖 End-to-End Modules with Actionable Insights ↗️

*Managed by the team at Modern

.avif)

News, Views & Conversations about Big Data, and Tech

News, Views & Conversations about Big Data, and Tech

Find more community resources

Find all things data products, be it strategy, implementation, or a directory of top data product experts & their insights to learn from.

Connect with the minds shaping the future of data. Modern Data 101 is your gateway to share ideas and build relationships that drive innovation.

Showcase your expertise and stand out in a community of like-minded professionals. Share your journey, insights, and solutions with peers and industry leaders.