
Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community


Adoption of Artificial Intelligence (AI) is increasing rapidly across the globe. A McKinsey survey suggests that 78% of enterprises use AI in at least one business function, and technology leaders have started placing AI at the centre of their digital transformation strategies.

Many organisations hit dead ends not because they lack trusted AI models, frameworks, or tools, but because they fall behind in making their data ready for AI. AI depends on data that is governed, accessible, high in quality, and scalable. Without these properties, even the most advanced algorithms fail to deliver reliable results.

The message is quite evident here. For AI to be successful, data readiness is crucial.

For executives across industries today, the key question is no longer whether to adopt AI, but whether their data is ready for it. The focus shifts from model experimentation to building the data foundations that determine whether those models succeed.

This brings a big question into the picture:

What is data readiness for AI?

Data readiness for AI refers to the state where organisational data is consistent, accurate, governed, scalable, and accessible enough to support advanced analytics and machine learning at scale. It goes beyond collecting data: the right data must always be trustworthy, available, and explainable, in a form that AI systems can easily consume.

Data quality is directly linked to AI system outcomes. Poor data produces unstable, biased, and misleading predictions, which erodes confidence in AI-driven decisions. High-quality, well-governed data, on the other hand, ensures models are not just accurate but also resilient, explainable, and trusted across the enterprise.
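To make the link between data quality and model readiness concrete, here is a minimal sketch of a quality gate that runs before a dataset is handed to training. The function names, the sample rows, and the thresholds are all hypothetical and chosen purely for illustration; a real setup would likely measure more dimensions (freshness, validity, drift) and use thresholds agreed with the owning team.

```python
from dataclasses import dataclass


@dataclass
class QualityReport:
    row_count: int
    null_rate: float       # fraction of rows missing at least one required field
    duplicate_rate: float  # fraction of rows whose key repeats an earlier row


def assess_quality(rows, key, required):
    """Compute simple completeness and uniqueness metrics for a list of dict rows."""
    total = len(rows)
    if total == 0:
        return QualityReport(0, 0.0, 0.0)
    missing = sum(1 for r in rows if any(r.get(f) is None for f in required))
    seen = set()
    duplicates = 0
    for r in rows:
        k = r.get(key)
        if k in seen:
            duplicates += 1
        else:
            seen.add(k)
    return QualityReport(total, missing / total, duplicates / total)


def is_ai_ready(report, max_null_rate=0.05, max_duplicate_rate=0.01):
    """Hypothetical gate: the default thresholds are illustrative, not recommendations."""
    return (
        report.row_count > 0
        and report.null_rate <= max_null_rate
        and report.duplicate_rate <= max_duplicate_rate
    )


# Illustrative sample data (invented for this sketch).
rows = [
    {"customer_id": 1, "country": "DE", "spend": 120.0},
    {"customer_id": 2, "country": None, "spend": 80.0},
    {"customer_id": 2, "country": "FR", "spend": 80.0},
    {"customer_id": 3, "country": "US", "spend": 45.5},
]

report = assess_quality(rows, key="customer_id", required=["country", "spend"])
print(report)
print("ready for training:", is_ai_ready(report))
```

With one row missing a country and one repeated `customer_id` out of four rows, both rates come out at 0.25, so the gate rejects the dataset before it can reach a model.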

Complex legacy systems are easy to recognise by their ageing infrastructure, tangled architectures, and outdated software that lacks modern features and compatibility, all of which make seamless integration with modern AI difficult.

There is a sizeable gap between legacy data environments and the requirements of modern, scalable AI. Fragmented governance, data silos, and manual approval processes slow down access. Teams are overwhelmed by duplication and struggle to build AI-ready pipelines, often rebuilding the same pipeline for every business problem or new initiative.

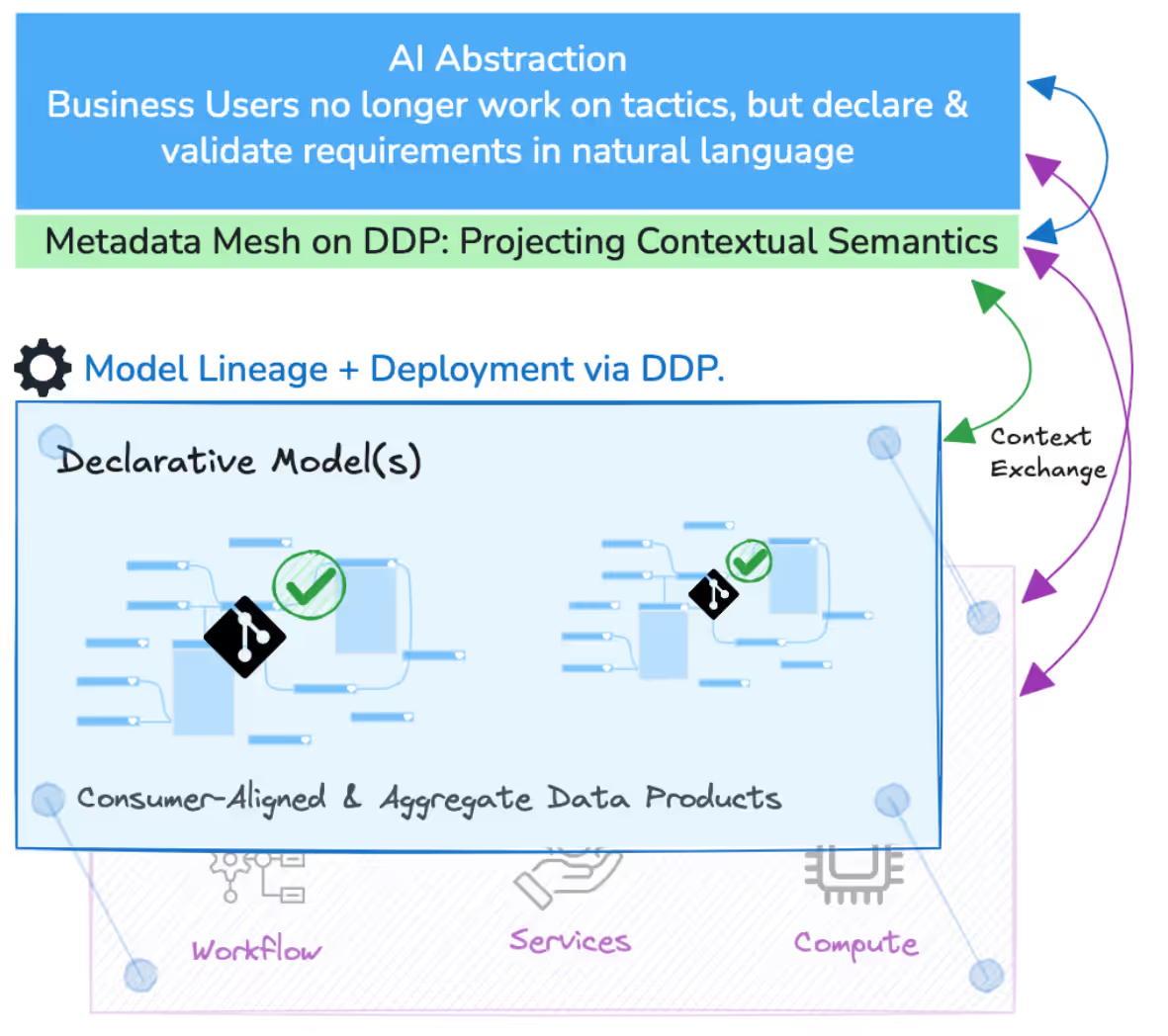

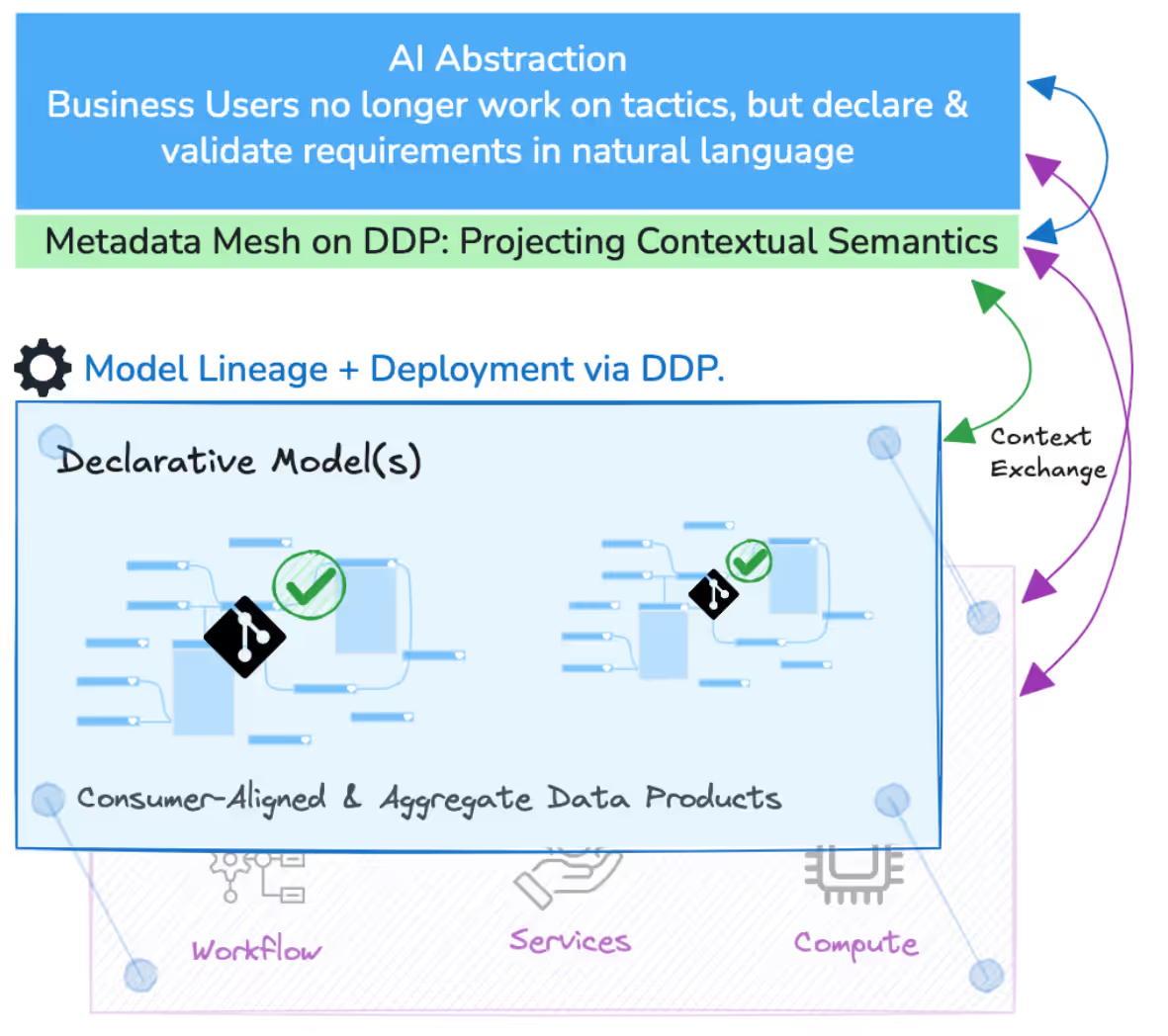

In contrast, data ecosystems built with AI at the centre revolve around composable, reusable data products rather than ETL jobs in monolithic infrastructure. These data products are modular, versioned, and governed, which makes them discoverable and usable across different AI use cases. This approach reduces duplication, embeds quality and governance into pipelines, and enforces semantic consistency.

Before scaling Artificial Intelligence, organisations need a foundation of well-organised, explainable, and reusable data assets. A few factors determine whether AI initiatives deliver sustainable value or collapse under fragile data practices.

Explainable, high-quality data is the foundation for trustworthy AI outcomes: AI models are only as solid as the data they consume. Without proper governance, models produce errors, inherit biases, and expose enterprises to compliance risks. By treating data assets as data products, organisations can enforce lineage, quality, and self-service data access, which in turn supports traceability, explainability, and responsible AI.

Data without context is of little use to AI. Metadata is the additional layer that makes data discoverable, reusable, and explainable. Structured metadata captures essential details such as lineage, business definitions, usage patterns, and data quality scores, so that both AI systems and humans can interpret information correctly. When AI pipelines can query metadata automatically, friction drops and development cycles accelerate.
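As a rough illustration of a pipeline querying metadata automatically, the sketch below models a tiny in-memory catalogue. The `DatasetMetadata` fields mirror the details mentioned above (business definition, lineage, quality score), but the class, the catalogue entries, and `find_datasets` are hypothetical stand-ins for whatever metadata store an organisation actually runs.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class DatasetMetadata:
    name: str
    business_definition: str
    upstream_sources: tuple   # lineage: where this dataset comes from
    quality_score: float      # 0.0 to 1.0, however the organisation defines it
    tags: frozenset = field(default_factory=frozenset)


# Hypothetical catalogue entries, invented for this sketch.
CATALOG = [
    DatasetMetadata(
        name="customer_360",
        business_definition="One row per active customer with lifetime spend.",
        upstream_sources=("crm.accounts", "billing.invoices"),
        quality_score=0.97,
        tags=frozenset({"customer", "churn"}),
    ),
    DatasetMetadata(
        name="web_sessions_raw",
        business_definition="Unfiltered clickstream events.",
        upstream_sources=("web.events",),
        quality_score=0.62,
        tags=frozenset({"customer", "behaviour"}),
    ),
    DatasetMetadata(
        name="support_tickets",
        business_definition="One row per support ticket with resolution time.",
        upstream_sources=("helpdesk.tickets",),
        quality_score=0.91,
        tags=frozenset({"churn"}),
    ),
]


def find_datasets(catalog, tag, min_quality):
    """Return datasets carrying `tag` at or above `min_quality`, best first."""
    matches = [m for m in catalog if tag in m.tags and m.quality_score >= min_quality]
    return sorted(matches, key=lambda m: m.quality_score, reverse=True)


# A training pipeline could pick its inputs from metadata instead of hard-coded paths.
for meta in find_datasets(CATALOG, tag="churn", min_quality=0.9):
    print(meta.name, meta.quality_score, meta.upstream_sources)
```

The point of the design is that the pipeline states what it needs (a topic and a minimum quality level) and leaves the choice of concrete datasets to the catalogue.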

Lineage management lets organisations map the entire data journey from source to model input, offering complete visibility into transformations. This matters for explainability, as stakeholders want to understand what sits behind AI-driven decisions. Solid data lineage also supports auditability, compliance, and impact analysis, reducing the risk of unexpected drift in AI models.
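One simple way to picture lineage is as a directed graph from sources to model inputs. The sketch below assumes a hand-written graph with invented asset names; in practice the edges would come from a lineage or catalogue tool. Walking the graph downstream gives a basic impact analysis, and walking it upstream answers the question of which sources fed a given model input.

```python
from collections import deque

# Edges point from an upstream asset to the assets derived from it.
# All asset names are hypothetical.
LINEAGE = {
    "crm.accounts": ["staging.customers"],
    "billing.invoices": ["staging.revenue"],
    "staging.customers": ["features.customer_360"],
    "staging.revenue": ["features.customer_360"],
    "features.customer_360": ["models.churn_input"],
}


def downstream(graph, start):
    """Breadth-first walk: every asset affected by a change to `start`."""
    seen = set()
    queue = deque([start])
    while queue:
        node = queue.popleft()
        for child in graph.get(node, []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen


def upstream(graph, target):
    """Every asset that contributes to `target`, found by walking reversed edges."""
    reverse = {}
    for parent, children in graph.items():
        for child in children:
            reverse.setdefault(child, []).append(parent)
    return downstream(reverse, target)


# Impact analysis: what breaks if the invoices table changes?
print(sorted(downstream(LINEAGE, "billing.invoices")))

# Explainability: which sources ultimately feed the churn model input?
print(sorted(upstream(LINEAGE, "models.churn_input")))
```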

Redundant data is one of the most significant barriers to scaling AI-ready datasets for enterprises and teams. When every AI use case starts from scratch, teams fall into a never-ending cycle of POCs that never reach production. When data is designed as reusable products, teams can adopt a build-once, use-everywhere philosophy. This accelerates the transition from POCs to repeatable, production-ready workflows and establishes scalable value throughout the organisation.

Centralised data ownership creates bottlenecks and a disconnect between data producers and AI consumers. To scale effectively, organisations should make domains the custodians of their own data. Domains understand the nuances, context, and quality requirements of the data they generate, which puts them in the best position to keep it accurate and relevant.

💡 Related Reads:

- Universal Truths of How Data Responsibilities Work Across Organisations

- Data Governance 3.0: Harnessing the Partnership Between Governance and AI Innovation

Enterprises looking to accelerate their AI initiatives tend to ignore a fundamental reality: AI can’t function on a weak foundation. When data readiness is overlooked, organisations end up with work that hinders progress and innovation.

Efforts get siloed as AI, product, and analytics teams each build the same datasets, multiplying effort for no added value. This leads to conflicting metrics, where each team reports a different “output”. The resulting mistrust undermines confidence in the numbers used for strategic decision-making.

At the same time, engineering bottlenecks slow progress, as each new dataset needs manual work, which delays experimentation and takes the agility out of workflows. The lag also hurts data readiness for AI, as models keep getting trained on inconsistent, low-quality, and poorly prepared data.

One of the biggest losses here is opportunity cost. Where models could be contributing to innovation, weeks and months are lost just preparing the right data. Moving from POC to production becomes a distant dream.

An enterprise aspiring to scale Artificial Intelligence cannot afford to treat data as an afterthought. Instead, it needs to adopt a Data as a Product mindset, where data is packaged as usable, reusable, and governed assets with clearly defined policy controls and ownership.

This Data as a Product approach combines the key elements of AI readiness: metadata adds context, governance brings compliance and trust, reusability cuts redundancy, and domain ownership ensures accountability.
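The sketch below shows one possible way to express these elements in code: a data product descriptor that carries its own context, owner, version, and usage policy, plus a small registry that lets teams publish once and reuse everywhere. The `DataProduct` and `ProductRegistry` classes, their fields, and the example values are all hypothetical and do not describe any specific platform's API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DataProduct:
    name: str
    version: str                 # versioned, so consumers can pin what they depend on
    owner_domain: str            # domain ownership: who is accountable
    description: str             # metadata: business context
    allowed_purposes: frozenset  # governance: what the data may be used for
    upstream_sources: tuple      # lineage: where the data comes from

    def can_be_used_for(self, purpose):
        """Policy check a consumer runs before reading the product."""
        return purpose in self.allowed_purposes


class ProductRegistry:
    """In-memory registry: publish once, look up from any use case."""

    def __init__(self):
        self._products = {}

    def publish(self, product):
        key = (product.name, product.version)
        if key in self._products:
            # Published versions are immutable; changes require a new version.
            raise ValueError(f"{product.name} {product.version} is already published")
        self._products[key] = product

    def get(self, name, version):
        return self._products[(name, version)]


registry = ProductRegistry()
registry.publish(
    DataProduct(
        name="customer_360",
        version="1.2.0",
        owner_domain="customer-success",
        description="One row per active customer with lifetime spend.",
        allowed_purposes=frozenset({"churn_model", "retention_dashboard"}),
        upstream_sources=("crm.accounts", "billing.invoices"),
    )
)

product = registry.get("customer_360", "1.2.0")
print(product.owner_domain)
print(product.can_be_used_for("churn_model"))
print(product.can_be_used_for("marketing_export"))
```

Refusing to overwrite a published version is a deliberate choice in this sketch: a model trained against `1.2.0` keeps reading the same definition, and any change to the product is visible as a new version.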

Unlike traditional, project-driven pipelines, the product mindset keeps scalability, consistency, and reliability front and centre. This shift allows AI-ready data to be adopted sustainably across the enterprise.

Most organisations struggle with AI adoption not because they lack the right tools, but because they don’t know how to prepare data for AI models. Fragmented governance, siloed datasets, and inconsistent pipelines prevent AI from scaling beyond the pilot stage. Building an AI enablement mechanism right at the data layer becomes key, with governance, lineage, and quality embedded by design.

A product-thinking mindset can be the pivot here, leading to a future where AI initiatives don’t stall at the pilot stage but allow organisations to scale with confidence!


Simran is a content marketing & SEO specialist.