Download Modern Data Survey Report

Oops! Something went wrong while submitting the form.

Facilitated by The Modern Data Company in collaboration with the Modern Data 101 Community

Latest reads...

TABLE OF CONTENT

Last updated on: 12th December 2025

Data engineers have invested decades into their practices, yet DE teams still hours fighting inconsistent environments, brittle configs, and manual fixes that don’t scale. The pain point of fragile pipelines, high operational overhead, and wasted time chasing environment drift instead of delivering insights is still intense across industries.

Infrastructure as Code (IaC) flips this script. By defining infrastructure in declarative templates, teams gain consistency, repeatability, and automation.

🎢The global infrastructure as code market size is expected to reach USD 3304.9 million by 2030, at CAGR of 20.3%.- Fortune Business Insights

For data engineering, this means stable pipelines, faster experimentation, and the confidence that your stack won’t collapse under scale. IaC isn’t just a DevOps best practice, which is a critical enabler for modern data platforms.

[playbook]

Infrastructure as Code is the practice of managing and provisioning computing infrastructure (like servers, networks, databases, etc.) using machine-readable configuration files, rather than manually setting things up via graphical interfaces or command-line tools. Think of it like having access to Lego blocks, which you can use to build everything from a house to a racing track. Instead of building the Legos themselves from scratch.

Researches forecast that the IAC market will reach USD 5.5 Billion by 2033 at a CAGR of 18.5% from 2026 to 2033.

Managing infrastructure used to be a backstage job for IT. Now, Infrastructure as Code is front and centre: in platform engineering, developer tooling, and increasingly, the data world too.

Think of it like skyscraper design: it’s not enough to sketch the exterior. You need blueprints for everything; the plumbing, the wiring, the safety exits. Infrastructure today is too complex to be built manually, and IaC is how you tame that complexity.

With declarative approaches, you define what the end state should look like. No need to write line-by-line instructions. That’s a big shift from imperative scripts or tweaking things in the cloud console, which often leads to drift and surprise failures.

Following are the benefits of Infrastructure as code:

✅ Version control (infra lives in Git)

✅ Automation (CI/CD pipelines do the heavy lifting)

✅ Consistency (same infra across dev, test, prod)

✅ Testing & rollback (catch issues before they hit prod)

In short, it’s no longer just about “setting up a Virtual Machine.” It’s about building scalable, reliable, product-grade infrastructure that applies just as much to data pipelines as it does to web apps.

Without IAC (Infrastructure as Code), managing infra is like building a skyscraper on jelly. 🍮

Just as a building needs a solid foundation, Infrastructure as Code stands firm on a few essential pillars. These aren't just best practices. Instead, they are the steel beams holding up your digital skyscraper.

If Infrastructure as Code were a superhero, automation would definitely be its cape. It spins up environments, applies security settings, and rolls out updates across development and production stages without needing a human to push the buttons.

Automation not only provides speed, it's about predictability. Since infrastructure is described in code and managed like code (in IaC implementation), it can be executed automatically through pipelines, versioned, tested, and reused confidently. That’s how automation powers scalable, self-service infrastructure for teams.

Modularity is more of a design principle that says, “Don’t rebuild. Reuse.”

It’s like having a kit of LEGO blocks for your infrastructure. Instead of rewriting the same setup for every environment or service, you gain access to reusable, self-contained modules for things like networks, databases, or Identity and Access Management roles. This not only saves time but also makes your infrastructure easier to maintain and scale in the long run.

Modular design = less duplication, more consistency, and faster onboarding for teams.

Version control is one of the cornerstones of IaC. Imagine keeping a detailed record of every tweak you make to your building plans. Version control does exactly that for your infrastructure: it tracks every change, makes collaboration seamless, and allows you to roll back safely when something goes off-script.

Without version control, all your Infrastructure as Code efforts are nothing more than just scribbles on a napkin, can’t be relied upon or treated as a blueprint. Tools like Git ensure everything is auditable, shareable, and reproducible. Moreover, with infrastructure in the shape of code scripts, it has never been easier to take snapshots of the end-to-end data infrastructure and manage modules and infrastructure deployments as versions.

Once you automate, it’s time to talk about how you write your infrastructure logic. There are two styles: declarative and imperative.

Both can work, but most often or as part of Infrastructure as Code best practices, declarative wins more often as it's easier to maintain, scale, and reason over time.

Idempotency sounds like a mouthful, but it’s a straightforward (and essential) idea: run the same code multiple times, and you should get the same result every time.

Think of it like telling your builder to install a window. Whether you say it once or repeat it five times, you will still end up with one window and not five stacked on top of each other.

This is what makes Infrastructure as Code safe and reliable. It ensures that infrastructure behaves predictably no matter who runs the code or how many times it is executed.

Once you’ve got modular building blocks, it’s time to ensure they are secure by design.

With policy as code, you embed security and compliance rules right into your infrastructure workflows. No more manual reviews or last-minute checklist audits. You write policies once, and it is automatically enforced every time infrastructure changes.

Want to make sure no one accidentally spins up a public S3 bucket? Just write a policy. Need to enforce tagging, region limits, or encryption settings? Also a policy.

Tools like Open Policy Agent, HashiCorp Sentinel, DataOS Heimdall, or AWS Config help you codify governance so that your infrastructure stays secure and compliant.

GitOps is where everything starts coming together.

It takes version control, automation, and policy enforcement and wraps them into a full-fledged operating model. Changes are made via pull requests, reviewed, tested through CI/CD pipelines, and automatically deployed.

This approach means your infrastructure is not only version-controlled but also continuously delivered, just like application code. Git becomes the single source of truth, and you gain traceability, auditability, and safer collaboration between developers and ops teams.

GitOps = fewer surprises, faster rollouts, and more sleep at night.

Lifecycle management is a quiet but crucial principle of knowing when to let go.

It’s not enough to just spin up resources. You also need to clean it up when it is no longer needed. This means de-provisioning unused VMs, tearing down test environments, and monitoring for zombie resources that inflate your cloud bill.

Effective lifecycle management ensures your infrastructure remains lean, cost-efficient, and well-organised, making sure that it doesn’t turn into a cluttered graveyard of forgotten assets.

[report-2025]

The best practices for Infrastructure as Code focus on building robust foundations that will support the data workflows and also let you scale with ease. It also ensures consistency across the board.

Whether you are deploying cloud services, managing datasets, or building complex data pipelines, these best practices lay the very foundation for your success. Let’s jump into some of the best practices that make Infrastructure as Code effective in the data engineering world.

In data engineering, just like in software development, modularity is key. By treating your infrastructure components as reusable, version-controlled modules, you can achieve consistency and avoid the pain of reinventing the wheel.

This approach streamlines workflows and ensures that the infrastructure components are aligned with business requirements. A well-designed infrastructure module must be easy to integrate and reuse. It’s like having a set of standardised building blocks that, no matter how many times you use them, fit well together.

If you're serious about building data products, then design consistency can’t be optional; it must extend all the way down to your infrastructure. The same purpose-driven thinking you apply to product modules: clear ownership, specific intent, minimal surface area, must seep into the scaffolding beneath them.

Infrastructure isn’t just a deployment concern; it’s a design concern. And when done right, the system becomes modular to the last grain. Like Apple’s minimalism, where even the power brick and packaging speak the same design language, your stack should carry the same logic, from your DAG templates to your Terraform modules to your warehouse schemas.

This isn’t just about aesthetics or technical purity. It’s about scalability, comprehension, and control. When your infrastructure is composed of thoughtfully designed, product-like modules (pre-approved, self-documented, interoperable), it unlocks a different speed of execution. Teams stop guessing and start assembling. Drift is minimised, onboarding becomes frictionless, and environments become reliable by default.

One of the biggest mistakes data teams make is treating infrastructure as a separate entity. Instead, all data products should be treated as a cohesive unit, where code, data, metadata, and infrastructure are tightly coupled. This means that your infrastructure is just one part of the larger data ecosystem that powers the business.

When your infrastructure is designed in harmony with the data product it supports, deployment becomes a lot more efficient, and you avoid unexpected errors. Think of it as designing a car where every part works seamlessly together, rather than just adding random parts that may or may not fit.

Today, aligning infrastructure with the core business units is essential for clarity and efficiency. Data products must be built around business use-cases, like Customer360 for instance. IaC enables the coupling of instances of infrastructure resources, such as compute, services, and policies with specific products or business goals.

Aligning infrastructure with business units also facilitates easy scaling. As the business grows and data products evolve, the infrastructure can be scaled to meet growing demands or curbed to curtail unintended expenses. You know which businesses and teams incur the highest infrastructure costs. You know which products, tools, or teams are undergoing infrastructure drifts. Or which initiatives could be archived to re-provision infra to high-yielding workflows.

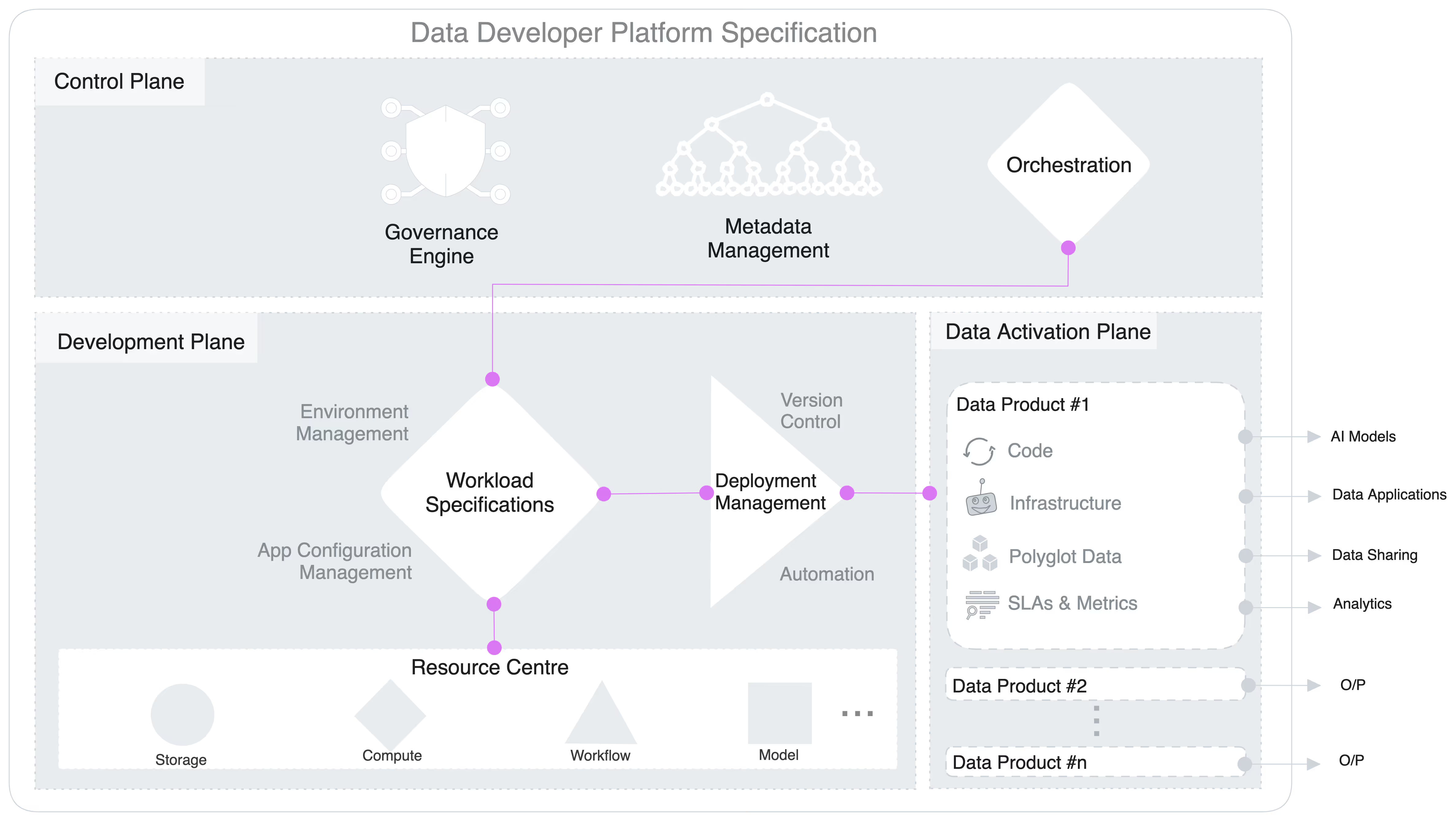

Empowering teams to manage their own infrastructure needs is central to a Data Developer Platform (DDP) approach. By providing pre-defined blueprints for infrastructure, data teams can quickly and easily provision resources without the need to manually set everything up.

These blueprints act like ready-to-use templates, pre-approved for compliance and security, so teams can focus on their core tasks, like building and refining data models without getting bogged down by infrastructure setup.

At the heart of a scalable infrastructure lies observability. With mounting complexities of modern data systems, a clear view into metrics such as performance, resource usage, and potential failures is a must.

Observability should be built into every infrastructure module from the very beginning as it helps to ensure that you are able to monitor the health, troubleshoot issues, and maintain the system's integrity over time. With observation, data governance is equally important. It ensures that your infrastructure is compliant, secure, and aligned with organisational policies.

Whether it is data security, user access control, or compliance requirements, governance is the key to keeping your infrastructure safe. It’s like installing a security system in your infrastructure that ensures everything runs smoothly without any discrepancies.

[related-1]

To make infrastructure scalable and easier to manage, it is important to abstract away unnecessary complexities. Encapsulating complex configurations within product scaffolds, you can make it easier for teams to deploy and maintain their infrastructure without needing deep expertise in every component.

This not only simplifies the entire process and makes it more accessible for a non-technical persona, but it also ensures that everything runs smoothly.

Testing infrastructure is as important as testing the application code itself. Running tests on your Infrastructure as Code before deployment helps identify potential issues, security flaws, or inefficiencies that could impact performance. IaC enables all the abilities of granular code testing. Unit testing, integration testing, functional testing, acceptance testing, and the whole package. Such granularity enables the infrastructure to be stress-tested against thorough edge cases, making the foundation strong and inherently dependable.

When you're building infrastructure for data, it’s not just about making sure the pieces fit. It is also about how well the tools integrate, scale, and work together to create a seamless ecosystem. Luckily, today, we have plenty of tools in the market designed to make life easy. From battle-tested veterans to emerging open-source solutions, let’s break down the key players in the IaC toolkit for data-centric workflows.

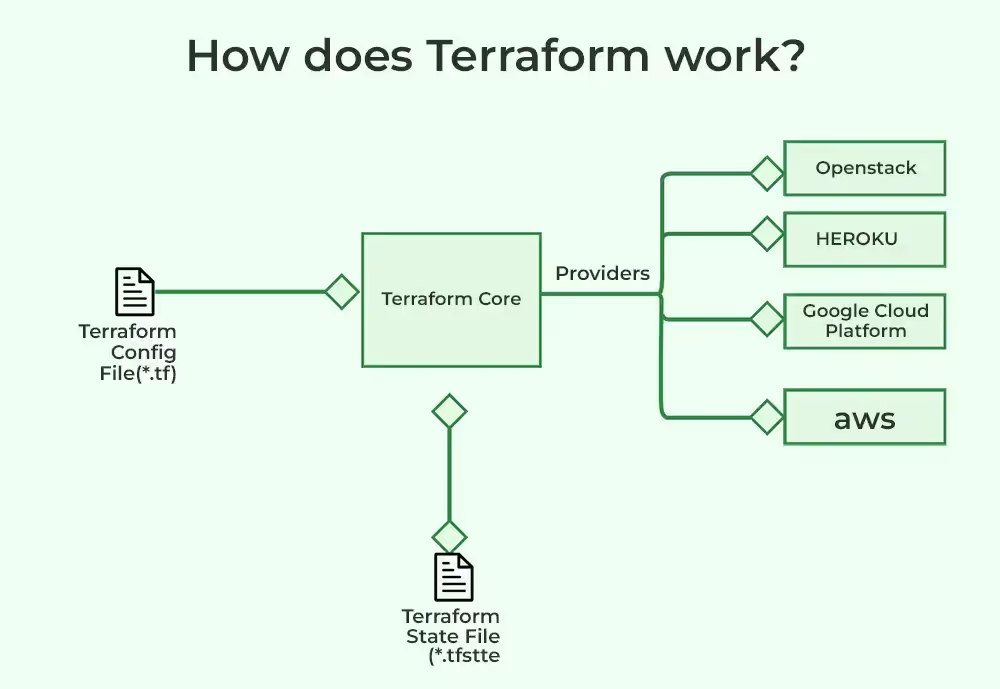

Terraform is the go-to tool for many when it comes to infrastructure as code. It is cloud-agnostic, meaning you don’t have to worry about getting locked into a single provider. Modular and flexible, Terraform helps automate infrastructure provisioning across multiple cloud environments. It is widely recognised for its stability, scalability, and large support community.

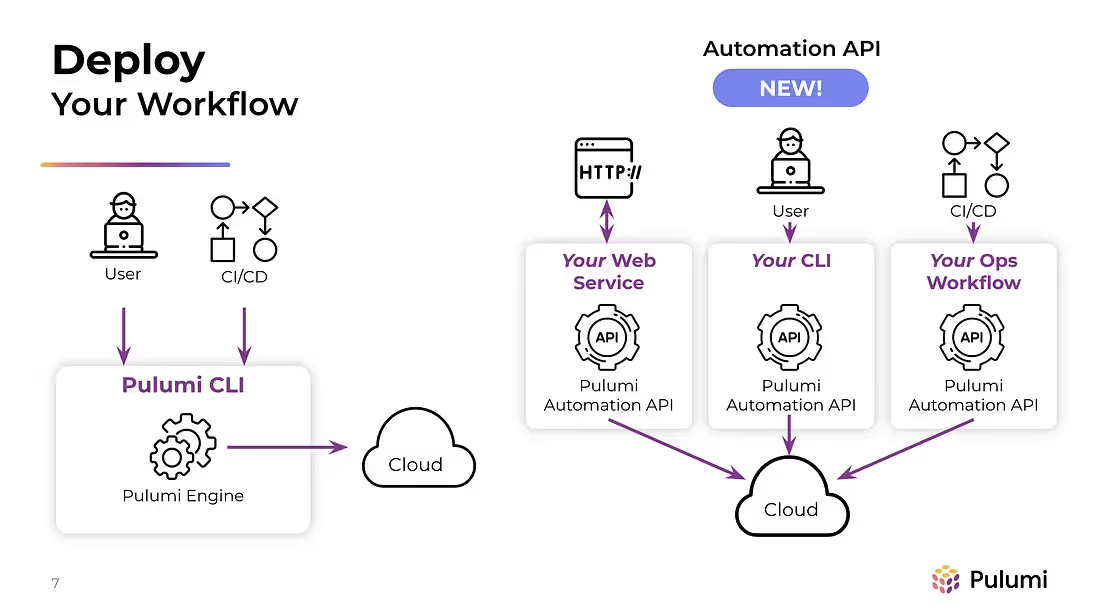

If you prefer to use a language you already know, Pulumi could be your best bet. Data engineers can take advantage of native code debugging, IDE support, and dynamic infrastructure without having to learn a new Domain Specific Language, making it a great choice for teams already comfortable with software engineering practices.

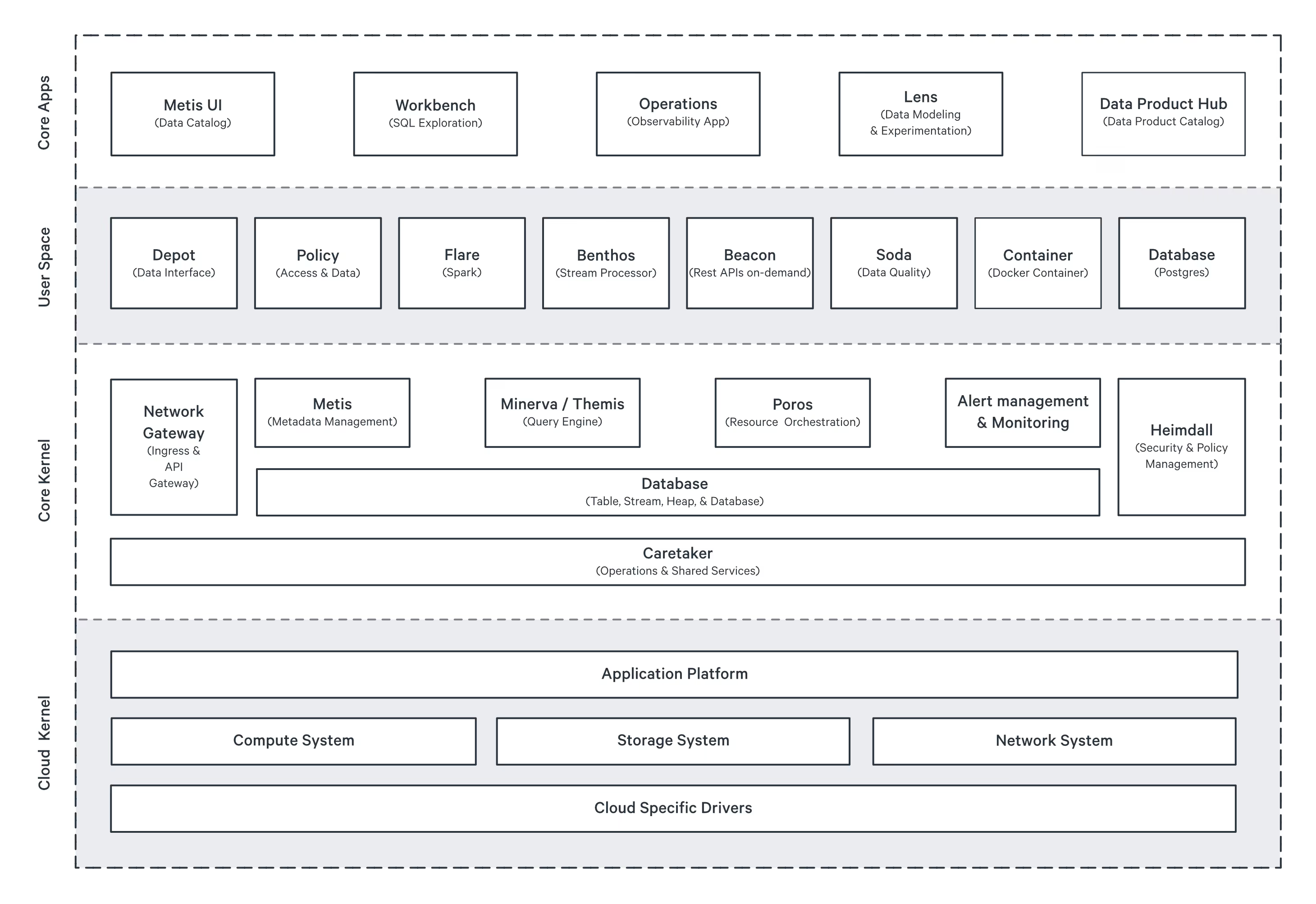

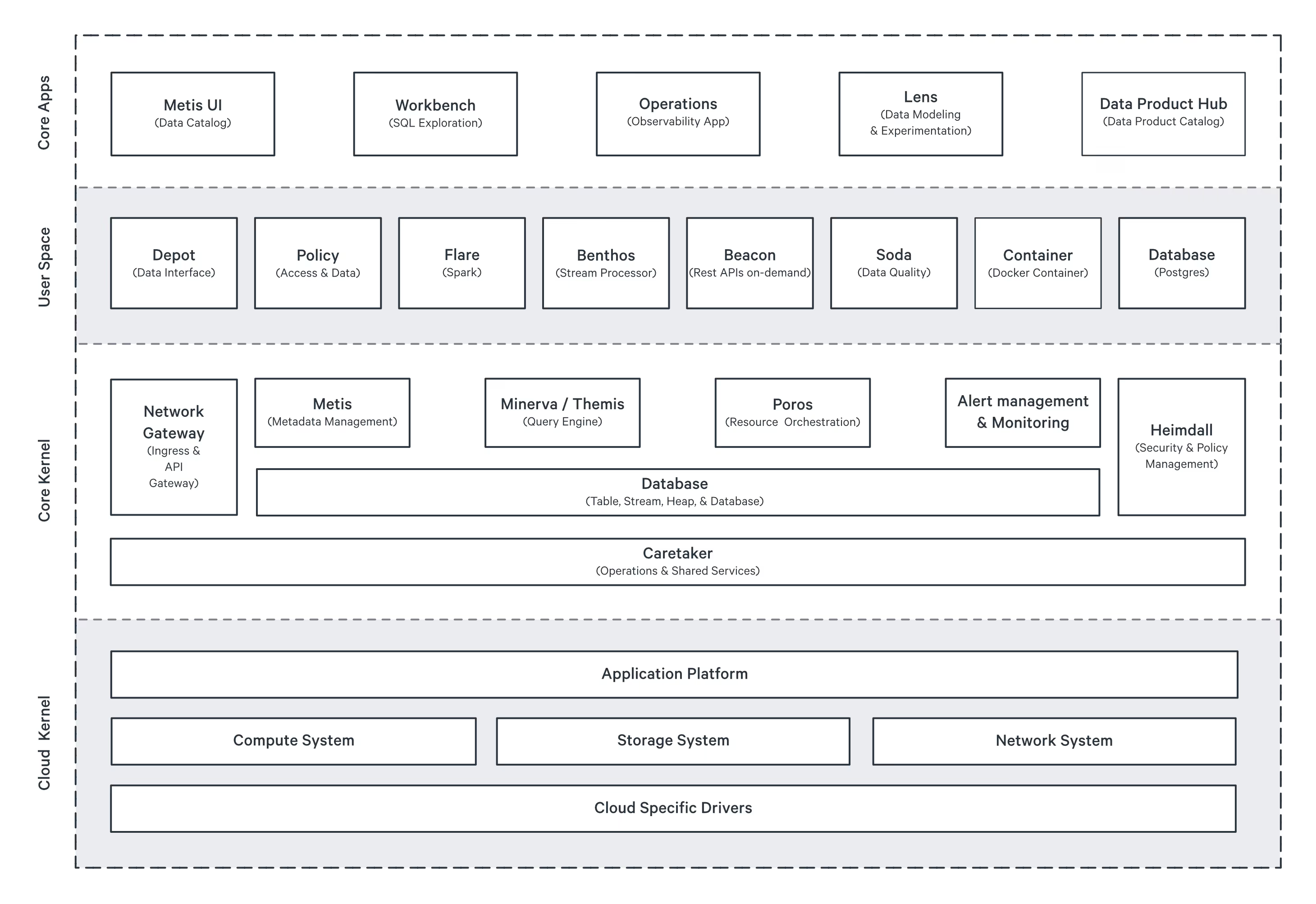

DataOS takes IaC for data engineering to the next level. It abstracts out much of the complexity and allows you to focus on building data products rather than worrying about infrastructure details. It enables ready-to-use infrastructure resources that data engineers directly call for building modular solutions for data products, saving time and reducing errors. The platform is non-disruptive, meaning you can implement IaC without overhauling existing workflows.

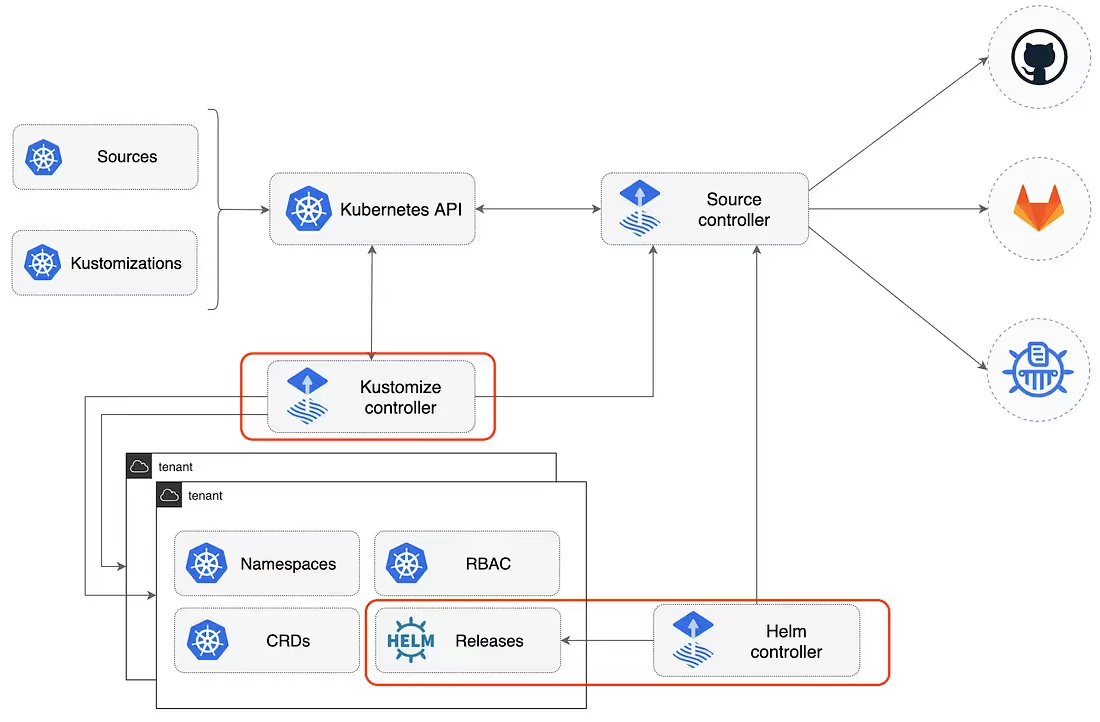

For those working with orchestration solutions, Helm and Kustomize are indispensable. Helm packages everything needed for an application (from resources to configurations), and Kustomize gives you the flexibility to customise them as per the need. Both tools make IaC simpler when dealing with containerised data processing workloads.

Each cloud provider has its own set of native tools that enable IaC within their ecosystems. Tools like AWS CDK, GCP Deployment Manager, and Azure Bicep bring IaC capabilities to each respective cloud platform, making it easier to define infrastructure resources in the context of a specific provider. They allow for deep integration with the cloud environment while enabling programmatic infrastructure provisioning, ensuring that data engineers can build within the cloud-native ecosystem.

Open-source trends are pushing the boundaries of IaC in new and exciting ways. Crossplane offers a unified approach to managing infrastructure across cloud providers, while Data Developer Platforms (DDPs) promise to streamline development workflows by bringing infrastructure and software engineering principles together. These emerging tools aim to provide even more flexibility, abstraction, and integration for data engineers managing complex, cross-cloud data workflows.

Whether you are automating infrastructure across multiple clouds or simplifying deployment in a Kubernetes environment, the right tools can help make your IaC workflows smoother, faster, and more efficient.

[report-2025]

Modern data products are modular units: infrastructure is one of four parts. Data Products are not just pipelines; they consist of code, data, metadata, and infrastructure. IaC ensures each part works seamlessly together, providing a solid foundation for scaling.

IaC helps data products become self-contained, composable, and environment-aware. IaC makes data products self-contained by combining code, data, and infrastructure in one package. This ensures they are adaptable to any environment and easy to deploy.

IaC enables product-aligned provisioning: infra tied to business, not just pipelines. IaC ties infrastructure provisioning to business units, ensuring that each team gets infrastructure tailored to its specific needs, not just pipeline support.

Examples:

This modularity unlocks data developer platforms for self-serve, governed experiences. IaC enables data developer platforms that allow teams to self-serve, provisioning infrastructure quickly and consistently while maintaining governance.

The Data Developer Platform (DDP) is the backbone for data teams. It’s a standard for data platforms enabling modularity at scale. Powered by Infrastructure as Code best practices, DDP makes self-service infrastructure a reality. Data engineers can provision what they need without waiting for lengthy setup processes, all while maintaining observability and compliance.

Think of DDPs as the foundational data platform for streamlining and scaling business use cases with data. They standardise the deployment of data product bundles across different domains, making it easy to replicate solutions and push them live. For instance, templates like a customer metrics product are already pre-configured and ready to deploy, saving teams time and effort.

With unified provisioning, the DDP ensures that infrastructure, code, and metadata are managed together, making the whole process more efficient. On top of that, policy-as-code is integrated into every deployment, ensuring secure-by-default practices are followed across the board.

[related-2]

In real-world use, IaC within DDPs allows platform teams to democratise infrastructure. Rather than relying on a handful of experts, IaC gives all data engineers the power to provision resources, putting control in the hands of those who know the data best.

The shift from managing infrastructure as scripts to treating it as productised modules is nothing short of transformative. With IaC, infrastructure isn’t just something you configure once and forget about. It becomes a set of reusable, modular components that drive efficiency and scalability. This shift is what allows modern data teams to move quickly, while staying in control of complex environments.

IaC doesn’t just govern data products. It enables them to be repeatable, composable, and tightly aligned to business needs. In a world where data complexity is increasing by the day, IaC is the foundation that supports reproducibility and scalability across the entire data ecosystem.

Looking ahead, the future is clear: data engineers must think in terms of products and platforms. IaC is the enabler that makes this mindset a reality, providing the tools necessary to build, manage, and scale data ecosystems in ways that were previously unimaginable.

IaC refers to the practice that includes defining and managing infrastructure through code for consistency and automation. DevOps is the broader culture and workflow that unites development and operations. IaC is one of the core tools that makes DevOps executable at scale.

Immutable infrastructure means servers or resources are never changed after deployment; updates come by replacing them with new versions. Mutable infrastructure allows in-place changes and patches, which can drift from the original configuration over time.

.avif)

Staff Engineer - CTO Office

Staff Engineer - CTO Office

Find more community resources

Find all things data products, be it strategy, implementation, or a directory of top data product experts & their insights to learn from.

Connect with the minds shaping the future of data. Modern Data 101 is your gateway to share ideas and build relationships that drive innovation.

Showcase your expertise and stand out in a community of like-minded professionals. Share your journey, insights, and solutions with peers and industry leaders.